InfiniBand is a high-speed data transfer technology that enables fast and efficient communication between servers, storage devices, and other computing systems. Unlike Ethernet, a popular networking technology for local area networks (LANs), InfiniBand is specifically designed to connect servers and storage clusters in high-performance computing (HPC) environments.

InfiniBand uses a layered architecture in which the physical and link layers are separate from the network layer, with transport and upper-layer protocols above them. The physical layer uses high-bandwidth serial links to provide direct point-to-point connectivity between devices, while the link layer handles the transmission and reception of data packets across each link.

Building on these layers, InfiniBand provides its critical features, including virtualization, quality of service (QoS), and remote direct memory access (RDMA). These features make InfiniBand a powerful tool for HPC workloads that require low latency and high bandwidth.


One of the critical features of InfiniBand is its high bandwidth. HDR InfiniBand supports data transfer rates of up to 200 gigabits per second (Gbps) per port, and NDR doubles that to 400 Gbps, considerably faster than many traditional Ethernet deployments.

InfiniBand also supports QoS, which lets administrators prioritize traffic and allocate bandwidth as needed. This ensures that critical workloads receive the bandwidth they need and that non-critical workloads do not consume more than their fair share of resources.
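As a rough illustration of how bandwidth can be shared between traffic classes, the sketch below drains per-lane packet queues with a weighted round-robin scheduler. It is a simplification under stated assumptions: the lane names and weights are hypothetical, weights count packets rather than bytes, and the more elaborate arbitration tables that the InfiniBand specification actually defines are not modeled.

```python
from collections import deque


def weighted_round_robin(queues: dict[str, deque], weights: dict[str, int]) -> list:
    """Send packets from per-lane queues until all are empty.

    In each round, a lane may send up to weights[lane] packets, so a lane
    with a larger weight gets a larger share of the link, while lanes with
    smaller weights still make progress (no starvation).
    """
    if any(w <= 0 for w in weights.values()):
        raise ValueError("weights must be positive")
    sent = []
    while any(queues[lane] for lane in weights):
        for lane, weight in weights.items():
            queue = queues[lane]
            for _ in range(min(weight, len(queue))):
                sent.append(queue.popleft())
    return sent


# Hypothetical lanes: "VL0" carries latency-critical traffic, "VL1" bulk traffic.
queues = {
    "VL0": deque(["a1", "a2", "a3", "a4"]),
    "VL1": deque(["b1", "b2", "b3", "b4"]),
}
print(weighted_round_robin(queues, {"VL0": 3, "VL1": 1}))
# ['a1', 'a2', 'a3', 'b1', 'a4', 'b2', 'b3', 'b4']
```

The higher-weight lane sends three packets for every one from the bulk lane while both have traffic, and the bulk lane takes the whole link once the critical queue is empty.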

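Another essential feature of InfiniBand is its support for traffic separation and isolation. Each physical link carries several virtual lanes (VLs), independent logical channels with their own buffers, so that different traffic classes can share a link without blocking each other. Partitions, identified by partition keys (P_Keys), go further and isolate groups of nodes from one another. Together, these mechanisms let multiple workloads run on the same InfiniBand network without interfering with each other.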
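InfiniBand isolates groups of nodes with partitions: each port holds 16-bit partition keys (P_Keys), where the low 15 bits name the partition and the top bit marks full (1) or limited (0) membership. Two ports may communicate only if they share a partition and at least one of them is a full member. The function below is a minimal sketch of that membership rule; it is not part of any real API, and it ignores details such as per-port P_Key tables and invalid key values.

```python
FULL_MEMBER_BIT = 0x8000   # top bit: 1 = full member, 0 = limited member
PARTITION_MASK = 0x7FFF    # low 15 bits: partition number


def pkeys_can_communicate(pkey_a: int, pkey_b: int) -> bool:
    """Return True if two ports holding these P_Keys may exchange packets."""
    same_partition = (pkey_a & PARTITION_MASK) == (pkey_b & PARTITION_MASK)
    # Two limited members of the same partition may not talk to each other.
    at_least_one_full = bool((pkey_a | pkey_b) & FULL_MEMBER_BIT)
    return same_partition and at_least_one_full


print(pkeys_can_communicate(0x8001, 0x0001))  # True: full + limited member
print(pkeys_can_communicate(0x0001, 0x0001))  # False: both limited
print(pkeys_can_communicate(0x8001, 0x8002))  # False: different partitions
```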

The InfiniBand architecture is based on a layered approach that separates the physical, data link, and network layers. The physical layer provides the physical connectivity between devices, while the data link layer handles the transmission and reception of data packets.

The transport layer, implemented in hardware on the host channel adapter, provides InfiniBand’s best-known feature: RDMA. RDMA lets one device read or write the memory of another device directly, without involving the remote CPU or operating system. Because data moves straight between application buffers instead of being copied through kernel buffers, RDMA can significantly reduce latency and increase performance.

The InfiniBand architecture also includes the concept of switches, which are used to connect multiple devices. InfiniBand switches are designed to provide low-latency, high-bandwidth connectivity between devices, making them ideal for HPC workloads.

While Ethernet is a popular networking technology for LANs, it was not originally designed for the performance requirements of HPC workloads. Traditional Ethernet deployments typically have higher latency and, historically, lower per-port bandwidth than InfiniBand, making them less suitable for latency-sensitive, data-intensive workloads.

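In addition, standard Ethernet does not natively provide RDMA or lossless delivery, both of which matter for HPC workloads that require low latency and high bandwidth. Ethernet needs extensions for these: RoCE or iWARP for RDMA, and Data Center Bridging (for example, priority flow control) for lossless operation.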

RDMA is a crucial feature of InfiniBand that allows a device to access the memory of another device directly. With RDMA, data moves between machines without involving the remote host’s CPU or operating system, significantly reducing latency and increasing performance.

RDMA in InfiniBand works in two steps. First, the target application registers a memory region with its adapter, which returns a remote key (rkey), and sends the region’s address and rkey to the peer, typically in an ordinary send/receive message. Second, the initiator posts RDMA read or write operations that carry that address and rkey; the target adapter checks the key and access permissions and then reads or writes the memory directly, without involving the target CPU.
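The two steps above can be modeled in a few lines. The sketch below is a toy model, not the verbs API: `ToyTargetHCA`, `register`, `rdma_write`, and `rdma_read` are hypothetical names. It captures the essential idea that a registered region is identified by a remote key (rkey), and that the key, the address range, and the access rights are checked on every incoming RDMA operation. In real hardware these checks run on the target adapter, not on the target CPU.

```python
from itertools import count


class RemoteAccessError(Exception):
    """Raised when an RDMA request fails the target's key or bounds check."""


class ToyTargetHCA:
    """Hypothetical model of a target adapter's memory-registration table."""

    def __init__(self, memory_size: int):
        self.memory = bytearray(memory_size)
        self._regions = {}                 # rkey -> (start, length, remote_write)
        self._next_key = count(0x1000)     # real adapters choose keys themselves

    def register(self, start: int, length: int, remote_write: bool) -> int:
        """Step 1: register a region and return the rkey to send to the peer."""
        if start < 0 or length <= 0 or start + length > len(self.memory):
            raise ValueError("region lies outside target memory")
        rkey = next(self._next_key)
        self._regions[rkey] = (start, length, remote_write)
        return rkey

    def _check(self, rkey: int, addr: int, length: int, write: bool) -> None:
        if rkey not in self._regions:
            raise RemoteAccessError("unknown rkey")
        start, size, remote_write = self._regions[rkey]
        if addr < start or addr + length > start + size:
            raise RemoteAccessError("access outside the registered region")
        if write and not remote_write:
            raise RemoteAccessError("region not registered for remote write")

    def rdma_write(self, rkey: int, addr: int, data: bytes) -> None:
        """Step 2 (write): place data directly into registered memory."""
        self._check(rkey, addr, len(data), write=True)
        self.memory[addr:addr + len(data)] = data

    def rdma_read(self, rkey: int, addr: int, length: int) -> bytes:
        """Step 2 (read): return bytes directly from registered memory."""
        self._check(rkey, addr, length, write=False)
        return bytes(self.memory[addr:addr + length])


target = ToyTargetHCA(64)
rkey = target.register(start=16, length=16, remote_write=True)  # sent to the peer
target.rdma_write(rkey, 16, b"hello")
print(target.rdma_read(rkey, 16, 5))  # b'hello'
```

A write that strays past byte 31, or one that uses a key the target never issued, raises `RemoteAccessError`, which mirrors how the adapter rejects unauthorized remote accesses.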

RDMA is particularly useful for HPC workloads that require low latency because it avoids intermediate copies through operating-system buffers, which add latency. RDMA also reduces CPU utilization by offloading data transfer tasks to the network hardware, freeing CPU resources for other jobs.

As the demand for faster data transfer speeds grows, InfiniBand network systems have become an increasingly popular solution for data centers. With its high-speed connections, InfiniBand is an excellent choice for applications that require low latency and high throughput.

One of the critical elements of an InfiniBand network system is its switches. InfiniBand switches forward packets between the devices connected to the network. They operate at the link layer: each packet carries a destination local identifier (LID), and the switch looks it up in a forwarding table programmed by the subnet manager to select an output port.
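Switch forwarding can be sketched as a table lookup. In InfiniBand, each end port is assigned a local identifier (LID), and each switch holds a forwarding table, computed by the subnet manager, that maps a destination LID to an output port. The two-switch topology, LIDs, and port numbers below are hypothetical, chosen only to show how a packet is handed from switch to switch until it reaches its end node.

```python
# Hypothetical forwarding tables: destination LID -> output port.
FORWARDING = {
    "switchA": {1: 1, 2: 2, 3: 3, 4: 3},
    "switchB": {1: 3, 2: 3, 3: 1, 4: 2},
}

# Hypothetical cabling: (switch, port) -> whatever is plugged into that port.
LINKS = {
    ("switchA", 1): "host-lid1", ("switchA", 2): "host-lid2", ("switchA", 3): "switchB",
    ("switchB", 1): "host-lid3", ("switchB", 2): "host-lid4", ("switchB", 3): "switchA",
}


def trace(first_switch: str, dest_lid: int, max_hops: int = 16):
    """Follow forwarding-table lookups from first_switch to the destination."""
    hops = []
    node = first_switch
    for _ in range(max_hops):
        port = FORWARDING[node].get(dest_lid)
        if port is None:
            raise LookupError(f"{node} has no route to LID {dest_lid}")
        hops.append((node, port))
        neighbor = LINKS[(node, port)]
        if neighbor not in FORWARDING:   # not a switch, so it is the end node
            return hops, neighbor
        node = neighbor
    raise RuntimeError("hop limit exceeded; the tables may contain a loop")


print(trace("switchA", 3))
# ([('switchA', 3), ('switchB', 1)], 'host-lid3')
```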

InfiniBand switches come in various sizes and configurations, from small switches for connecting a handful of servers to large modular switches for building high-performance clusters. They also include Quality of Service (QoS) features, which allow administrators to prioritize traffic and manage network resources effectively.

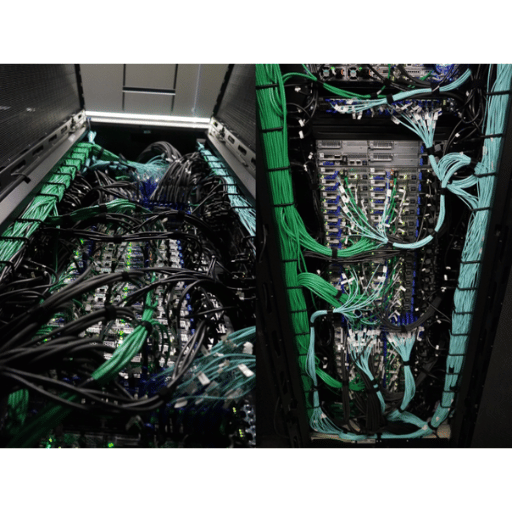

InfiniBand cables and connectors are the network’s backbone, providing high-speed data transfer between devices. These cables come in various lengths, from a few feet to hundreds of feet, allowing for flexible network configurations.

Some InfiniBand cables use active copper technology: electronics in the connectors amplify and condition the signal, improving signal integrity and reducing signal loss so the cable can reach farther than a passive copper cable.

InfiniBand adapters, known as host channel adapters (HCAs), are the network interface cards (NICs) that connect devices to InfiniBand networks. They are designed to deliver high data transfer rates, low latency, and low CPU utilization.

InfiniBand adapters come in various configurations, from single-port adapters to quad-port adapters. The adapters also support different speeds, ranging from 10 Gb/s to 200 Gb/s. This flexibility makes the network easier to design and scale.


InfiniBand technology has become a popular choice for cluster computing, which involves connecting multiple computers to work together as a single system. InfiniBand’s low latency and high throughput make it an ideal choice for applications that require real-time data processing, such as financial trading and scientific simulations.

InfiniBand supports message passing (for example, MPI) and remote direct memory access (RDMA), allowing efficient communication between cluster nodes. This efficiency results in higher performance and faster data processing.

Building InfiniBand Networks in Data Centers

Several key elements are required to build an InfiniBand network in a data center, including switches, cables, connectors, and adapters, plus a subnet manager, running on a switch or a host, that discovers the fabric and configures addressing and routing. It is also essential to have a thorough understanding of the network topology and configuration.
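Topology planning often starts with simple arithmetic. As an illustration, the sketch below sizes a two-level, non-blocking fat tree (leaf and spine switches) built from identical k-port switches. It assumes that each leaf uses half its ports for hosts and half for uplinks, and that every spine connects once to every leaf. Under those assumptions the fabric has k/2 spines, k leaves, and k*k/2 hosts. The port counts in the example are illustrative, not tied to a particular product.

```python
def two_level_fat_tree(ports_per_switch: int) -> dict:
    """Size a non-blocking leaf/spine fabric built from identical switches."""
    k = ports_per_switch
    if k <= 0 or k % 2:
        raise ValueError("port count must be a positive even number")
    half = k // 2
    spines = half            # each leaf has k/2 uplinks, one to each spine
    leaves = k               # each spine has k ports, one to each leaf
    hosts = leaves * half    # each leaf has k/2 host-facing ports
    return {
        "spines": spines,
        "leaves": leaves,
        "hosts": hosts,
        "leaf_spine_links": leaves * half,
    }


for k in (40, 64):
    print(k, two_level_fat_tree(k))
# 40 {'spines': 20, 'leaves': 40, 'hosts': 800, 'leaf_spine_links': 800}
# 64 {'spines': 32, 'leaves': 64, 'hosts': 2048, 'leaf_spine_links': 2048}
```

A smaller cluster simply uses fewer leaves; beyond k*k/2 hosts, this design needs a third switch tier.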

One of the advantages of InfiniBand networks is their modular design. Administrators can add or remove switches, cables, and adapters with limited disruption, because InfiniBand’s subnet manager detects topology changes and recomputes routes.

When it comes to high-performance computing, speed and low latency are crucial factors that can make or break the success of an application. One technology that has transformed high-performance computing is InfiniBand, a network architecture designed to move data with very high bandwidth and low latency.

The InfiniBand Trade Association (IBTA) was founded in 1999 to develop and publish specifications for InfiniBand technology. The IBTA comprises industry leaders from various companies, including IBM, Intel, Mellanox Technologies, Oracle, Sepaton, and many others. The primary goals of the IBTA are to establish InfiniBand as the premier network for high-performance computing and to foster innovation in the field.

To achieve these goals, the IBTA has established a series of InfiniBand specifications that define the requirements for InfiniBand hardware and software. These specifications cover a range of features, including bandwidth, latency, power consumption, and more. The IBTA regularly updates its specifications to keep up with technological advancements.

One of the fundamental aspects of InfiniBand technology is its ability to transmit data at very high speeds. The IBTA has defined several InfiniBand data rates that set the maximum bandwidth of InfiniBand links. The early generations were Single Data Rate (SDR), Double Data Rate (DDR), and Quadruple Data Rate (QDR).

SDR has a maximum bandwidth of 10 Gbps (gigabits per second) and a latency of less than five microseconds (μs). DDR doubles this to 20 Gbps, with a latency of less than three μs. QDR, the fastest of the three, reaches 40 Gbps with a latency of less than two μs. These bandwidth figures are signaling rates for the common 4x link width; because these generations use 8b/10b encoding, the usable data rate is 80% of the signaling rate (for example, 32 Gbps for QDR).
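The relationship between signaling rate and usable data rate is simple arithmetic. The sketch below assumes the common 4x link width, per-lane signaling rates of 2.5, 5, and 10 Gbps for SDR, DDR, and QDR, and 8b/10b encoding (8 data bits carried in every 10 transmitted bits).

```python
# Per-lane signaling rates in Gbit/s for the SDR, DDR, and QDR generations.
LANE_RATE_GBPS = {"SDR": 2.5, "DDR": 5.0, "QDR": 10.0}


def link_rates(generation: str, lanes: int = 4,
               data_bits: int = 8, coded_bits: int = 10) -> tuple[float, float]:
    """Return (signaling rate, usable data rate) in Gbit/s for one link."""
    signaling = LANE_RATE_GBPS[generation] * lanes
    data = signaling * data_bits / coded_bits   # strip line-coding overhead
    return signaling, data


for gen in LANE_RATE_GBPS:
    signaling, data = link_rates(gen)
    print(f"{gen}: {signaling:g} Gbps signaling, {data:g} Gbps data")
# SDR: 10 Gbps signaling, 8 Gbps data
# DDR: 20 Gbps signaling, 16 Gbps data
# QDR: 40 Gbps signaling, 32 Gbps data
```

Passing `data_bits=64, coded_bits=66` shows why the 64b/66b encoding used by later generations matters: the coding overhead drops from 20% to about 3%.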

A more recent InfiniBand specification is High Data Rate (HDR). This specification was introduced in 2017 and has been widely adopted in high-performance computing. HDR has a maximum bandwidth of 200 Gbps and a latency of less than one μs, five times the bandwidth of QDR. HDR also uses a more efficient encoding scheme than QDR, reducing the overhead of transmitting data.

The next InfiniBand specification, Next Data Rate (NDR), delivers a maximum bandwidth of 400 Gbps with a latency of less than one μs. NDR uses PAM4 signaling, which encodes two bits per symbol instead of one and so doubles the data carried at a given symbol rate; the trade-off is a smaller noise margin, which is offset with forward error correction.
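To make the PAM4 idea concrete, the sketch below maps pairs of bits onto four amplitude levels and back. It uses a generic Gray-coded mapping, in which neighboring levels differ in only one bit, so a small amplitude error usually corrupts a single bit. The exact levels, symbol rates, and forward error correction specified for InfiniBand are not modeled here.

```python
# Generic Gray-coded PAM4 mapping: neighboring levels differ in one bit.
BITS_TO_LEVEL = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}
LEVEL_TO_BITS = {level: bits for bits, level in BITS_TO_LEVEL.items()}


def pam4_encode(bits: list[int]) -> list[int]:
    """Turn every two bits into one four-level symbol."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [BITS_TO_LEVEL[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def pam4_decode(samples: list[float]) -> list[int]:
    """Snap each (possibly noisy) sample to the nearest level and recover two bits."""
    bits = []
    for sample in samples:
        nearest = min(LEVEL_TO_BITS, key=lambda level: abs(level - sample))
        bits.extend(LEVEL_TO_BITS[nearest])
    return bits


data = [1, 0, 0, 1, 1, 1, 0, 0]
symbols = pam4_encode(data)
print(symbols)                                      # [3, -1, 1, -3]
print(pam4_decode([2.6, -0.8, 1.3, -2.9]) == data)  # True: small noise is tolerated
```

Eight bits travel as four symbols, half as many as two-level signaling would need, which is where the doubling of bits per symbol comes from.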

So, how does InfiniBand enable low-latency, high-performance computing? It relies on several key features, including hardware offloads, remote direct memory access (RDMA), and congestion control.

Hardware offloads allow InfiniBand network adapters to take over tasks traditionally performed by the CPU, such as splitting messages into packets and reassembling them, reliable delivery (acknowledgments and retransmission), and checksum (CRC) calculation. This offloading reduces the CPU’s workload and frees up resources for other jobs, improving overall system performance.
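One of the tasks an InfiniBand adapter performs in hardware is splitting a message into packets no larger than the path MTU and stamping each with a packet sequence number (PSN), which the receiver uses to detect missing or out-of-order packets. The sketch below models only that bookkeeping in software, assuming a 24-bit PSN that wraps around; acknowledgments, retransmission, and CRC checks are left out, and the function names are hypothetical.

```python
PSN_MODULUS = 1 << 24  # packet sequence numbers are 24 bits wide


def segment(message: bytes, mtu: int, first_psn: int) -> list[tuple[int, bytes]]:
    """Split a message into (psn, payload) packets of at most mtu bytes."""
    if mtu <= 0:
        raise ValueError("mtu must be positive")
    packets = []
    psn = first_psn % PSN_MODULUS
    for offset in range(0, len(message), mtu):
        packets.append((psn, message[offset:offset + mtu]))
        psn = (psn + 1) % PSN_MODULUS   # wrap around after 2**24 - 1
    return packets


def reassemble(packets: list[tuple[int, bytes]], first_psn: int) -> bytes:
    """Rebuild the message, rejecting gaps or reordering in the PSN sequence."""
    expected = first_psn % PSN_MODULUS
    chunks = []
    for psn, payload in packets:
        if psn != expected:
            raise ValueError(f"expected PSN {expected}, got {psn}")
        chunks.append(payload)
        expected = (expected + 1) % PSN_MODULUS
    return b"".join(chunks)


message = bytes(range(256)) * 40          # 10,240-byte message
packets = segment(message, mtu=4096, first_psn=PSN_MODULUS - 1)
print([(psn, len(payload)) for psn, payload in packets])
# [(16777215, 4096), (0, 4096), (1, 2048)]
print(reassemble(packets, PSN_MODULUS - 1) == message)  # True
```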

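RDMA allows applications on one device to access the memory of another device directly. This direct access bypasses the remote CPU and the operating system’s network stack, reducing latency and improving overall system performance.

Congestion control is also critical for managing traffic in high-performance computing environments. In InfiniBand’s congestion control scheme, a switch that detects congestion marks packets with a forward explicit congestion notification (FECN); the receiver returns a backward explicit congestion notification (BECN) to the sender, which temporarily slows its injection rate. This keeps congestion from spreading through the fabric while letting traffic return to full speed once it clears.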
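On the sender side, InfiniBand congestion control can be pictured as a small feedback loop: each backward congestion notification (BECN) the sender receives pushes it one or more steps up a table of inter-packet delays, and a recovery timer steps it back down once the notifications stop. The sketch below is a simplified model of that loop; the class name, delay values, and step size are hypothetical, and in a real fabric these parameters are configured by fabric management software.

```python
class ToyCongestionControl:
    """Simplified sender-side reaction to BECN marks (hypothetical parameters)."""

    def __init__(self, delay_table_ns: list[int], increase: int = 1):
        self.delay_table_ns = delay_table_ns  # index -> gap inserted between packets
        self.increase = increase              # steps taken per BECN received
        self.index = 0                        # 0 = no throttling

    def on_becn(self) -> None:
        """Congestion reported: move to a longer inter-packet delay."""
        self.index = min(self.index + self.increase, len(self.delay_table_ns) - 1)

    def on_recovery_timer(self) -> None:
        """No recent marks: step back toward full speed."""
        self.index = max(self.index - 1, 0)

    def delay_ns(self) -> int:
        return self.delay_table_ns[self.index]


cc = ToyCongestionControl([0, 50, 100, 200, 400], increase=2)
delays = []
for event in ["becn", "becn", "becn", "timer", "timer", "timer", "timer"]:
    if event == "becn":
        cc.on_becn()
    else:
        cc.on_recovery_timer()
    delays.append(cc.delay_ns())
print(delays)  # [100, 400, 400, 200, 100, 50, 0]
```

Throttling ramps up quickly and recovers one step at a time, so a briefly congested sender backs off fast but does not return to full rate in a single jump.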


InfiniBand technology has revolutionized how supercomputers, storage systems, data centers, and clusters operate. Its high-speed data transfer capabilities make it a key solution in several industries.

InfiniBand was introduced in 1999 as a high-speed interconnect technology for parallel computing environments. It is a low-latency, high-bandwidth technology that rapidly transfers large amounts of data between systems. The InfiniBand architecture uses a switched fabric topology that allows multiple hosts to communicate simultaneously and independently. It is distinguished from competing technologies such as Ethernet and Fibre Channel by its low latency and high bandwidth.

InfiniBand powers many of the world’s fastest supercomputers. It allows for a high degree of parallelism, making it possible to process large amounts of data simultaneously. InfiniBand plays a central role in the design of high-performance computing (HPC) systems and is used in many fields of scientific research, such as physics, biology, and meteorology. On the Top500 list, which ranks the fastest supercomputers in the world, InfiniBand interconnects a large share of the listed systems.

InfiniBand technology is also a vital component in high-performance storage systems. InfiniBand’s high-speed technology enables fast data communication between servers and storage systems. Low latency and high bandwidth are particularly useful for data-intensive applications that require quick access to large amounts of data. InfiniBand technology can also help reduce storage system latency in database applications, enabling more rapid processing of queries and transactions.

InfiniBand technology connects servers and other devices, such as storage systems, in data center and cluster environments. InfiniBand’s high-speed communication capabilities enable clusters to operate as a single entity, enhancing performance for applications that require parallel computing. Furthermore, InfiniBand provides a reliable and scalable interconnect for virtualization platforms, enabling more efficient use of server resources.

InfiniBand’s high-bandwidth and low-latency technology plays a significant role in HPC workloads. It enables large amounts of data to be processed simultaneously, increasing the performance of scientific research applications. InfiniBand is also used in financial modeling, oil and gas exploration, and other data-intensive workloads.

InfiniBand technology has continued to advance over the years, from its early days in parallel computing environments to its widespread use in HPC and data center environments. The latest InfiniBand technology provides data transfer speeds of up to 400 gigabits per second (Gbps) per port, enabling faster data processing. InfiniBand’s low latency and high bandwidth may also prove relevant in emerging quantum computing environments, where the technology could enable faster communication between quantum processors and classical computers.

The two leading players in the InfiniBand market have historically been Mellanox Technologies (now part of NVIDIA) and Intel Corp. Other notable companies include IBM, Cray, Penguin Computing, and Fujitsu. According to MarketWatch, the global InfiniBand market is expected to grow at a compound annual growth rate (CAGR) of 18.1% between 2021 and 2026.

InfiniBand technology has come a long way since its inception in the early 2000s. Originally designed as a high-speed interconnect for server clusters, its capabilities have expanded to accommodate the needs of a wide range of computing applications. This section will discuss the future trends of this technology and its growing significance in modern computing.

InfiniBand technology has undergone significant improvements over the years. Recent versions of InfiniBand, such as HDR (200 Gbps) and NDR (400 Gbps), provide very high data transfer rates, low latency, and high-bandwidth performance. These improvements have been achieved through constant collaboration between industry stakeholders, standardization bodies, and research communities.

One of the most significant advancements in InfiniBand technology is the development of new interfaces and connectors. Vendors such as Mellanox and Intel have introduced adapters and cables that make connecting InfiniBand devices to computing systems easier. An excellent example of a new interface is the M2PCIE3 card presented by Mellanox, which enables users to connect InfiniBand devices to PCIe 3.0 slots.

InfiniBand stands out among high-speed interconnects for its combination of low latency and high bandwidth. Other technologies, such as Ethernet and Fibre Channel, have generally not matched InfiniBand’s latency and interconnect performance in HPC settings. InfiniBand’s Remote Direct Memory Access (RDMA) lets it move data efficiently without involving the host CPU in each transfer, reducing overhead and speeding up data transfer.

Low latency and high bandwidth are crucial in modern computing, especially in applications that rely on real-time data processing and analytics. InfiniBand’s ability to provide high-performance connectivity without compromising speed or latency makes it a popular solution in high-performance computing clusters, cloud computing, and data centers.

InfiniBand technology’s significance in modern computing will likely grow in the coming years, driven by the increasing need for high-performance computing and data processing. With the rise of the Internet of Things, big data analytics, and artificial intelligence, there is growing demand for high-bandwidth, low-latency interconnects.

The future of InfiniBand technology may see more innovations, such as faster data transfer rates, lower latency, and new applications in areas such as machine learning and autonomous driving. InfiniBand will likely remain a critical component of modern computing infrastructure as the technology evolves.


Q: What is InfiniBand?
A: InfiniBand is a high-speed interconnect technology used for data transfer in computer networking. It provides high-bandwidth, low-latency communication between servers, storage devices, and other network resources.

Q: How does InfiniBand compare with Ethernet?
A: InfiniBand typically offers lower latency than standard Ethernet and has historically offered higher data rates. It was designed from the start for RDMA (Remote Direct Memory Access), which enables direct memory access between machines without involving the remote CPU.

Q: What is RDMA?
A: RDMA stands for Remote Direct Memory Access. It allows data to be transferred directly between the memory of two computers without involving either CPU in the data path. InfiniBand supports RDMA natively, enabling fast and efficient data transfers.

Q: What is HDR InfiniBand?
A: HDR (High Data Rate) InfiniBand is a recent generation of InfiniBand technology that supports data transfer rates of up to 200 gigabits per second, higher than previous generations. Its successor, NDR, doubles this to 400 Gbps.

Q: What is the IBTA?
A: IBTA stands for InfiniBand Trade Association, an industry consortium that develops and promotes InfiniBand technology. The consortium works with member companies to define and maintain the InfiniBand specifications.

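Q: What are SDR, DDR, and QDR?
A: SDR (Single Data Rate), DDR (Double Data Rate), and QDR (Quadruple Data Rate) are successive generations of InfiniBand technology, with maximum 4x link rates of 10, 20, and 40 Gbps, respectively.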

Q: What protocol does InfiniBand use for data transfer?
A: InfiniBand supports both send/receive messaging and RDMA read/write operations. RDMA transfers data directly between the memory of two machines without involving the remote CPU, resulting in low latency and high performance.

Q: What is RoCE?
A: RoCE (RDMA over Converged Ethernet) is a protocol that enables RDMA over Ethernet networks by carrying the InfiniBand transport protocol inside Ethernet frames (RoCE v2 encapsulates it in UDP/IP). It allows high-speed, low-latency communication over Ethernet connections.

Q: What is a Queue Pair (QP) in InfiniBand?
A: In InfiniBand, a Queue Pair (QP) is a communication endpoint consisting of a send queue and a receive queue. It is the basic unit for data transfer and processing in the InfiniBand fabric.
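The send/receive model behind a Queue Pair can be sketched in plain Python. This is a hypothetical toy model, not the verbs API (in libibverbs, the corresponding real calls include `ibv_post_send`, `ibv_post_recv`, and `ibv_poll_cq`): the application posts receive buffers in advance, each incoming send consumes the next posted receive, and both sides learn about finished work by polling a completion queue.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Completion:
    wr_id: int      # work-request ID chosen by the application
    opcode: str     # "SEND" or "RECV"
    byte_len: int


class ToyQueuePair:
    """A send queue, a receive queue, and (for simplicity) one completion queue."""

    def __init__(self):
        self.send_queue = deque()
        self.recv_queue = deque()
        self.completion_queue = deque()
        self.peer = None

    def connect(self, peer: "ToyQueuePair") -> None:
        self.peer, peer.peer = peer, self

    def post_recv(self, wr_id: int, buffer: bytearray) -> None:
        self.recv_queue.append((wr_id, buffer))

    def post_send(self, wr_id: int, data: bytes) -> None:
        self.send_queue.append((wr_id, bytes(data)))

    def process_sends(self) -> None:
        """Deliver queued sends, each consuming the peer's next posted receive."""
        while self.send_queue:
            wr_id, data = self.send_queue.popleft()
            if not self.peer.recv_queue:
                # Real hardware would signal "receiver not ready" and retry.
                raise RuntimeError("no receive buffer posted on the peer")
            recv_id, buffer = self.peer.recv_queue.popleft()
            if len(data) > len(buffer):
                raise RuntimeError("posted receive buffer is too small")
            buffer[:len(data)] = data
            self.peer.completion_queue.append(Completion(recv_id, "RECV", len(data)))
            self.completion_queue.append(Completion(wr_id, "SEND", len(data)))

    def poll(self):
        """Return the oldest completion, or None if there is none."""
        return self.completion_queue.popleft() if self.completion_queue else None


a, b = ToyQueuePair(), ToyQueuePair()
a.connect(b)
buf = bytearray(16)
b.post_recv(wr_id=1, buffer=buf)
a.post_send(wr_id=7, data=b"ping")
a.process_sends()
print(a.poll())        # Completion(wr_id=7, opcode='SEND', byte_len=4)
print(b.poll())        # Completion(wr_id=1, opcode='RECV', byte_len=4)
print(bytes(buf[:4]))  # b'ping'
```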

Q: What are some examples of InfiniBand products?
A: Examples include host channel adapters (HCAs), switches, cables, and software. These products are used to build and deploy InfiniBand networks.