The Mellanox ConnectX-5, the fifth generation of the ConnectX family of network adapters, has become a mainstream choice for modern data centers, cloud environments, and enterprises. With high throughput and strong scalability, NVIDIA Mellanox ConnectX-5 adapters have become essential in virtually any setting with strict low-latency and efficient data-processing requirements. This article explains the many functions of the ConnectX-5 so that IT professionals and decision-makers can use it effectively. It covers what the technology offers and how to make the best use of it, whether the goal is scaling systems, improving performance, or building cloud infrastructure for compute-intensive applications.

For modern data centers that need to scale while maintaining performance, Mellanox ConnectX-5 adapters offer a range of advanced capabilities:

With these features combined, the Mellanox ConnectX-5 handles cloud, AI, and HPC workloads efficiently and at scale while providing a secure, high-throughput environment.

By integrating the Mellanox ConnectX-5, data centers can achieve high performance, strong security, and cost-effective scaling, all of which are essential in today's technology ecosystem.

When the Mellanox ConnectX-5 is compared with other network adapters, several advantages stand out:

To sum up, the Mellanox ConnectX-5 delivers top performance, increases productivity, and provides better security, making it a strong option for modern high-performance networking requirements.

With support for speeds of up to 100 Gb/s, the ConnectX-5 delivers low-latency Ethernet connectivity for organizations with high-bandwidth workloads. Advanced features such as RDMA over Converged Ethernet (RoCE) transfer data efficiently, significantly improving throughput in demanding applications like high-performance computing and virtualization. In addition, the Multi-Host feature lets several servers share a single adapter, reducing infrastructure costs while optimizing connectivity across those systems. For these reasons, the ConnectX-5 EN PCIe network adapter is a strong backbone for next-generation networks.

PCIe 3.0 greatly enhances ConnectX-5 performance by providing a high-speed interface between the adapter and the host. PCIe 3.0 offers a theoretical bandwidth of about 985 MB/s per lane, enabling fast, reliable, and efficient communication between host systems and the network adapter. This makes demanding workloads such as real-time analytics and virtualization easier to support. Because PCIe is backward compatible, the ConnectX-5 can also be installed in a wide range of system architectures, giving deployments added flexibility.
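The per-lane figure follows from PCIe 3.0's 8 GT/s signaling rate and its 128b/130b line encoding. The short Python sketch below reproduces it and, as an illustration, checks how many 100GbE ports a PCIe 3.0 x16 slot can feed at line rate. It deliberately ignores packet (TLP) and flow-control overhead, so real-world throughput is somewhat lower than these numbers.

```python
def pcie_lane_gbps(transfer_rate_gt_s: float, payload_bits: int, total_bits: int) -> float:
    """Usable bit rate of one lane in Gb/s after line encoding (no TLP/DLLP overhead)."""
    return transfer_rate_gt_s * payload_bits / total_bits


def pcie3_link_gbps(lanes: int) -> float:
    """PCIe 3.0: 8 GT/s per lane with 128b/130b encoding."""
    return pcie_lane_gbps(8.0, 128, 130) * lanes


def fits(link_gbps: float, ports: int, port_speed_gbps: float) -> bool:
    """True if the link can carry every port at line rate in one direction."""
    return link_gbps >= ports * port_speed_gbps


if __name__ == "__main__":
    lane = pcie3_link_gbps(1)
    x16 = pcie3_link_gbps(16)
    print(f"PCIe 3.0 per lane: {lane:.3f} Gb/s = {lane * 1000 / 8:.1f} MB/s")
    print(f"PCIe 3.0 x16:      {x16:.1f} Gb/s")
    print("1 x 100GbE at line rate:", fits(x16, 1, 100))
    print("2 x 100GbE at line rate:", fits(x16, 2, 100))
```

A PCIe 3.0 x16 link works out to roughly 126 Gb/s. That is enough for one 100GbE port at full rate, but not for two ports running flat out in the same direction at once.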

Low latency and high bandwidth are key to achieving a high degree of connectivity in contemporary networks. Low latency ensures that data is delivered quickly, which is essential for applications such as video calling, online gaming, and financial trading systems. High bandwidth, in turn, provides the capacity for the larger data flows these applications need, so that no single process becomes a bottleneck and many processes can run in parallel. Together, these attributes reduce delays, improve the user experience, and support extreme workloads, especially in data-intensive settings such as cloud computing and virtualized infrastructure.
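The way the two attributes interact can be shown with a simple first-order model: transfer time equals a fixed latency plus the time needed to serialize the data onto the link. The sketch below assumes a hypothetical 2 µs end-to-end latency, which is an illustrative value and not a ConnectX-5 specification, on a 100 Gb/s link. Under that model, latency dominates small messages and bandwidth dominates large ones.

```python
def transfer_time_us(size_bytes: int, latency_us: float, bandwidth_gbps: float) -> float:
    """First-order model: fixed one-way latency plus serialization time."""
    bits_per_us = bandwidth_gbps * 1e3  # 1 Gb/s = 1,000 bits per microsecond
    return latency_us + size_bytes * 8 / bits_per_us


if __name__ == "__main__":
    LATENCY_US = 2.0  # hypothetical latency, not a ConnectX-5 specification
    for size in (64, 4096, 1_000_000, 1_000_000_000):
        total = transfer_time_us(size, LATENCY_US, 100.0)
        share = LATENCY_US / total * 100
        print(f"{size:>13,} bytes: {total:12.3f} us ({share:5.1f}% of it is latency)")
```

This model leaves out queuing, congestion, and protocol overhead. It is only meant to show why latency-sensitive applications and bulk-transfer applications stress different properties of the network.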

Before I install the Mellanox ConnectX-5, here is what I verify on my system. First, I check whether the server or workstation has an available PCIe slot that fits the ConnectX-5 adapter, ideally PCIe Gen3 or later for full performance. Second, I check that the operating system is supported by the Mellanox driver; mainstream Linux distributions, Windows, and VMware ESXi are supported. In addition, I confirm that the power supply (PSU) can handle the card's power consumption. Lastly, I recommend downloading the firmware and drivers from Mellanox's support site before installation to ensure compatibility and performance.
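On Linux, the ConnectX-5 is driven by the upstream `mlx5_core` kernel module, which also supports ConnectX-4 and later adapters. A quick pre-install check is to see whether that module is available for the running kernel. The following is a minimal sketch. It assumes a Linux host with the `modinfo` utility from kmod, and it checks only for the inbox driver. It does not check firmware versions or a separately installed driver package.

```python
import platform
import subprocess


def mlx5_driver_available(module: str = "mlx5_core") -> bool:
    """Return True if `modinfo` can find the given kernel module on this host."""
    if platform.system() != "Linux":
        return False  # this check only applies to Linux hosts
    try:
        result = subprocess.run(
            ["modinfo", module],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            check=False,
        )
    except FileNotFoundError:
        return False  # modinfo (kmod) is not installed
    return result.returncode == 0  # modinfo exits non-zero if the module is not found


if __name__ == "__main__":
    print("mlx5_core available:", mlx5_driver_available())
```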

Check whether the Dell Mellanox ConnectX-5 card has been recognized and installed by the operating system. On Linux, you can use commands such as `lspci` or `dmesg`. On Windows, check Device Manager. Also make sure that basic network diagnostics, such as pinging another host, succeed. Finally, apply any tuning changes that improve the setup's benchmark results.
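On Linux, the same information that `lspci -d 15b3:` reports can be read directly from sysfs, where `0x15b3` is the Mellanox PCI vendor ID. The sketch below lists those devices along with their negotiated and maximum PCIe link width and speed, so you can spot a card that trained at fewer lanes than it supports. It assumes the standard `/sys/bus/pci/devices` layout. The `is_downtrained` helper is an illustrative name, not part of any Mellanox tool.

```python
from pathlib import Path

MELLANOX_VENDOR_ID = "0x15b3"  # PCI vendor ID used by Mellanox (now NVIDIA networking)


def read_attr(device: Path, name: str) -> str:
    """Read one sysfs attribute, returning '' if it is missing or unreadable."""
    try:
        return (device / name).read_text().strip()
    except OSError:
        return ""


def find_mellanox_devices(sysfs_root: str = "/sys/bus/pci/devices") -> list[dict]:
    """Return PCI devices whose vendor ID matches Mellanox, with link details."""
    root = Path(sysfs_root)
    if not root.is_dir():
        return []
    devices = []
    for device in sorted(root.iterdir()):
        if read_attr(device, "vendor").lower() != MELLANOX_VENDOR_ID:
            continue
        devices.append({
            "address": device.name,
            "device_id": read_attr(device, "device"),
            "link_speed": read_attr(device, "current_link_speed"),
            "link_width": read_attr(device, "current_link_width"),
            "max_link_speed": read_attr(device, "max_link_speed"),
            "max_link_width": read_attr(device, "max_link_width"),
        })
    return devices


def is_downtrained(dev: dict) -> bool:
    """True if the link negotiated fewer lanes than the device supports."""
    try:
        return int(dev["link_width"]) < int(dev["max_link_width"])
    except ValueError:
        return False  # attribute missing or not a number


if __name__ == "__main__":
    found = find_mellanox_devices()
    if not found:
        print("No Mellanox (vendor 0x15b3) PCI devices found")
    for dev in found:
        warning = "  <- fewer lanes than supported" if is_downtrained(dev) else ""
        print(f'{dev["address"]} device={dev["device_id"]} speed={dev["link_speed"]} '
              f'width=x{dev["link_width"]}/x{dev["max_link_width"]}{warning}')
```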

Several common problems can arise when installing Mellanox network cards, affecting functionality, performance, or both. Here are some suggested methods for troubleshooting them:

If these steps do not solve the issue, check the logs kept by your operating system or networking tools. In most cases, the output of the `dmesg` command, the Windows Event Viewer, or the installation logs contains error codes that can pinpoint the problem. If problems persist after all of these steps, refer to the Mellanox documentation or contact Mellanox customer support for assistance.
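On Linux, kernel messages from the ConnectX-5 driver are prefixed with `mlx5_core`, so filtering `dmesg` output for those lines is a quick first step. The sketch below keeps only mlx5 lines that contain error-like keywords. The keyword list is a hypothetical starting point, not an official list of Mellanox error strings, so adjust it to the messages you actually see. Reading `dmesg` may require root on some distributions.

```python
import re
import subprocess

# Hypothetical keyword list; tune it to the messages on your own systems.
ERROR_PATTERN = re.compile(r"\b(error|fail(ed|ure)?|timeout|unable)\b", re.IGNORECASE)


def mlx5_problem_lines(log_text: str) -> list[str]:
    """Return log lines that mention the mlx5 driver and look like errors."""
    hits = []
    for line in log_text.splitlines():
        if "mlx5" in line and ERROR_PATTERN.search(line):
            hits.append(line.strip())
    return hits


if __name__ == "__main__":
    try:
        text = subprocess.run(["dmesg"], capture_output=True, text=True, check=True).stdout
    except (FileNotFoundError, subprocess.CalledProcessError):
        text = ""  # dmesg missing or not permitted for this user
    for line in mlx5_problem_lines(text):
        print(line)
```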

Mellanox ConnectX-5 adapters deliver the scalability and flexibility that large-scale data center environments expect. They provide high-throughput networking at up to 100 Gb/s, meeting the demands of growing modern applications. Advanced features such as SR-IOV and RDMA also optimize resource utilization and provide low-latency communication in both virtualized and non-virtualized environments. Support for both kinds of environment helps the adapters integrate seamlessly into existing architectures. This adaptability, combined with low latency, makes the ConnectX-5 an efficient solution for expanding networks.
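On Linux, SR-IOV virtual functions (VFs) are typically created through the standard kernel sysfs attributes `sriov_totalvfs` and `sriov_numvfs` under the network interface's `device` directory. The sketch below enables a requested number of VFs and validates the count against the device limit. It assumes root privileges and that SR-IOV is already enabled in the system BIOS and the adapter firmware. The interface name is supplied by you, and `enable_vfs` is an illustrative helper, not a Mellanox utility.

```python
import sys
from pathlib import Path


def enable_vfs(interface: str, num_vfs: int, sysfs_net: str = "/sys/class/net") -> int:
    """Set the number of SR-IOV VFs on `interface` and return the resulting count."""
    device = Path(sysfs_net) / interface / "device"
    total = int((device / "sriov_totalvfs").read_text())
    if not 0 <= num_vfs <= total:
        raise ValueError(f"{interface} supports at most {total} VFs, requested {num_vfs}")
    numvfs = device / "sriov_numvfs"
    current = int(numvfs.read_text())
    if current == num_vfs:
        return current
    if current != 0:
        # The kernel rejects changing a non-zero VF count directly; reset to 0 first.
        numvfs.write_text("0")
    numvfs.write_text(str(num_vfs))
    return int(numvfs.read_text())


if __name__ == "__main__":
    # Usage: sudo python3 enable_vfs.py <interface> <num_vfs>
    print(enable_vfs(sys.argv[1], int(sys.argv[2])))
```

Each VF then appears as its own PCI function that can be passed through to a virtual machine.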

Mellanox ConnectX-5 adapters integrate into existing network infrastructures with minimal disruption and broad compatibility. Support for Ethernet, TCP/IP, and, on VPI models, InfiniBand reduces the complexity of deploying them across existing architectures. The adapters also ease transitions by supporting SR-IOV virtualization without major changes to existing setups. In addition, broad driver support for the ConnectX-5 helps ensure interoperability across the software stack, lightweight integration, and reduced downtime.

Mellanox ConnectX-5 adapters are built with performance, scalability, and price in mind, offering a solid return on investment. Their energy-efficient design lowers operating costs by reducing power consumption while still delivering high throughput. Their durable construction also makes them reliable over a long service life, reducing how often they need to be replaced. Because the adapters integrate with a wide variety of systems and applications, businesses using the ConnectX-5 EN PCIe network adapter can avoid costly infrastructure modifications. Finally, the ConnectX-5 supports modern network environments at an economical price point.

Mellanox ConnectX-5 EN (Ethernet) adapters are designed specifically for Ethernet-based networks. Their low latency and very high throughput make them well suited to scaling Ethernet deployments.

In contrast, Mellanox ConnectX-5 VPI (Virtual Protocol Interconnect) adapters can communicate over both Ethernet and InfiniBand, so they can be used in hybrid networking environments. This flexibility benefits high-performance computing (HPC) and other data-intensive applications. The VPI version is better suited to environments that rely heavily on InfiniBand's low latency and high bandwidth.
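On VPI adapters, the protocol for each port is a firmware setting. With NVIDIA's firmware tools (MFT) installed, it is commonly changed with `mlxconfig` using the `LINK_TYPE_P1` and `LINK_TYPE_P2` parameters, where 1 selects InfiniBand and 2 selects Ethernet. The Python sketch below only builds that command so you can review it before running it. The device path in the usage example is hypothetical; use the one that `mst status` reports on your system. Changes take effect only after a reboot or firmware reset, and EN-only cards do not offer InfiniBand.

```python
import shlex

# Values for mlxconfig's LINK_TYPE_P<n> parameter on VPI adapters.
LINK_TYPES = {"ib": 1, "eth": 2}


def link_type_command(mst_device: str, port_types: list[str]) -> list[str]:
    """Build an mlxconfig command that sets each port's protocol, in port order."""
    if not port_types:
        raise ValueError("at least one port type is required")
    settings = []
    for port, kind in enumerate(port_types, start=1):
        if kind not in LINK_TYPES:
            raise ValueError(f"unknown port type {kind!r}; expected one of {sorted(LINK_TYPES)}")
        settings.append(f"LINK_TYPE_P{port}={LINK_TYPES[kind]}")
    return ["mlxconfig", "-d", mst_device, "set", *settings]


if __name__ == "__main__":
    # Hypothetical device path: port 1 on InfiniBand, port 2 on Ethernet.
    print(shlex.join(link_type_command("/dev/mst/mt4119_pciconf0", ["ib", "eth"])))
```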

The primary difference lies in the supported protocols and intended applications. EN models fit well within Ethernet infrastructures, while VPI models are best suited to mixed infrastructures or those that rely heavily on InfiniBand.

There are two versions to choose from: EN and VPI. Choose the EN version if your network infrastructure is purely Ethernet-based, because it is optimized for those conditions. Choose the VPI version if your organization needs to work with both Ethernet and InfiniBand. The VPI version is better suited to mixed systems and high-performance computing environments where low latency and high bandwidth are baseline requirements. Understanding your network's demands and preferred protocols will help you pick the right adapter.

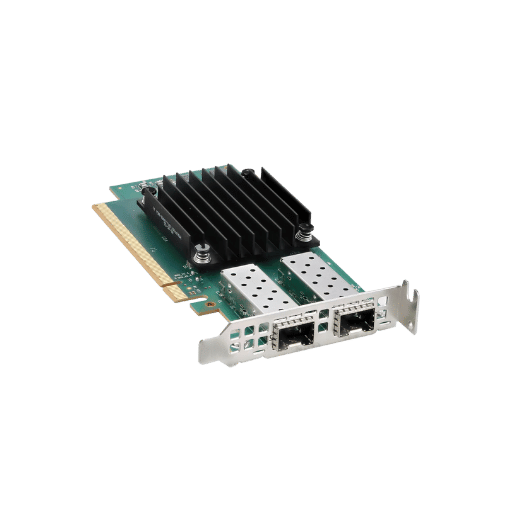

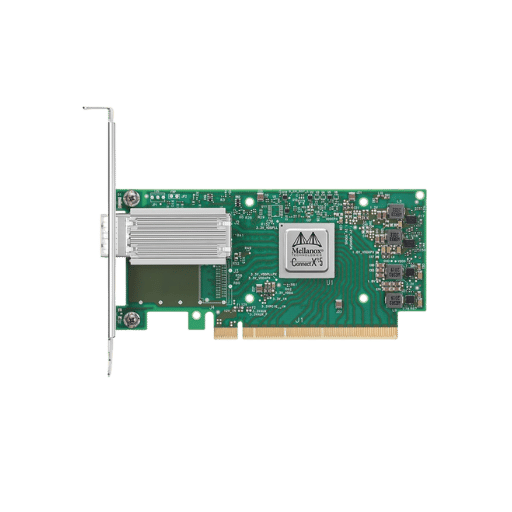

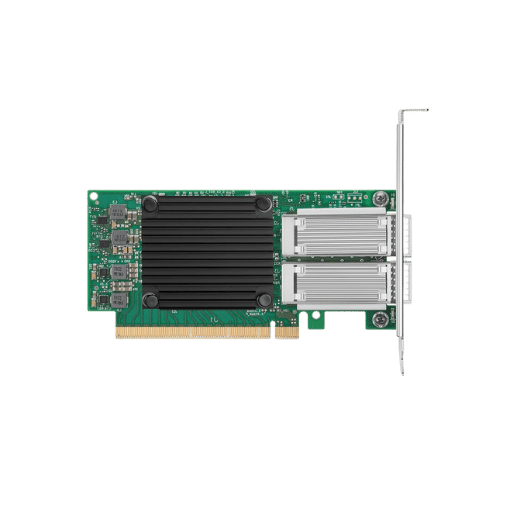

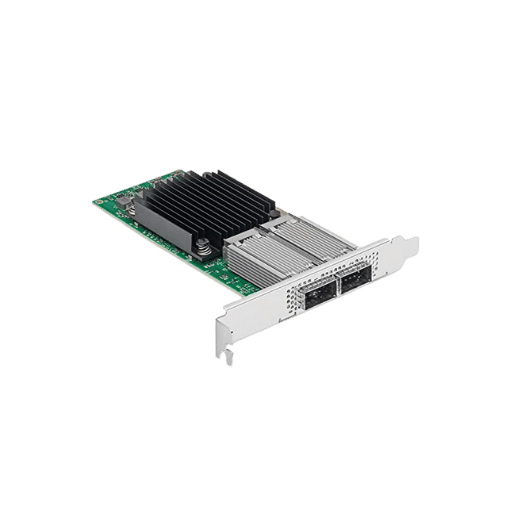

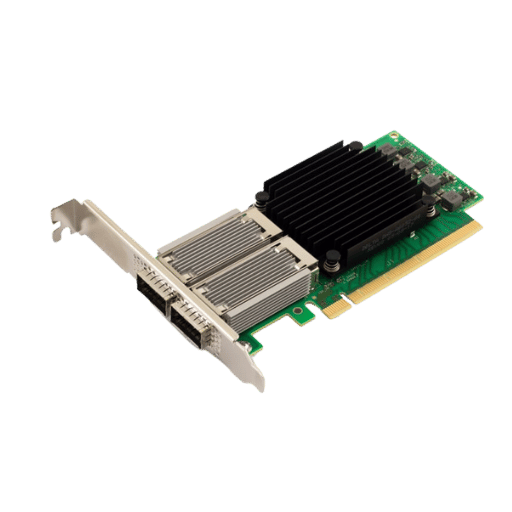

A: The Mellanox ConnectX-5 is a high-performance Ethernet network adapter card built for data centers and enterprises. Its features include support for 100Gb Ethernet, a PCIe 3.0 x16 interface, and advanced offload capabilities such as RDMA and NVMe over Fabrics. It comes in single-port and dual-port configurations, giving flexibility in network architecture.

A: The ConnectX-5 can operate at various speeds, including 10GbE, 25GbE, 40GbE, 50GbE, and 100GbE. This range lets it fit different network infrastructures and makes upgrades easy as demand grows.

A: The ConnectX-5 is offered in various configurations with different connector types. The most common are SFP28 for 25GbE and QSFP28 for 100GbE, both of which are used on ConnectX-5 EN PCIe network adapters. These connectors accept a range of compatible cables and transceivers, giving flexibility in network design.

A: Several features make the Mellanox ConnectX-5 excellent for networking. One example is its NVMe over Fabrics offloads, which reduce latency and CPU usage in storage networking. It also provides hardware offloads for RDMA, TCP, and UDP processing to further improve networking performance.

A: Yes. The Mellanox ConnectX-5 is available for Dell servers and other products. Dell supplies Mellanox ConnectX-5 network cards as part of its server product line, so they are supported on Dell systems and infrastructure.

A: The Mellanox ConnectX-5 Ex is an enhanced variant of the standard ConnectX-5. Both offer high-performance networking. The ConnectX-5 Ex adds support for a PCIe 4.0 host interface, which provides enough host bandwidth to drive two 100GbE ports at full rate.

A: Definitely. The Mellanox ConnectX-5 fits well with data analytics applications. Its high bandwidth (up to 100 Gb/s) and low latency make it well suited to massive data sets and real-time analytics workloads. The adapter's RDMA support also boosts performance in distributed data analytics environments.

A: One common model number for a dual-port 100GbE Mellanox ConnectX-5 adapter is MCX516A-CCAT. However, model numbers vary with the exact configuration and vendor. For example, Dell may use its own part numbers for the NVIDIA Mellanox ConnectX-5 EN PCI Express network adapter in its server product line.