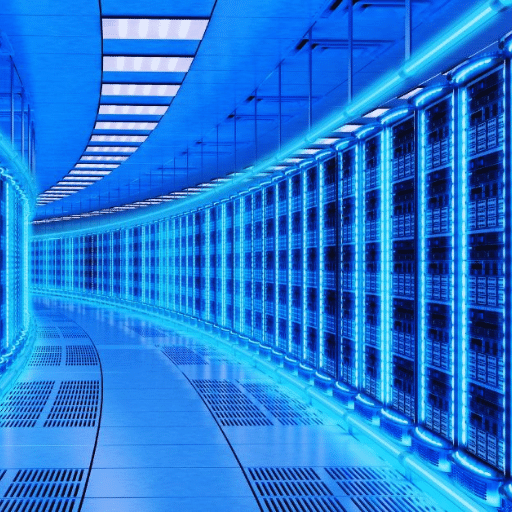

As digital transformation accelerates, hyperscale data centers have become the core of the Internet, supporting global industries that require large amounts of computing power. To handle tremendous volumes of information and guarantee continuous service delivery, they are built to be efficient, flexible, and high-performing. This piece explains the infrastructure and operating principles behind these massive facilities. By looking at areas such as networking, cooling systems, and power distribution, we give an overall picture of how they work while remaining robust and efficient. Whether you design data centers for a living or simply love technology, this guide will walk you through the inner workings of hyperscale data centers.

A hyperscale data center is a large facility designed to grow its computing resources in proportion to demand. It scales horizontally, adding servers quickly without degrading performance. Key features include extensive automation, high-density computing and storage arrays, robust networking infrastructure, and sophisticated cooling systems to handle the heat generated. The architecture is usually modular and standardized, which simplifies maintenance and upgrades. What sets hyperscale facilities apart from other data centers is their use of distributed computing models together with advanced virtualization, which lets them support millions of concurrent users and handle petabytes of information.

Understanding how hyperscale data centers differ from traditional ones is an essential step toward understanding the benefits and uses of each. Here is a brief comparison based on the main features and technical parameters:

Scalability:

Automation:

Compute Density:

Networking:

Cooling Systems:

Energy Efficiency:

Virtualization and Distributed Computing:

Understanding these distinctions helps stakeholders see what sets hyperscale data centers apart from other facilities in capability and efficiency, so they can make informed decisions that match their operational and business requirements.

China Telecom Inner Mongolia Information Park

Switch SuperNAP Campus

Digital Realty Lakeside Technology Center

To guarantee the best performance and reliability of a hyperscale data center, you must consider several important factors. Among them are:

Together, these factors make hyperscale data centers robust enough to meet the requirements of modern digital infrastructure.

Keeping hyperscale data centers efficient depends on how they handle cooling and power usage. Efficient cooling methods and careful power management make operations more effective, reduce downtime, and lessen environmental impact.

Cooling Systems

To handle the high temperatures produced by many servers and other hardware components in data centers at scale, advanced sustainable cooling technology is required. These are some common methods for cooling:

Power Usage

Because these environments consume a great deal of electricity, their energy requirements must be managed carefully. The following should be taken into account:

Taking these technical parameters into account improves cooling efficiency and optimizes energy use, making hyperscale data centers more sustainable while improving their overall performance.

Hyperscale data centers need to be scalable and flexible to meet the expanding requirements of present-day enterprises.

With scalable, flexible designs that adapt to fluctuating workloads and support advanced technologies, hyperscale data centers can maintain reliable performance.

Scalability is the hallmark of hyperscale data centers. Unlike enterprise data centers, they are modular by nature and can be expanded over time without disruption. Their massive server clusters work together under one management system to deliver very high processing capacity. Most enterprise data centers, by contrast, have fixed capacity limits, so growing them may require major renovations or new equipment, both of which usually cost more and cause downtime. Hyperscale facilities also rely on virtualization and software-defined infrastructure, which share resources more effectively, sustain performance, and make changes easier. Virtualization lets each physical machine run multiple workloads at once, so fewer machines do more work, while software-defined infrastructure lets operators reallocate resources quickly without needing specialized hardware everywhere.

Hyperscale data centers are built to store and manage very large volumes of information using distributed storage technologies. They do this with systems such as Ceph and the Hadoop Distributed File System (HDFS), which grow their storage clusters horizontally by adding nodes whenever necessary. Here are some technical parameters:

Hyperscale data centers also offer powerful computational capabilities, combining parallel processing with computing frameworks such as TensorFlow and Apache Spark. These frameworks enable quick analysis of large datasets. Key technical parameters include:

By integrating these state-of-the-art storage and processing technologies, hyperscale data centers deliver performance, scalability, and reliability that far surpass what traditional enterprise data centers can offer.
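To make the idea of growing a storage cluster horizontally more concrete, here is a minimal sketch of consistent hashing, a generic placement technique. It is an assumption chosen for illustration only: Ceph uses its own CRUSH algorithm and HDFS relies on NameNode-managed block placement, so this is not how either system is implemented. The class name `HashRing`, the node names, and the `vnodes` parameter are all hypothetical.

```python
import bisect
import hashlib


class HashRing:
    """Toy consistent-hash ring (illustrative only, not Ceph or HDFS)."""

    def __init__(self, nodes=(), vnodes=100):
        self.vnodes = vnodes  # virtual points per node, smooths the distribution
        self._ring = []       # sorted list of (hash, node) points on the ring
        for node in nodes:
            self.add_node(node)

    @staticmethod
    def _hash(key: str) -> int:
        # Map any string to a large integer position on the ring.
        return int(hashlib.sha256(key.encode("utf-8")).hexdigest(), 16)

    def add_node(self, node: str) -> None:
        # Each node owns several points so load spreads evenly.
        for i in range(self.vnodes):
            bisect.insort(self._ring, (self._hash(f"{node}#{i}"), node))

    def node_for(self, key: str) -> str:
        if not self._ring:
            raise LookupError("ring has no nodes")
        # First point clockwise from the key's hash, wrapping around at the end.
        idx = bisect.bisect_right(self._ring, (self._hash(key), ""))
        return self._ring[idx % len(self._ring)][1]


# Hypothetical cluster of four storage nodes and 1,000 object keys.
ring = HashRing(["node-1", "node-2", "node-3", "node-4"])
keys = [f"object-{i}" for i in range(1000)]
before = {k: ring.node_for(k) for k in keys}

ring.add_node("node-5")  # scale out by one node
after = {k: ring.node_for(k) for k in keys}

moved = [k for k in keys if before[k] != after[k]]
print(f"{len(moved)} of {len(keys)} keys moved to the new node")
```

The point of the design is that adding a node relocates only the keys the new node takes over, rather than reshuffling the whole cluster, which is the property that makes adding nodes "whenever necessary" practical.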

Hyperscale data centers may be expensive at first, but they provide long-term cost savings. These data centers lower storage and processing costs per unit as a result of their large-scale infrastructure and operations. The main advantages are:

In addition, hyperscale data centers help businesses reduce downtime and improve agility, which raises ROI by strengthening continuity planning and accelerating time-to-market for new services.

Large-scale data centers save money through economies of scale, achieving significant reductions in both capital and operational expenditure. They buy hardware in bulk and optimize how it is used, which lowers the overall cost per terabyte of storage or per watt of computing power. Advanced energy-saving systems, such as modern cooling methods, also reduce operational costs by keeping electricity use to a minimum. Because these centers can vary resources with demand, their capacity stays well utilized. As a result, total cost of ownership is greatly reduced, which makes them financially attractive for processing and storing massive amounts of information.
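The per-unit cost argument above can be illustrated with simple arithmetic. The sketch below is only a rough model, and every figure in it is invented for illustration rather than taken from a real facility; the function name `cost_per_tb` and its parameters are likewise hypothetical.

```python
def cost_per_tb(capex: float, annual_opex: float, years: int, capacity_tb: float) -> float:
    """Total cost of ownership per terabyte over a period (simplified model)."""
    if capacity_tb <= 0:
        raise ValueError("capacity_tb must be positive")
    total_cost = capex + annual_opex * years
    return total_cost / capacity_tb


# Made-up numbers, chosen only to show the shape of the comparison.
small_site = cost_per_tb(capex=500_000, annual_opex=100_000, years=5, capacity_tb=1_000)
large_site = cost_per_tb(capex=20_000_000, annual_opex=4_000_000, years=5, capacity_tb=100_000)

print(f"small site: {small_site:.0f} per TB")
print(f"large site: {large_site:.0f} per TB")
```

In this toy comparison the larger site spends far more in total but less per terabyte, which is the economies-of-scale effect the paragraph describes. A real analysis would also account for utilization, hardware refresh cycles, and financing.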

Hyperscale data centers are equipped with strong infrastructure for handling immense amounts of data efficiently. This capability is essential for big data applications and data analytics, because it lets organizations process, evaluate, and draw conclusions from large volumes of information. Below are the technical capabilities that enable these functions:

These parameters not only help organizations handle big data better but also make it possible for them to discover insights that drive operational optimization or even lead to new innovation opportunities.

Cloud computing services and hyperscale data centers are closely intertwined, giving organizations flexible and scalable infrastructure. Major providers such as AWS, Azure, and Google Cloud Platform demonstrate the seamless resource allocation, dynamic scaling, and cost-effective operations that this makes possible. These are some of the key aspects of this integration:

Integration with cloud services extends the capabilities of hyperscale data centers, giving enterprises a robust environment for running large applications and workloads while supporting growth and innovation across industries.

The growth of Microsoft and other hyperscale players can be attributed to several factors. The first is increasing demand for cloud services and data storage, which calls for the expansion of hyperscale infrastructure. Another is Azure, Microsoft’s cloud platform, which continuously evolves to meet the complex needs of present-day businesses, such as artificial intelligence (AI), machine learning, and big data analytics. Investments in strategic locations around the world let Microsoft deliver fast services at affordable prices by placing capacity close to customers, while industry collaborations improve its market standing and advance its managed service capabilities. Taken together, a strong ecosystem and steady technological advances help it gain share in the large-scale data center space, sometimes before competitors catch up.

Efficiency and sustainability increasingly shape the trends in power and cooling for hyperscale data centers. One key trend is the use of liquid cooling systems. These systems use a liquid coolant rather than air to carry heat away, which makes them better at removing it. They also allow denser server configurations, making them well suited to High-Performance Computing (HPC) and Artificial Intelligence (AI) workloads that produce a lot of heat.

Another trend is powering data centers with renewable energy. Solar panels, wind turbines, or hydroelectric generators can be integrated into a data center's power supply to reduce both its carbon footprint and its electricity costs. Power Usage Effectiveness (PUE), the ratio of total facility energy to the energy consumed by IT equipment, is also an increasingly important measure of energy efficiency. A PUE of 1 represents total efficiency; most large-scale facilities, however, have ratios of around 1.2 to 1.4.
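As a worked example of the PUE figure discussed above, the following sketch computes it from two energy readings. PUE is total facility energy divided by the energy used by IT equipment; the function names and the sample readings are assumptions made up for illustration.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("it_equipment_kwh must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be less than IT energy")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Energy spent on cooling, power conversion, lighting, and other non-IT loads."""
    return total_facility_kwh - it_equipment_kwh


# Hypothetical readings for one hour of operation.
total = 1300.0  # kWh drawn by the whole facility
it = 1000.0     # kWh consumed by servers, storage, and network gear

print(f"PUE = {pue(total, it):.2f}")
print(f"Overhead = {overhead_kwh(total, it):.0f} kWh")
```

With these sample numbers, 300 kWh out of every 1,300 kWh goes to overhead rather than computing, which gives a PUE within the 1.2 to 1.4 range mentioned above.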

Lastly, advanced techniques such as immersion cooling, which submerges servers in thermally conductive dielectric liquids, are gaining popularity because they remove waste heat very efficiently and reduce reliance on traditional air conditioning. These methods still depend on careful monitoring: neglecting factors such as thermal resistance, heat flux, or coolant flow rate can undermine them before they are fully operational.
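To show why coolant flow rate is worth monitoring, here is a small sketch based on the standard steady-state heat balance Q = ṁ · c_p · ΔT, which relates the heat removed to the coolant's mass flow rate, specific heat capacity, and temperature rise. The function name and the sample values are assumptions; the specific heat used is that of water (about 4186 J/(kg·K)), and dielectric immersion fluids have different values that should come from the manufacturer's data sheet.

```python
WATER_SPECIFIC_HEAT = 4186.0  # J/(kg*K), approximate value for liquid water


def required_mass_flow(heat_load_w: float, delta_t_k: float,
                       specific_heat: float = WATER_SPECIFIC_HEAT) -> float:
    """Coolant mass flow (kg/s) needed to carry away heat_load_w watts
    with a coolant temperature rise of delta_t_k kelvin."""
    if delta_t_k <= 0 or specific_heat <= 0:
        raise ValueError("delta_t_k and specific_heat must be positive")
    return heat_load_w / (specific_heat * delta_t_k)


# Hypothetical example: a 100 kW row of racks, 10 K allowed temperature rise.
flow = required_mass_flow(heat_load_w=100_000.0, delta_t_k=10.0)
print(f"Required flow: {flow:.2f} kg/s")
```

If the measured flow drops below the required value, the coolant temperature rise must grow to remove the same heat, which is why flow rate belongs on the list of monitored parameters.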

Technical Parameters:

In the data center market, hyperscale companies such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) are important drivers of innovation and efficiency. They use economies of scale to provide powerful cloud computing services that scale up or down as needed while remaining affordable. As the biggest player in the field, AWS continues to improve its infrastructure with custom-designed chips and AI-controlled management tools that save power. Microsoft Azure is leading the way in renewable integration; it has pledged to use nothing but renewable energy within four years and is testing underwater data centers to see whether they can be cooled more efficiently. Google Cloud excels at machine learning and uses AI to optimize its cooling systems, a move that has given it PUE values below what most other providers achieve. These three organizations set new bars for sustainability, efficiency, and technological advancement in data center services, shaping where the industry is headed next.

A: A hyperscale data center is a very large facility that provides massive data processing and storage capacity. Such centers are typically used by giants such as Google, Amazon, and Microsoft, which handle heavy workloads on a global scale.

A: Hyperscale data centers are huge, often spanning hundreds of thousands of square feet. That space accommodates the large numbers of servers and other infrastructure required for demanding data processing.

A: Traditional data centers may adopt an “edge” or “colocation” model, but their design does not let them scale as quickly as hyperscale facilities. Hyperscale data centers, by contrast, are designed to scale out with many thousands of servers to keep up with rising demand.

A: These include advanced network infrastructure, liquid cooling systems, and strong security measures such as firewalls, all of which ensure the best possible performance and reliability when processing high volumes of information.

A: Large cloud providers such as Amazon Web Services (AWS), Google Cloud Platform, and Microsoft Azure. Many other companies also operate facilities of this type worldwide, mainly those focused on delivering a wide range of cloud services and comprehensive data solutions globally, with centers established across various regions.

A: They maintain energy efficiency through innovations such as liquid cooling, which uses coolants instead of more power-hungry air conditioning, and through optimized power usage strategies. These green measures reduce environmental impact without compromising performance, speed, reliability, or processing capability, and they save costs in the long run.

A: Colocation lets different companies rent space within one large data center building. Hyperscale and colocation facilities complement each other, offering the scalable, low-cost options needed to process vast amounts of information under various conditions.

A: The success or failure of any hyperscale operation largely depends on the facilities infrastructure that supports its network, storage, and cooling systems. Without robust, efficient backup power, rack space capacity planning, WAN optimization strategies, and the like, meaningful growth, scalability, performance, and reliability cannot be achieved in such ultra-high-demand environments.

A: The number of these facilities has increased significantly over the past decade. According to Synergy Research Group, this growth is driven by rising demand for cloud services and for faster data processing.