What is a data center?

The Data Center Design Code (GB 50174-2017) defines a data center as a building or site that provides an operating environment for centrally housed electronic information equipment. It may be one or several buildings or part of a building, and includes the main computer room, auxiliary areas, support areas, administrative areas, and so on.

Wikipedia defines a data center as “a complex set of facilities. It includes not only computer systems and other accompanying equipment (such as communication and storage systems), but also redundant data communication connections, environmental control equipment, monitoring equipment, and various security devices.”

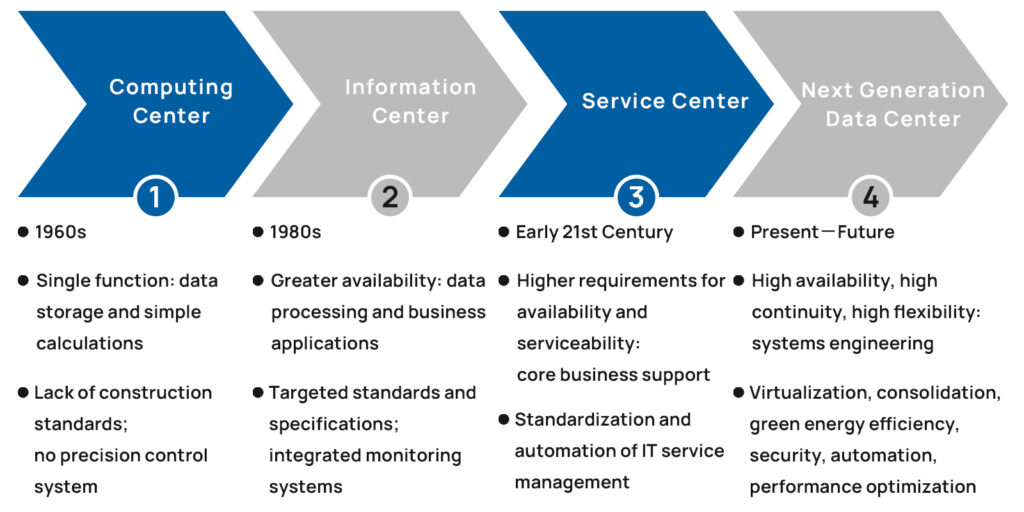

With the development of information technology and the acceleration of resource integration, demand for data centers has grown steadily. The functions of data centers have also changed and evolved, roughly passing through the following stages of development.

What is cloud computing?

There are many definitions of cloud computing. The following are the most widely accepted ones at present.

Cloud computing is a pay-per-use model that provides available, convenient, on-demand network access to a shared pool of configurable computing resources (including networks, servers, storage, applications, and services). These resources can be rapidly provisioned with minimal management effort or interaction with the service provider. (National Institute of Standards and Technology, NIST)

Cloud computing is an Internet-based computing method through which shared hardware and software resources, and information are made available on demand to computers and other devices. (Wikipedia).

Cloud computing is a model that enables network access to a scalable, elastic pool of shareable physical or virtual resources, with on-demand self-service provisioning and management of those resources. (“Information Security Technology Cloud Computing Service Security Capability Requirements” GB/T 31168-2014)

What is a cloud computing data center?

A cloud computing data center is a new type of data center built on a cloud computing architecture. Its computing, storage, service, and network resources are loosely coupled, its IT equipment is virtualized, and it is highly modular, automated, and energy-efficient.

Unlike traditional IDC (Internet data center) services such as cabinet leasing and server hosting, cloud computing data centers earn their main operating income by providing cloud services. They are flexible, energy-efficient, modular, automated, secure, stable, and virtualized.

Cloud Computing Data Center Construction

Data centers that serve different business purposes have different characteristics and requirements, and are therefore planned and built differently.

Data center planning should meet current requirements while fully allowing for the expansion that long-term business growth will demand, in areas such as power supply, network access capacity, the number of network carriers, and network path redundancy, so that the facility remains reliable, available, and sustainable.
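To make the long-term view concrete, here is a minimal sketch that projects load growth against a planned capacity limit. It assumes a constant annual growth rate; the function name `years_until_exhausted` and the figures in the example are hypothetical. The same check could be applied to power supply, network access bandwidth, or any other capacity listed above.

```python
from typing import Optional


def years_until_exhausted(current_load: float, capacity: float,
                          annual_growth: float, horizon_years: int) -> Optional[int]:
    """Return the first year (0 = today) in which projected load exceeds capacity.

    Assumes load grows by the constant fraction `annual_growth` every year.
    Returns None if capacity is sufficient for the whole planning horizon.
    """
    load = current_load
    for year in range(horizon_years + 1):
        if load > capacity:
            return year
        load *= 1 + annual_growth
    return None


if __name__ == "__main__":
    # Hypothetical figures: 400 kW IT load today, 1000 kW planned supply, 20% yearly growth.
    year = years_until_exhausted(400, 1000, 0.20, horizon_years=10)
    print(f"Planned power supply is exceeded in year {year}")
```

With these assumed figures the planned 1000 kW supply is exceeded in year 6, which would signal that the initial design should reserve room for expansion.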

Cloud Data Center Operations and Maintenance

Model Reference

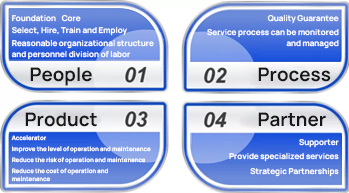

Data Center Operations and Maintenance Management Framework 4Ps

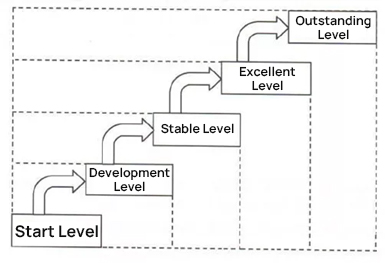

Data Center Service Capability Maturity

Based on the management domains defined in the ISO/IEC 20000 standard, a data center management model has been established that covers 15 areas: service planning and implementation, service level management, service reporting management, service continuity management, availability management, IT services budget and financial management, capacity management, information security management, business relationship management, supplier management, incident management, problem management, configuration management, change management, and release management. The data center's management maturity is assessed across these 15 areas.
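A maturity assessment across the 15 areas can be tabulated simply. The sketch below assumes a hypothetical 1 to 5 maturity scale per area (the text does not define the scale) and reports the average level together with the weakest areas, which would be natural candidates for improvement first. The function name `assess` and the sample scores are illustrative only.

```python
from statistics import mean

# The 15 management areas listed above.
AREAS = (
    "service planning and implementation",
    "service level management",
    "service reporting management",
    "service continuity management",
    "availability management",
    "IT services budget and financial management",
    "capacity management",
    "information security management",
    "business relationship management",
    "supplier management",
    "incident management",
    "problem management",
    "configuration management",
    "change management",
    "release management",
)

MIN_LEVEL, MAX_LEVEL = 1, 5  # assumed maturity scale, not defined in the text


def assess(scores: dict) -> tuple:
    """Return (average maturity, list of lowest-scoring areas)."""
    missing = set(AREAS) - set(scores)
    if missing:
        raise ValueError(f"no score for: {sorted(missing)}")
    for area in AREAS:
        if not MIN_LEVEL <= scores[area] <= MAX_LEVEL:
            raise ValueError(f"score out of range for {area!r}: {scores[area]}")
    overall = mean(scores[a] for a in AREAS)
    lowest = min(scores[a] for a in AREAS)
    weakest = [a for a in AREAS if scores[a] == lowest]
    return overall, weakest


if __name__ == "__main__":
    sample = {area: 3 for area in AREAS}  # hypothetical baseline scores
    sample["incident management"] = 4
    sample["change management"] = 2
    overall, weakest = assess(sample)
    print(f"average maturity {overall:.2f}; weakest: {weakest}")
```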

Interdependence between business and infrastructure

Information sharing is very important

Operations and maintenance (O&M) of business applications and of data center infrastructure are usually handled by two different companies, or by two departments of the same company, so information about changes and incidents is often delayed or never shared at all. In addition, mechanical and electrical equipment and facilities need routine servicing every spring and autumn, as well as occasional unscheduled maintenance, and data center reliability may be reduced while this work is under way.

If the infrastructure O&M team does not receive this information in time, its maintenance work may clash with individual application or business releases, go-live operations, year-end processing, and other critical periods.
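One way to act on this is to keep infrastructure maintenance windows and business-critical periods in a shared calendar and check them for overlap automatically. The sketch below assumes each window is a simple start/end interval with an exclusive end; the `Window` class, the window names, and the dates are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Window:
    name: str
    start: datetime
    end: datetime  # exclusive end time


def overlaps(a: Window, b: Window) -> bool:
    """Two half-open intervals [start, end) overlap if each starts before the other ends."""
    return a.start < b.end and b.start < a.end


def find_conflicts(maintenance: list, business: list) -> list:
    """Return (maintenance window, business window) name pairs that overlap in time."""
    return [(m.name, b.name) for m in maintenance for b in business if overlaps(m, b)]


if __name__ == "__main__":
    maintenance = [
        Window("spring UPS servicing", datetime(2024, 4, 13, 22), datetime(2024, 4, 14, 6)),
    ]
    business = [
        Window("application release", datetime(2024, 4, 14, 1), datetime(2024, 4, 14, 3)),
        Window("year-end closing", datetime(2024, 12, 31, 18), datetime(2025, 1, 1, 6)),
    ]
    for m_name, b_name in find_conflicts(maintenance, business):
        print(f"CONFLICT: {m_name} overlaps {b_name}")
```

Run regularly against both teams' schedules, a check like this surfaces clashes before either side commits to a date.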

Put your eggs in different baskets

In a typical cabinet layout, every two rows of cabinets are powered by the same pair of row-end (column-head) power distribution cabinets. If any equipment in these two rows fails, it may trip the main breaker in a row-end distribution cabinet, leaving both rows without power or running on a single power feed. Therefore, when deploying IT equipment, primary and backup devices should be placed in at least two separate cabinet rows that are fed by different row-end distribution cabinets, to reduce the risk of both going down at the same time.
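The placement rule can be checked mechanically before deployment. The sketch below assumes rows are numbered from 1 and that each consecutive pair of rows (1 and 2, 3 and 4, and so on) shares one power group; real layouts vary, so `power_group` is a hypothetical mapping that would need to be replaced with the site's actual power distribution records. Device names are also illustrative.

```python
def power_group(row: int) -> int:
    """Hypothetical mapping: rows 1-2 share group 1, rows 3-4 share group 2, and so on."""
    if row < 1:
        raise ValueError("rows are numbered from 1")
    return (row + 1) // 2


def unsafe_sets(placement: dict, redundant_sets: list) -> list:
    """Return redundant device sets whose members all depend on a single power group."""
    unsafe = []
    for devices in redundant_sets:
        groups = {power_group(placement[d]) for d in devices}
        if len(groups) < 2:
            unsafe.append(devices)
    return unsafe


if __name__ == "__main__":
    placement = {"db-primary": 1, "db-standby": 2, "web-a": 1, "web-b": 3}  # device -> row
    redundant = [["db-primary", "db-standby"], ["web-a", "web-b"]]
    print(unsafe_sets(placement, redundant))  # [['db-primary', 'db-standby']]
```

The database pair is flagged even though it sits in two different rows, because under the assumed mapping rows 1 and 2 share the same power distribution cabinets.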

Energy Efficiency in Cloud Data Centers

Data centers currently consume a large amount of energy, and as critical infrastructure their sustainability has become a major concern for governments and enterprises. Some data centers face policy-driven relocation or facility retrofits.

The Energy Conservation Law of the People’s Republic of China defines energy conservation as strengthening energy-use management and adopting measures that are technically feasible, economically reasonable, and acceptable to the environment and society, at every stage from energy production to consumption. These measures aim to reduce consumption, losses, and pollutant emissions, prevent waste, and promote the effective and rational use of energy.

Therefore, the energy efficiency evaluation of data centers and the implementation of reasonable energy-saving measures are of great importance.

Select IT equipment with high energy efficiency ratio

IT equipment energy efficiency ratio = data processed per second by the IT equipment / power consumption of the IT equipment.

A higher energy efficiency ratio means the IT equipment can process, store, and exchange more data for each unit of power it consumes. The same workload therefore needs less power, which significantly reduces the required capacity and energy consumption of the UPS and air-conditioning systems.
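As a rough illustration of the ratio, the sketch below compares two hypothetical server models and estimates the power needed to carry a fixed workload with each. The model names, throughput figures, and power draws are invented for the example, and throughput is left in abstract units per second because the text does not fix a unit.

```python
import math

# Hypothetical candidates: (data processed per second, power draw in watts).
CANDIDATES = {
    "model-A": (12_000, 400),
    "model-B": (15_000, 600),
}


def efficiency_ratio(throughput_per_s: float, power_w: float) -> float:
    """Energy efficiency ratio: data processed per second per watt consumed."""
    return throughput_per_s / power_w


def power_for_workload(model: str, required_per_s: float) -> int:
    """Total power (W) of the fewest units of `model` that can carry the workload."""
    throughput, power = CANDIDATES[model]
    units = math.ceil(required_per_s / throughput)
    return units * power


if __name__ == "__main__":
    for name, (tput, watts) in CANDIDATES.items():
        print(f"{name}: ratio {efficiency_ratio(tput, watts):.1f} per W, "
              f"{power_for_workload(name, 60_000)} W for 60,000 per second")
```

Under these assumptions model-A needs 2000 W against 2400 W for model-B for the same workload, and that difference would also shrink the UPS and cooling capacity that has to be provisioned.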

Increase server CPU utilization

Research has shown that data centers contain “ghost servers”, which consume power but do no useful work. Power tests on a common server model in a data center gave the following results: 500 W at idle (standby), 580 W at 30% load, 630 W at 50% load, 760 W at 80% load, and 810 W at 100% load. Even when idle or lightly loaded, a server still draws a large share of its full-load power (at idle, roughly 62%).
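Using the measured figures above, the sketch below computes how much power the server draws per percentage point of CPU load, and compares spreading one server's worth of work across two half-loaded servers with consolidating it onto one fully loaded server. It assumes the workload can be split or merged freely between identical servers and ignores the headroom real deployments keep for peaks; the function names are illustrative.

```python
# Measured power draw (W) of one common server model at different CPU loads,
# from the test described above; a load of 0.0 is idle/standby.
POWER_W = {0.0: 500, 0.3: 580, 0.5: 630, 0.8: 760, 1.0: 810}


def watts_per_load_point(load: float) -> float:
    """Power drawn per percentage point of CPU load (lower means more efficient)."""
    if load <= 0:
        raise ValueError("an idle server does no useful work")
    return POWER_W[load] / (load * 100)


def total_power(loads: list) -> int:
    """Combined draw of running servers at the given loads (switched-off servers are omitted)."""
    return sum(POWER_W[load] for load in loads)


if __name__ == "__main__":
    print(f"idle draw is {POWER_W[0.0] / POWER_W[1.0]:.0%} of full-load draw")
    for load in (0.3, 0.5, 0.8, 1.0):
        print(f"{load:.0%} load: {watts_per_load_point(load):.1f} W per load point")
    # The same total work, one server's worth at 100%, placed two different ways.
    spread = total_power([0.5, 0.5])   # two servers at 50%
    consolidated = total_power([1.0])  # one server at 100%, the other switched off
    print(f"spread {spread} W vs consolidated {consolidated} W, saving {spread - consolidated} W")
```

Under these assumptions, consolidation saves 450 W for the same work, which is the motivation for raising CPU utilization and retiring idle servers.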

Timely replacement of old equipment

The Guiding Opinions on Strengthening Energy Conservation and Emission Reduction in the Information and Communications Industry during the “13th Five-Year Plan” period clearly set out the main objectives: by 2020, information and communications networks should fully adopt energy-saving and emission-reduction technologies, and outdated, high-energy-consumption communications equipment should be largely phased out.

Older equipment usually has high power consumption, outdated technology, and low integration, and the cost of replacing servers is actually much lower than the energy cost of continuing to run old equipment.
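Whether a replacement pays for itself can be estimated with a simple payback calculation. The sketch below is a hedged illustration: it counts only the IT equipment's own electricity (UPS and cooling overhead would add to the savings), assumes continuous operation at a constant average power, and uses hypothetical prices, power figures, and a 3-to-1 consolidation ratio reflecting the higher integration of newer servers.

```python
HOURS_PER_YEAR = 24 * 365


def annual_energy_cost(avg_power_w: float, price_per_kwh: float) -> float:
    """Electricity cost of running a device at a constant average power for one year."""
    return avg_power_w / 1000 * HOURS_PER_YEAR * price_per_kwh


def payback_years(purchase_cost: float, old_power_w: float, old_count: int,
                  new_power_w: float, price_per_kwh: float) -> float:
    """Years until energy savings repay one new server that replaces `old_count`
    old servers. IT power only; UPS and cooling overhead are ignored."""
    old_cost = old_count * annual_energy_cost(old_power_w, price_per_kwh)
    new_cost = annual_energy_cost(new_power_w, price_per_kwh)
    saving = old_cost - new_cost
    if saving <= 0:
        return float("inf")  # never pays for itself on energy alone
    return purchase_cost / saving


if __name__ == "__main__":
    # Hypothetical: one 400 W server replaces three 600 W servers; price 1.0 per kWh.
    years = payback_years(purchase_cost=20_000, old_power_w=600, old_count=3,
                          new_power_w=400, price_per_kwh=1.0)
    print(f"payback in about {years:.2f} years")
```

With these assumed figures the purchase is repaid in about 1.63 years; real prices, utilization, and cooling overhead will change the result.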

Try to avoid local hot spots

As far as possible, high-heat-density cabinets should not be mixed with ordinary cabinets in the same area, and the necessary air-volume and airflow measures should be taken. Empty rack units (U) with no server installed should be fitted with blanking panels, so that hot exhaust air cannot flow back through the gaps to the server air inlets at the front of the cabinet and create hot spots.
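To find where blanking panels are needed, the empty U positions in a cabinet can be derived from the list of installed devices. The sketch below assumes U positions are numbered from 1 at the bottom and that each device is recorded as a (lowest U, height in U) pair; the function name and the example layout are hypothetical.

```python
def empty_u_ranges(cabinet_u: int, devices: list) -> list:
    """Return (first_u, last_u) ranges of unoccupied rack units.

    `devices` holds (lowest_u, height_u) pairs; U positions are numbered
    from 1 at the bottom of the cabinet.
    """
    occupied = [False] * (cabinet_u + 1)  # index 0 is unused
    for lowest_u, height_u in devices:
        for u in range(lowest_u, lowest_u + height_u):
            if not 1 <= u <= cabinet_u:
                raise ValueError(f"device at U{lowest_u} extends outside the cabinet")
            if occupied[u]:
                raise ValueError(f"U{u} is occupied by more than one device")
            occupied[u] = True

    ranges, run_start = [], None
    for u in range(1, cabinet_u + 2):  # one step past the top closes a trailing run
        free = u <= cabinet_u and not occupied[u]
        if free and run_start is None:
            run_start = u
        elif not free and run_start is not None:
            ranges.append((run_start, u - 1))
            run_start = None
    return ranges


if __name__ == "__main__":
    gaps = empty_u_ranges(42, [(1, 2), (5, 1), (10, 4)])
    panels_needed = sum(last - first + 1 for first, last in gaps)  # counted in 1U panels
    print(gaps, panels_needed)
```

For this 42U example layout the free ranges are U3 to U4, U6 to U9, and U14 to U42, i.e. 35 U to cover with panels.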