Over the past decade, data centers—such as those operated by Microsoft, Alibaba Cloud, and Amazon—have expanded rapidly, with the number of servers far surpassing that of traditional supercomputers. These data centers predominantly use Ethernet for interconnection, with transmission speeds evolving from the early 10Gbps and 100Gbps to today’s 400Gbps, and are now progressing toward 800Gbps and even 1.6Tbps.

However, the growth in CPU computing power has lagged behind the increase in network bandwidth, exposing two major bottlenecks in traditional software-based network protocol stacks: first, CPUs must frequently handle network data transfers, consuming significant computing resources; second, they struggle to meet the demanding requirements for high throughput and ultra-low latency in applications such as distributed storage, big data, and machine learning.

Remote Direct Memory Access (RDMA) technology has become a key solution to this problem. Originally developed for high-performance computing (HPC), RDMA enables data to bypass the CPU and be transferred directly between the memory of different servers, significantly reducing latency and improving throughput. The growing availability of Ethernet RDMA network interface cards (NICs), from 100G-class parts such as Intel E810 to 400G-class parts such as NVIDIA ConnectX-7, has enabled large-scale deployment of RDMA in data centers.

These NICs not only support ultra-high-speed Ethernet but also enable efficient RDMA communication via protocols such as RoCE (RDMA over Converged Ethernet) or iWARP. This allows applications to fully utilize the bandwidth of 400G+ networks while reducing CPU overhead. For example, distributed storage systems (such as Ceph and NVMe-oF) and AI training frameworks (such as TensorFlow and PyTorch) have widely adopted RDMA NICs to significantly enhance data transfer efficiency.

Before diving into 400G Ethernet RDMA NICs, it’s important to understand what RDMA is.

RDMA (Remote Direct Memory Access) is a networking technology that enables servers to directly read from or write to the memory of other servers at high speed, without involving the remote host's CPU or the operating system kernel in the data path. Its core objectives are to reduce network communication latency, increase throughput, and significantly lower CPU resource consumption.

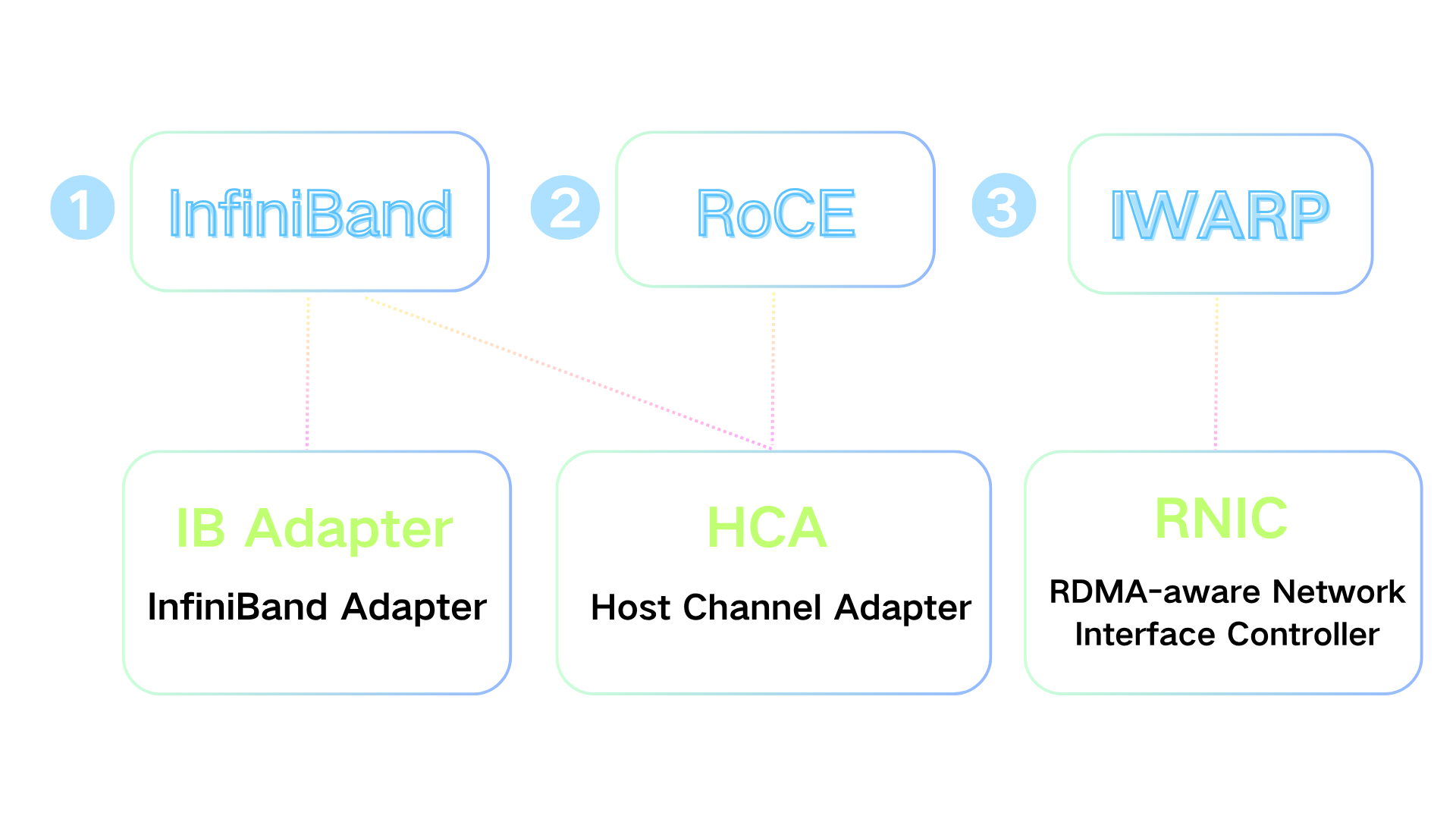

Currently, there are three mainstream implementations of RDMA: IB (InfiniBand), RoCE (RDMA over Converged Ethernet) and iWARP.

InfiniBand (IB)

RoCE (RDMA over Converged Ethernet)

iWARP (Internet Wide Area RDMA Protocol)

RDMA allows data to move directly from the memory of one computer to another, bypassing the CPU and operating system kernel. This results in:

Zero-copy data transfer: Eliminates data replication between user and kernel spaces.

Low latency: Achieves microsecond-level delays, compared with tens of microseconds to milliseconds for a traditional kernel TCP/IP stack under load.

High bandwidth: Fully utilizes network speeds up to 400Gbps or higher.

CPU offloading: Reduces CPU involvement, freeing resources for other tasks.
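To make the one-sided, zero-copy idea above concrete, here is a minimal toy model in Python. It is only a sketch: `ToyNic`, `MemoryRegion`, and `rkey` handling are hypothetical names for illustration and do not correspond to any real driver or verbs API. The point it tries to show is that the remote side registers a buffer once, and the write then lands directly in that buffer with bounds and key checks done by the (simulated) NIC rather than by application code on the remote host.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryRegion:
    """A registered buffer that the simulated NIC may access directly."""
    buf: bytearray
    rkey: int  # hypothetical access key handed to the remote peer


@dataclass
class ToyNic:
    """Stands in for the remote RDMA NIC; not a real driver or verbs API."""
    regions: dict = field(default_factory=dict)

    def register(self, size: int, rkey: int) -> MemoryRegion:
        """Register a buffer so that peers holding rkey can target it."""
        mr = MemoryRegion(bytearray(size), rkey)
        self.regions[rkey] = mr
        return mr

    def rdma_write(self, rkey: int, offset: int, payload: bytes) -> None:
        """One-sided write: place the payload straight into the registered buffer."""
        mr = self.regions.get(rkey)
        if mr is None:
            raise PermissionError("unknown rkey")
        if offset < 0 or offset + len(payload) > len(mr.buf):
            raise ValueError("write outside registered region")
        # Slice assignment through a memoryview writes in place, with no staging buffer.
        memoryview(mr.buf)[offset:offset + len(payload)] = payload


remote_nic = ToyNic()
region = remote_nic.register(size=16, rkey=0x1234)
remote_nic.rdma_write(rkey=0x1234, offset=4, payload=b"data")
```

After the call, bytes 4 to 7 of `region.buf` hold the payload, and no receive-side application code ran to put them there. A real NIC enforces the same kind of key and bounds checks in hardware.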

Two primary RDMA protocols dominate Ethernet environments:

RoCE (RDMA over Converged Ethernet):

iWARP (Internet Wide Area RDMA Protocol):

Built on TCP/IP, it integrates with existing Ethernet infrastructure but incurs slightly higher latency and CPU overhead compared to RoCE. It is better suited for wide-area networks.

Ethernet RDMA NICs are high-performance network hardware that allow a computer to directly access the memory of a remote host without involving the CPU. This significantly reduces latency, increases throughput, and lowers CPU overhead.

As Ethernet RDMA technology gains widespread attention and deployment in data center scenarios such as network storage and cloud computing, Ethernet RDMA NICs—being the key components for enabling this technology—are playing an increasingly vital role.

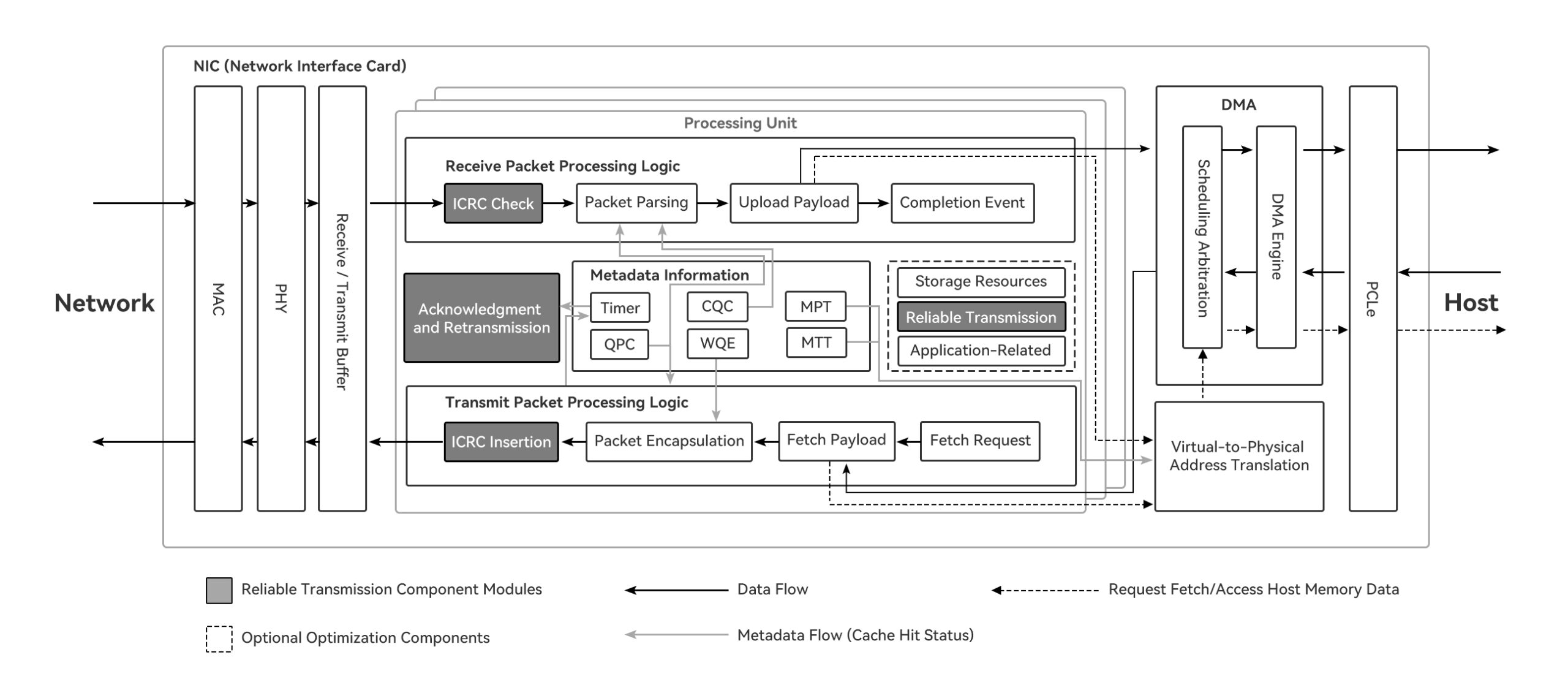

On one hand, the NIC is responsible for implementing the most complex aspects of Ethernet RDMA technology, such as protocol offloading, reliable transmission, and congestion control. In contrast, switches typically require only relatively simple functionalities, such as priority-based flow control (PFC) and explicit congestion notification (ECN).

On the other hand, offloading processing to hardware means the NIC must store state information needed for reading and writing remote memory. However, the on-chip cache and processing logic resources of the NIC are limited, making it essential to have a solid understanding of the NIC’s microarchitecture for effective design and optimization.
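The effect of limited on-chip state can be illustrated with a small simulation. The sketch below assumes, purely for illustration, that the NIC keeps per-connection state in an LRU cache of fixed capacity and that connections are served round-robin; the class name, the capacity of 128 entries, and the access pattern are all hypothetical and not taken from any specific NIC.

```python
from collections import OrderedDict


class ContextCache:
    """LRU cache standing in for a NIC's on-chip connection-state store."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.entries: OrderedDict = OrderedDict()
        self.hits = 0
        self.misses = 0

    def access(self, conn_id: int) -> None:
        if conn_id in self.entries:
            self.entries.move_to_end(conn_id)  # mark as most recently used
            self.hits += 1
            return
        self.misses += 1  # a real NIC would fetch the state from host memory here
        self.entries[conn_id] = True
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry

    def miss_rate(self) -> float:
        total = self.hits + self.misses
        return self.misses / total if total else 0.0


def round_robin_miss_rate(connections: int, capacity: int, rounds: int = 10) -> float:
    """Touch every connection in turn for several rounds and report the miss rate."""
    cache = ContextCache(capacity)
    for _ in range(rounds):
        for conn_id in range(connections):
            cache.access(conn_id)
    return cache.miss_rate()


small = round_robin_miss_rate(connections=64, capacity=128)
large = round_robin_miss_rate(connections=256, capacity=128)
```

With 64 connections everything fits and only the first round misses. With 256 connections the round-robin pattern evicts each entry before it is reused, so every access misses. Real workloads are less adversarial, but the cliff is the reason connection scalability depends on the NIC's microarchitecture.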

400G Ethernet RDMA network cards represent a pinnacle of high-performance networking technology, designed to meet the escalating demands of modern data centers, high-performance computing (HPC), and cloud environments. By enabling direct memory access between systems without CPU intervention, these cards deliver ultra-low latency, high throughput, and significant CPU offloading. This article explores the core features, applications, technical details, and future trends of 400G Ethernet RDMA network cards.

Ultra-High Bandwidth: Single-port 400Gbps (e.g., QSFP-DD or OSFP interfaces), replacing multiple low-speed NICs.

RDMA Support: Enables zero-copy, kernel-bypass data transfer via RoCEv2 (UDP/IP-based) or iWARP (TCP/IP-based).

Hardware Offload:

Low Latency: End-to-end latency can be below 1 μs (depending on network configuration).

Multi-Protocol Compatibility: Typically supports Ethernet (400G), InfiniBand (e.g., NVIDIA ConnectX-7), and storage protocols (e.g., NVMe-oF).
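As a rough check on how much of a 400G port is left for payload, the following sketch adds up the per-packet bytes of a RoCEv2 packet over IPv4. It assumes no VLAN tag and counts only the 12-byte Base Transport Header, ignoring extended headers such as the RETH carried by the first packet of an RDMA write; the function names are illustrative.

```python
# Per-packet byte counts for RoCEv2 over IPv4 (no VLAN tag, no extended headers).
ETH_HEADER = 14
IPV4_HEADER = 20
UDP_HEADER = 8         # RoCEv2 uses UDP destination port 4791
IB_BTH = 12            # InfiniBand Base Transport Header
ICRC = 4               # invariant CRC appended to the RDMA payload
ETH_FCS = 4
PREAMBLE_AND_IFG = 20  # 8-byte preamble/SFD plus 12-byte inter-frame gap

OVERHEAD = (ETH_HEADER + IPV4_HEADER + UDP_HEADER + IB_BTH
            + ICRC + ETH_FCS + PREAMBLE_AND_IFG)


def wire_bytes(payload_bytes: int) -> int:
    """Bytes of link time consumed by one packet carrying payload_bytes."""
    return payload_bytes + OVERHEAD


def goodput_gbps(link_gbps: float, payload_bytes: int) -> float:
    """Payload throughput when the link is saturated with packets of this size."""
    return link_gbps * payload_bytes / wire_bytes(payload_bytes)


def serialization_ns(link_gbps: float, payload_bytes: int) -> float:
    """Time to put one packet on the wire (1 Gbps equals 1 bit per nanosecond)."""
    return wire_bytes(payload_bytes) * 8 / link_gbps


goodput_4k = goodput_gbps(400.0, 4096)
goodput_1k = goodput_gbps(400.0, 1024)
```

Under these assumptions a 4096-byte payload keeps roughly 98% of the link for data, while a 1024-byte payload keeps noticeably less, which is one reason a larger RDMA MTU is usually preferred at 400G.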

Leading vendors provide advanced 400G RDMA solutions:

400G Ethernet RDMA network cards are primarily used in network environments that require high bandwidth and low latency, such as high-performance computing (HPC), artificial intelligence (AI), big data analytics, and cloud computing. Below are some specific application scenarios:

All these application scenarios demand high-bandwidth, low-latency network connections. With their high performance and low latency, 400G Ethernet RDMA network cards can significantly enhance the performance and efficiency of these applications.

Issue: 400G traffic bursts can easily cause packet loss, and RoCEv2 is highly sensitive to packet loss.

Solutions:

Enable DCQCN (Data Center Quantized Congestion Notification) or TIMELY (latency-based congestion control).

Configure lossless Ethernet using PFC + ECN, while avoiding Head-of-Line (HOL) blocking caused by PFC.
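The sender-side behavior of DCQCN can be sketched in a few lines. This is a deliberately simplified model based on the published algorithm: it keeps the rate-cut and alpha-update rules but merges the separate alpha and rate-increase timers into a single "quiet period" event and omits the byte counter and the additive and hyper increase stages. The class and method names are hypothetical, and `g = 1/256` is used as an assumed default.

```python
from dataclasses import dataclass, field


@dataclass
class DcqcnSender:
    """Simplified DCQCN reaction point for one flow (rates in Gbps)."""
    line_rate_gbps: float
    g: float = 1 / 256  # weight used when updating the congestion estimate alpha
    alpha: float = 1.0
    current_rate: float = field(init=False, default=0.0)
    target_rate: float = field(init=False, default=0.0)

    def __post_init__(self) -> None:
        # A flow starts at line rate.
        self.current_rate = self.line_rate_gbps
        self.target_rate = self.line_rate_gbps

    def on_cnp(self) -> None:
        """Congestion notification packet received: remember the rate, then cut it."""
        self.target_rate = self.current_rate
        self.current_rate *= 1 - self.alpha / 2
        self.alpha = (1 - self.g) * self.alpha + self.g

    def on_quiet_period(self) -> None:
        """No CNP for one period: decay alpha and recover halfway to the target."""
        self.alpha = (1 - self.g) * self.alpha
        self.current_rate = (self.current_rate + self.target_rate) / 2


sender = DcqcnSender(line_rate_gbps=400.0)
sender.on_cnp()           # ECN-marked traffic triggered a CNP from the receiver
rate_after_cut = sender.current_rate
sender.on_quiet_period()  # congestion cleared for one period
rate_after_recovery = sender.current_rate
```

In this model the first CNP halves the rate and each quiet period closes half of the remaining gap to the pre-cut rate. Because the cut happens at the sender in response to ECN marks, queues can drain before PFC pause frames are needed.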

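Issue: 400G line rate is 50 GB/s per direction, which exceeds what PCIe 4.0 x16 or PCIe 5.0 x8 can carry (~32 GB/s per direction). A PCIe 5.0 x16 link (~64 GB/s per direction) is required.

Solutions:

Use PCIe 5.0 x16 slots (32 GT/s per lane).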

Optimize with multi-queue configuration, binding each CPU core to an independent queue.
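The PCIe requirement is easy to check with a back-of-the-envelope calculation. The sketch below uses the per-lane transfer rates of PCIe 4.0 and 5.0 and their 128b/130b line coding; it ignores TLP and DLLP protocol overhead, so usable bandwidth is somewhat lower than these figures. The function names are illustrative.

```python
PCIE_GT_PER_LANE = {"4.0": 16.0, "5.0": 32.0}  # giga-transfers per second per lane
ENCODING_EFFICIENCY = 128 / 130                # 128b/130b line coding (PCIe 3.0 to 5.0)


def pcie_gbps(gen: str, lanes: int) -> float:
    """Raw per-direction link bandwidth in Gbps, before packet overhead."""
    return PCIE_GT_PER_LANE[gen] * lanes * ENCODING_EFFICIENCY


def fits_line_rate(gen: str, lanes: int, nic_gbps: float = 400.0) -> bool:
    """True if the slot can carry the NIC's line rate in one direction."""
    return pcie_gbps(gen, lanes) >= nic_gbps


for gen, lanes in [("4.0", 16), ("5.0", 8), ("5.0", 16)]:
    gbps = pcie_gbps(gen, lanes)
    verdict = "ok" if fits_line_rate(gen, lanes) else "too slow"
    print(f"PCIe {gen} x{lanes}: {gbps:.0f} Gbps ({gbps / 8:.1f} GB/s) -> {verdict}")
```

Of the three configurations, only PCIe 5.0 x16 clears 400 Gbps; PCIe 4.0 x16 and PCIe 5.0 x8 both top out at roughly 252 Gbps per direction.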

Issue: 400G NICs can consume 25–40W, requiring robust cooling.

Solutions:

Choose NICs with heat sinks or active cooling fans.

Optimize chassis airflow design.

As data center networks rapidly evolve from 100G/200G toward 400G and beyond, Ethernet RDMA network cards are becoming core components of next-generation high-performance infrastructure. The following trends highlight the current trajectory and future direction of the RDMA ecosystem:

While InfiniBand has long been the gold standard for high-performance communication, its specialized, largely single-vendor ecosystem and high cost have limited large-scale adoption. In contrast, RoCEv2, an open and Ethernet-based RDMA solution, offers a compelling alternative due to its performance, affordability, and ecosystem maturity.

Hyperscale cloud providers such as Alibaba Cloud and Microsoft Azure have integrated RoCEv2 into their infrastructures to accelerate AI training, storage access, and multi-tenant computing.

Lower Deployment Costs: RoCEv2 runs on standard Ethernet hardware, enabling reuse of existing switches and cabling infrastructure.

Simplified Operations: Ethernet is well understood and easier to maintain for most engineering teams.

Better Scalability: RoCEv2 supports Layer 3 routing, making it suitable for large-scale, multi-rack, and cross-subnet deployments.

These attributes make RoCEv2 a high-performance yet cost-effective choice for modern data centers.

(1) 800G/1.6T Ethernet Is on the Horizon

Driven by innovations in switching silicon (e.g., Broadcom Tomahawk 5) and PHY standards, the industry is preparing for 800G and 1.6T Ethernet adoption. Next-generation RDMA NICs—such as NVIDIA’s upcoming ConnectX-8—will support higher port densities, lower power consumption, and increased throughput, further enhancing RDMA’s role in scalable computing infrastructures.

(2) DPU Integration: RDMA + Intelligent Offloading

Data Processing Units (DPUs) represent the next step in smart networking, combining traditional NIC capabilities with programmable compute. By embedding RDMA functionality into DPUs, these devices can offload network, storage, and security processing from the CPU—enabling end-to-end acceleration for AI workloads and disaggregated storage.

For example, NVIDIA’s BlueField DPU integrates RDMA, IPsec, and NVMe-oF acceleration, and is becoming a key component of next-gen hyperconverged infrastructure.

(3) SmartNIC Intelligence: In-Network Computing

SmartNICs are evolving from simple data movers to programmable in-network compute nodes with features like:

This trend transforms RDMA NICs into intelligent execution engines for distributed systems, far beyond traditional network acceleration.

400G Ethernet RDMA network cards are transformative for data centers, HPC, and AI-driven applications. By leveraging hardware offloading, RoCEv2 or iWARP protocols, and advanced congestion control, these cards deliver unparalleled performance with minimal latency and CPU overhead. Careful attention to network configuration, PCIe bandwidth, and thermal design is critical for optimal deployment. As the industry moves toward 800G and DPU-integrated solutions, RDMA technology will continue to redefine the boundaries of distributed computing and storage performance.