In the era of rapid development in Artificial Intelligence (AI), High-Performance Computing (HPC), and large-scale data centers, network interconnect technology has become the backbone for massive data transmission. As a low-latency, high-bandwidth interconnect protocol led by NVIDIA and other industry leaders, InfiniBand has emerged as the standard choice for AI training and HPC clusters.

By 2025, with the widespread adoption of the NVIDIA Blackwell platform, 400G (NDR single-port) and 800G (dual-port 2×400G) optical modules are experiencing explosive growth. These modules support data rates of up to 800Gb/s, significantly improving system efficiency and meeting the surging bandwidth demands of AI model training. According to market analysis, the market size for 800G modules is expected to grow by more than 50% in 2025, driven primarily by the expansion of AI data centers.

Compared with Ethernet, InfiniBand places greater emphasis on Remote Direct Memory Access (RDMA) and zero-copy transmission, enabling sub-microsecond latency. This makes 400G/800G optical modules suitable not only for interconnects inside a data center but also for data center interconnections across sites. This article explores their technical specifications, key advantages, application scenarios, challenges, and future trends, and offers practical recommendations.

With the rapid advancement of artificial intelligence (AI), high-performance computing (HPC), and big data applications, data centers are facing increasing demands for higher network bandwidth and lower latency. InfiniBand (IB), as a network technology specifically designed for high-performance interconnects, has introduced the NDR (Next Data Rate) generation with 400G and 800G speed standards, further enhancing data transmission efficiency.

The 400G/800G InfiniBand optical modules are key physical-layer components for achieving these high speeds, primarily used for fiber optic connections between servers, switches, and storage systems. They support Remote Direct Memory Access (RDMA) and multi-lane parallel transmission. These modules employ advanced PAM4 (Pulse Amplitude Modulation 4-level) modulation and multi-wavelength technologies, delivering aggregate throughputs up to 800 Gb/s while maintaining low power consumption, making them ideal for large-scale AI training clusters and cloud data centers.

Technical Background and Specifications

The InfiniBand NDR standard was launched in 2022, offering an effective throughput of 100 Gb/s per lane. The 400G configuration typically uses 4 lanes, while 800G is achieved with 8 lanes or a dual-port 2×400G setup, supporting both InfiniBand and Ethernet protocols. These optical modules operate at typical wavelengths of 850 nm (multimode) and 1310 nm or 1270-1330 nm (single-mode), with transmission distances ranging from 50 m to 2 km. Power consumption ranges from roughly 8-9 W for 400G modules to about 15-20 W for 800G modules.
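The lane arithmetic above can be checked with a few lines of Python. This is only an illustrative sketch: the 53.125 GBd symbol rate is an assumption based on common 100G-per-lane PAM4 signaling and is not stated in this article, and the function names are hypothetical.

```python
import math


def bits_per_symbol(levels: int) -> int:
    """Bits carried by one symbol that can take `levels` amplitude levels."""
    return int(math.log2(levels))


def aggregate_gbps(lanes: int, per_lane_gbps: float = 100.0) -> float:
    """Effective aggregate throughput for a number of parallel lanes."""
    return lanes * per_lane_gbps


PAM4_LEVELS = 4
ASSUMED_BAUD_GBD = 53.125  # assumption: typical 100G-per-lane PAM4 symbol rate

# Raw line rate per lane before encoding and FEC overhead is removed.
raw_lane_gbps = ASSUMED_BAUD_GBD * bits_per_symbol(PAM4_LEVELS)

print(f"PAM4 carries {bits_per_symbol(PAM4_LEVELS)} bits per symbol")
print(f"Raw per-lane rate (assumed baud): {raw_lane_gbps} Gb/s")
print(f"400G, 4 lanes: {aggregate_gbps(4)} Gb/s")
print(f"800G, 8 lanes: {aggregate_gbps(8)} Gb/s")
print(f"800G, dual-port 2x400G: {2 * aggregate_gbps(4)} Gb/s")
```

Under that assumption, the gap between the raw per-lane rate and the 100 Gb/s effective rate is the overhead consumed by encoding and forward error correction.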

Common form factors include:

OSFP (Octal Small Form-Factor Pluggable): Supports 8 lanes, with a larger size and excellent heat dissipation, commonly used for 800G modules, such as 800G OSFP SR8/DR8.

QSFP-DD (Quad Small Form-Factor Pluggable Double Density): Double-density design supporting 8 lanes, compatible with existing infrastructure, suitable for mixed 400G/800G deployments.

QSFP112: Optimized for 400G, supporting 112 Gb/s per lane, ideal for single-port NDR InfiniBand configurations.

These modules are typically equipped with Digital Diagnostic Monitoring (DDM) features for real-time monitoring of temperature, power, and signal integrity, ensuring system reliability.
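As a rough illustration of how DDM readings might be consumed, the sketch below compares one set of readings against alarm limits. The `DdmReading` class, the `check_reading` helper, and every threshold value are hypothetical placeholders, not figures from any module datasheet; real limits come from the module's own specification.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class DdmReading:
    """One snapshot of module diagnostics (hypothetical structure)."""
    temperature_c: float
    tx_power_dbm: float
    rx_power_dbm: float


# Hypothetical (low, high) alarm limits, for illustration only.
LIMITS: Dict[str, Tuple[float, float]] = {
    "temperature_c": (0.0, 70.0),
    "tx_power_dbm": (-6.0, 4.0),
    "rx_power_dbm": (-8.0, 4.0),
}


def check_reading(reading: DdmReading,
                  limits: Dict[str, Tuple[float, float]] = LIMITS) -> List[str]:
    """Return one alarm string per field that falls outside its limits."""
    alarms = []
    for field, (low, high) in limits.items():
        value = getattr(reading, field)
        if value < low:
            alarms.append(f"{field} low: {value}")
        elif value > high:
            alarms.append(f"{field} high: {value}")
    return alarms


healthy = DdmReading(temperature_c=45.0, tx_power_dbm=1.0, rx_power_dbm=-2.0)
degraded = DdmReading(temperature_c=82.5, tx_power_dbm=1.0, rx_power_dbm=-12.0)

print(check_reading(healthy))
print(check_reading(degraded))
```

A low receive power alarm of this kind is often the first visible sign of a dirty connector or a damaged fiber, which is why monitoring it continuously is useful.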

| Item | 400G InfiniBand Optical Module | 800G InfiniBand Optical Module |
| --- | --- | --- |
| Data Rate | Single-port 400 Gb/s (NDR) | Dual-port 2×400 Gb/s = 800 Gb/s |
| Form Factor | QSFP112 / OSFP | OSFP (dual-port design) |
| Modulation | PAM4 | PAM4 |
| Fiber Type | Multimode Fiber (MMF) / Single-Mode Fiber (SMF) | Single-Mode Fiber (SMF) / limited MMF for short reach |
| Typical Power Consumption | 8–9 W | ~15 W |
| Supported Platforms | NVIDIA Quantum-2, ConnectX-7, BlueField-3 | NVIDIA Quantum-2, Quantum-X800, ConnectX-8 (upcoming) |
| Common Types | SR4 (≤100 m MMF), DR4 (500 m SMF), FR4 (2 km SMF), LR4 (10 km SMF), ZR (up to 80 km SMF) | SR8, DR8, FR4, 2×DR4, supporting breakout into 2×400G |
| Use Cases | | |
| Advantages | Mature, lower power, strong compatibility | Double bandwidth, higher port density, future-ready |
| Evolution | Migration to 800G | Evolution toward 1.6T (expected 2026–2027) |
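The typical power figures in the comparison above can be turned into energy per bit, which makes the efficiency difference between the two generations easier to see. This is a back-of-the-envelope sketch using the upper 400G figure (9 W) and the approximate 800G figure (15 W); the function name is hypothetical.

```python
def picojoules_per_bit(power_w: float, rate_gbps: float) -> float:
    """Energy spent per transmitted bit, in picojoules.

    1 W at 1 Gb/s is 1 nJ/bit, i.e. 1000 pJ/bit.
    """
    return power_w * 1000.0 / rate_gbps


modules = {
    "400G (9 W)": (9.0, 400.0),
    "800G (15 W)": (15.0, 800.0),
}

for name, (power_w, rate_gbps) in modules.items():
    print(f"{name}: {picojoules_per_bit(power_w, rate_gbps):.2f} pJ/bit")
```

With these inputs the 800G module draws more power in total but spends less energy per bit (18.75 pJ/bit versus 22.5 pJ/bit), which is consistent with the higher port density advantage listed above.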

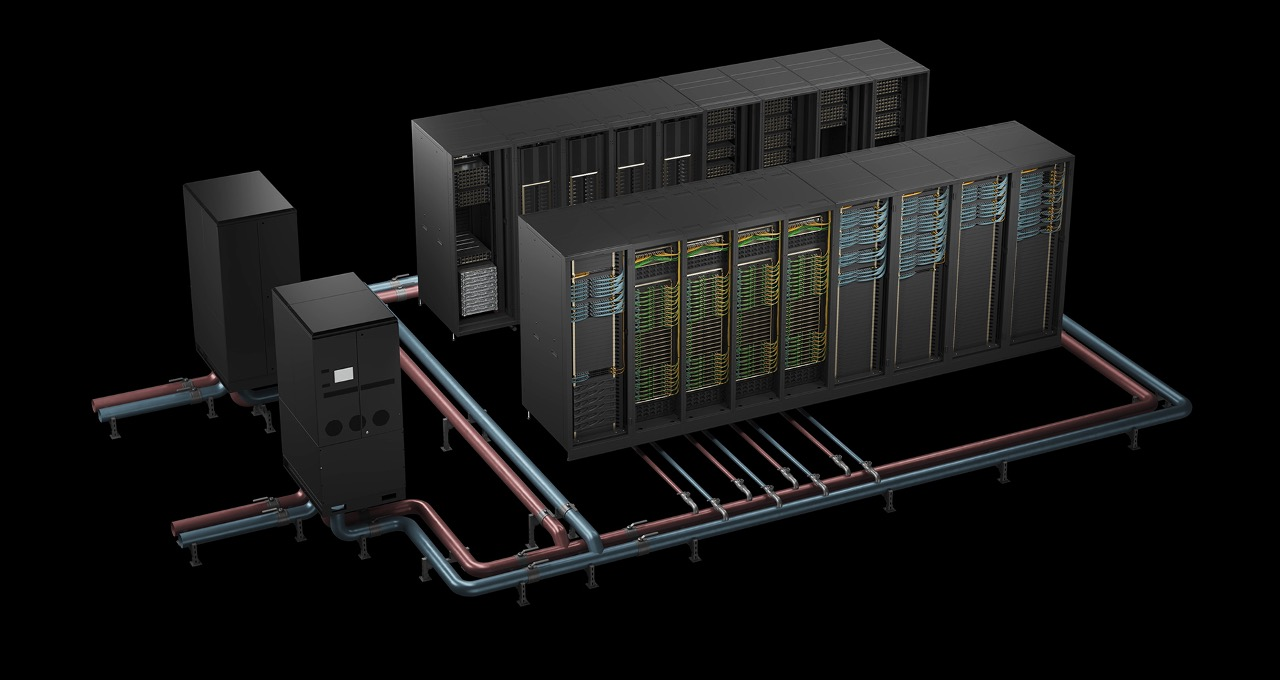

In practical deployments, 400G/800G InfiniBand optical modules are widely used in HPC systems such as NVIDIA DGX SuperPOD for high-speed GPU-to-GPU communication, which keeps parallel efficiency high during AI model training. Compared to traditional Ethernet modules, InfiniBand modules offer lower latency (<0.5 μs) and higher bandwidth utilization, reducing CPU overhead and enabling large-scale expansion. As 1.6T modules arrive, expected in 2026–2027, 400G/800G modules will serve as transitional technologies, driving data centers toward even higher speeds.

In summary, 400G/800G InfiniBand optical modules represent the pinnacle of networking innovation, optimizing optical and electrical interfaces to meet the extreme demands of modern computing and laying the foundation for future intelligent infrastructures.

Within the NVIDIA ecosystem, 400G/800G optical modules are widely deployed to build large-scale GPU clusters. For instance, in AI data centers, the 800G SR8 module helps reduce cabling complexity while supporting the interconnection of thousands of GPUs. In early Blackwell systems, 400G NDR modules are being used as a transition toward 800G.

A typical use case can be found in supercomputing projects, where DR8 modules enable inter-data center connectivity over distances of up to 500 meters, powering applications such as weather simulation and genomics research. Moreover, Corning’s EDGE8 solution further optimizes cabling infrastructure for these modules, supporting the continuous evolution of AI and machine learning workloads.

Despite their advanced capabilities, these modules face challenges such as high power consumption and compatibility issues. Supply chain disruptions, such as the silicon shortage during 2024–2025, could also affect large-scale deployments.

Potential solutions include adopting third-party compatible modules and implementing optimized cooling systems to address thermal concerns. In addition, standardized testing and proper fiber cleaning and maintenance can help reduce the Bit Error Rate (BER), improving overall reliability.
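To see why BER matters more as line rates rise, the sketch below estimates how many bit errors per second a link would see at a given BER. The 1e-12 value is an assumed illustrative target, not a figure from this article or from any module specification, and the function names are hypothetical.

```python
def expected_errors_per_second(ber: float, line_rate_gbps: float) -> float:
    """Average number of bit errors per second at a given BER and line rate."""
    return ber * line_rate_gbps * 1e9


def measured_ber(bit_errors: int, bits_received: int) -> float:
    """BER estimated from a counted test run."""
    if bits_received <= 0:
        raise ValueError("bits_received must be positive")
    return bit_errors / bits_received


ASSUMED_BER = 1e-12  # assumption: illustrative target only

for rate_gbps in (400.0, 800.0):
    errors = expected_errors_per_second(ASSUMED_BER, rate_gbps)
    print(f"{rate_gbps:.0f} Gb/s at BER {ASSUMED_BER:.0e}: "
          f"{errors:.2f} errors/s, one error every {1.0 / errors:.2f} s on average")
```

At the same assumed BER, an 800 Gb/s link sees about 0.8 errors per second, twice as many as a 400 Gb/s link, so connector cleanliness and link testing carry more weight at higher speeds.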

In conclusion, the adoption of 400G/800G InfiniBand optical modules marks a pivotal step toward ultra-high-speed, efficient data centers, empowering next-generation AI and HPC workloads with unparalleled performance and scalability. As technology evolves, these modules will continue to drive innovation in global computing ecosystems.

Q: What is the difference between 400G and 800G InfiniBand optical modules?

A: 400G modules typically use the QSFP112 form factor with lower power consumption, ideal for medium-distance interconnects, while 800G modules adopt a dual-port OSFP design, offering higher bandwidth for large-scale AI clusters and supercomputers.

Q: Where are 400G/800G InfiniBand optical modules used?

A: They are widely used in AI training clusters, HPC supercomputers, and hyperscale data centers for GPU/server interconnects, as well as for high-speed inter-data center connections.

Q: What advantages does InfiniBand offer over Ethernet?

A: InfiniBand delivers ultra-low latency (<0.5 μs) and higher bandwidth utilization, and supports RDMA and zero-copy transmission, greatly enhancing AI/HPC efficiency.

Q: What challenges do these modules face, and how can they be addressed?

A: Key challenges include high power consumption, thermal management, compatibility, and supply chain risks. Solutions include liquid cooling, optimized thermal design, third-party modules, and standardized testing.

Q: What is the future outlook for 400G/800G modules?

A: With the rise of 1.6T modules, 400G/800G will serve as transitional technologies, driving data centers toward faster, smarter, and more scalable interconnect architectures.