NVIDIA, with its advanced GPU platforms, has become a leader in the fields of Artificial Intelligence (AI) and High-Performance Computing (HPC). Its flagship offerings, the DGX systems and the HGX platform, are specifically designed to accelerate AI workloads, deep learning, and large-scale data processing. They are widely used across industries such as healthcare, finance, automotive, and scientific research.

Choosing between NVIDIA DGX and HGX depends on an organization’s specific needs, such as workload requirements, scalability, and budget. This article provides a detailed comparison of the two platforms, highlighting their differences in architecture, performance, use cases, and cost to help decision-makers select the most suitable solution.

While both NVIDIA DGX and HGX are designed to accelerate AI and high-performance computing workloads, they differ fundamentally in design philosophy, deployment models, and target users. The following sections begin with their definitions and core purposes to highlight the key differences between these two platforms.

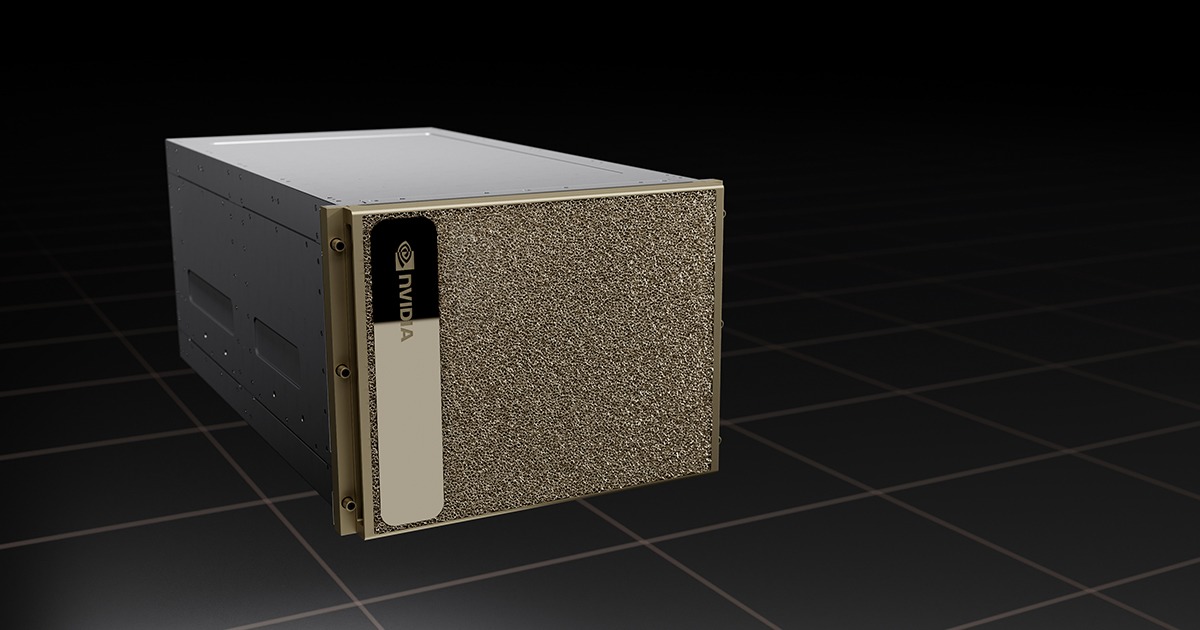

What is NVIDIA DGX?

NVIDIA DGX is an integrated, turnkey AI supercomputer designed for deep learning and AI research. It combines high-performance GPUs, pre-configured software, and optimized hardware into a single system, offering a plug-and-play solution for enterprises and research institutions.

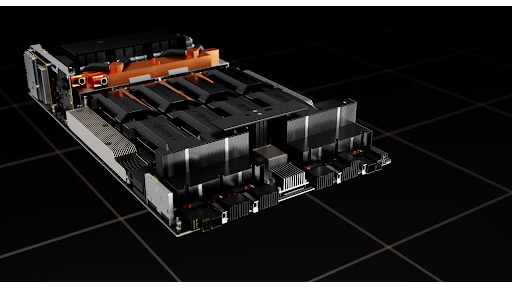

What is NVIDIA HGX?

NVIDIA HGX is a modular, GPU-accelerated platform intended for hyperscale data centers and large-scale AI applications. Unlike DGX, HGX serves as a reference architecture, allowing system integrators to build custom servers with NVIDIA GPUs, interconnects, and networking components.

DGX: Fixed Configurations

DGX systems come with pre-defined configurations, limiting customization options. This standardization ensures reliability and ease of deployment but may not suit organizations requiring specific hardware or software setups.

HGX: Scalable and Customizable Options

HGX’s modular design allows for extensive customization, enabling organizations to scale GPU count, storage, and networking based on their needs. This flexibility makes HGX suitable for hyperscale environments with diverse workloads.
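The contrast between a fixed configuration and an integrator-chosen one can be modeled as a small data structure. This is a hypothetical illustration, not an NVIDIA API: `ServerConfig`, `DGX_STYLE_FIXED`, and `build_hgx_style` are invented names, the fixed values are illustrative, and the assumption that HGX baseboards come in 4- or 8-GPU variants should be checked against the datasheet for a given GPU generation.

```python
from dataclasses import dataclass

# ASSUMPTION: HGX baseboards are offered with 4 or 8 GPUs; verify for the
# specific GPU generation before relying on this.
HGX_BASEBOARD_GPU_COUNTS = {4, 8}


@dataclass(frozen=True)
class ServerConfig:
    gpus: int        # GPUs on the baseboard
    nvme_tb: float   # local NVMe capacity in terabytes
    nic_ports: int   # number of high-speed network ports
    nic_gbps: int    # line rate per port in Gb/s


# A DGX-style system is one fixed bill of materials (illustrative values only).
DGX_STYLE_FIXED = ServerConfig(gpus=8, nvme_tb=30.0, nic_ports=8, nic_gbps=400)


def build_hgx_style(gpus: int, nvme_tb: float, nic_ports: int, nic_gbps: int) -> ServerConfig:
    """Validate a hypothetical integrator-chosen build around an HGX-style baseboard."""
    if gpus not in HGX_BASEBOARD_GPU_COUNTS:
        raise ValueError(f"unsupported baseboard GPU count: {gpus}")
    if nvme_tb <= 0 or nic_ports <= 0 or nic_gbps <= 0:
        raise ValueError("storage and networking values must be positive")
    return ServerConfig(gpus, nvme_tb, nic_ports, nic_gbps)


# Example: a smaller 4-GPU build with less storage and fewer, slower ports.
print(build_hgx_style(4, 15.0, 2, 200))
```

Freezing the dataclass mirrors the DGX side of the trade-off: once built, the configuration is not meant to change, whereas the HGX-style builder is where choices are made and validated.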

DGX: Optimized for Deep Learning

DGX is engineered for deep learning tasks, delivering exceptional performance for training large neural networks. Its integrated design and optimized software stack ensure low latency and high throughput for AI model development.

HGX: High-Performance Computing and Large-Scale AI

HGX excels in large-scale AI and HPC applications, such as scientific simulations and generative AI. Its ability to scale GPUs and leverage advanced interconnects like NVSwitch supports massive parallel processing and high-performance workloads.

NVIDIA DGX and HGX are GPU platforms designed for high-performance computing (HPC) and artificial intelligence (AI) workloads, with significant differences in their architecture and application scenarios:

DGX is NVIDIA’s fully integrated, out-of-the-box AI system, purpose-built for deep learning tasks. It offers a tightly coupled system where GPUs, CPUs, storage, and networking components are pre-optimized to work seamlessly together. This all-in-one design reduces deployment time and ensures consistent performance for AI workloads.

(1) Hardware Configuration:

(2) Software Support:

Pre-installed with the NVIDIA AI software stack (CUDA, TensorRT, DGX OS), enabling rapid deployment.

(3) Scalability:

Supports multi-node clustering (e.g., DGX SuperPOD), with high-speed interconnects via NVLink Switch or InfiniBand.


The HGX platform is built with modularity at its core, allowing data center operators to customize server configurations. HGX supports a variety of GPUs, NVSwitch interconnects, and flexible options for storage and networking—ideal for tailored HPC and AI solutions.

(1) Hardware Configuration:

(2) Flexibility:

Supports both air-cooled and liquid-cooled designs, enabling vendors to adjust hardware configurations as needed.

(3) Interconnect Technologies:

DGX systems (such as the DGX A100 and DGX H100) are typically equipped with NVIDIA ConnectX-6/7 NICs or BlueField DPUs, supporting InfiniBand (IB) or high-speed Ethernet (e.g., 200/400GbE). These network cards require optical transceivers, such as QSFP-DD or OSFP modules, to carry these high-speed links.
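The line rate these NICs provide, and the number of optical modules a node needs, can be estimated with simple arithmetic. This is a minimal sketch under stated assumptions: the port count and speed are example inputs rather than a specific DGX or HGX specification, and it assumes one single-port optical module at each end of every link (multi-port modules, such as twin-port OSFP, would reduce the count).

```python
def node_network_estimate(nic_ports: int, gbps_per_port: int) -> dict:
    """Estimate a node's aggregate network line rate and optical module count.

    Line rate only: achieved throughput is lower once protocol overhead is included.
    ASSUMPTION: one single-port optical module at each end of every link.
    """
    if nic_ports <= 0 or gbps_per_port <= 0:
        raise ValueError("port count and speed must be positive")
    total_gbps = nic_ports * gbps_per_port
    return {
        "total_gbps": total_gbps,
        "total_gbytes_per_s": total_gbps / 8,  # 8 bits per byte
        "optical_modules": nic_ports * 2,      # node end + switch end per link
    }


# Example inputs: 8 ports at 400 Gb/s (400GbE or NDR InfiniBand class links).
print(node_network_estimate(8, 400))
# {'total_gbps': 3200, 'total_gbytes_per_s': 400.0, 'optical_modules': 16}
```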

Hardware Components

Both NVIDIA DGX and HGX systems use high-performance GPUs such as the A100 or H100, and both rely on NVLink for high-speed GPU-to-GPU communication. In 8-GPU configurations, NVSwitch chips connect every GPU to every other GPU at full NVLink bandwidth; the DGX A100 and DGX H100 include NVSwitch for exactly this purpose. This high intra-system bandwidth makes both platforms well suited to deep learning training and other bandwidth-intensive applications.

Where the two differ is in how that foundation is packaged and scaled. HGX exposes the GPU baseboard, with its NVLink and NVSwitch fabric, as a building block that system integrators combine with their own CPUs, storage, networking, and cooling. This makes HGX well suited to building large-scale, multi-node AI or HPC clusters tailored to the demanding needs of modern data centers.
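The bandwidth argument can be made concrete with a little arithmetic. The sketch below multiplies NVLink link count by per-link bandwidth, using NVIDIA's published figures for the SXM versions of the A100 (NVLink 3, 12 links, 600 GB/s total) and H100 (NVLink 4, 18 links, 900 GB/s total); treat these as values to verify against the datasheet for a specific SKU. It also counts GPU pairs, which is the number of GPU-to-GPU paths a fully connected fabric must serve.

```python
# Per-GPU NVLink figures for SXM modules (total bidirectional bandwidth).
# Verify against NVIDIA's datasheet for the exact SKU before relying on them.
NVLINK_SPECS = {
    "A100": {"generation": 3, "links": 12, "gbytes_per_s_per_link": 50},
    "H100": {"generation": 4, "links": 18, "gbytes_per_s_per_link": 50},
}


def nvlink_bandwidth_per_gpu(gpu: str) -> int:
    """Total NVLink bandwidth of one GPU in GB/s (links x per-link bandwidth)."""
    spec = NVLINK_SPECS[gpu]
    return spec["links"] * spec["gbytes_per_s_per_link"]


def gpu_pairs(num_gpus: int) -> int:
    """Distinct GPU pairs a fully connected fabric must serve: n * (n - 1) / 2."""
    return num_gpus * (num_gpus - 1) // 2


for name in NVLINK_SPECS:
    print(name, nvlink_bandwidth_per_gpu(name), "GB/s")  # A100 600 GB/s, H100 900 GB/s
print("GPU pairs in an 8-GPU system:", gpu_pairs(8))     # 28
```

With 28 pairs in an 8-GPU system, wiring every pair directly would exhaust each GPU's links; routing all links through a switch fabric is what lets any GPU reach any other at full bandwidth.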

Software Stack

In terms of software support, NVIDIA DGX offers a turnkey experience with the NVIDIA AI Enterprise software suite pre-installed, which provides popular frameworks such as TensorFlow and PyTorch. For multi-system deployments, NVIDIA also provides DGX BasePOD, a reference architecture for clustering DGX systems. Together, these streamline deployment and help ensure compatibility with AI workflows.

In contrast, the HGX platform emphasizes flexibility, requiring users to integrate their own software stack—including the operating system, AI frameworks, and toolchains. While this allows for highly customized solutions tailored to specific needs, it also demands greater expertise in system configuration and software management.
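On an HGX-based build, one of the first integration checks is confirming that the operating system and driver see every GPU. The sketch below wraps the `nvidia-smi --query-gpu=... --format=csv,noheader` query; the helper names (`parse_gpu_query`, `check_gpus`, `query_gpus`) are hypothetical, the sample line is illustrative rather than captured from a real system, and the expected GPU count is an input you would set for your own server.

```python
import shutil
import subprocess

QUERY_FIELDS = "name,driver_version,memory.total"


def parse_gpu_query(csv_text: str) -> list[dict]:
    """Parse `nvidia-smi --query-gpu=name,driver_version,memory.total --format=csv,noheader`."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, driver, memory = (field.strip() for field in line.split(","))
        gpus.append({"name": name, "driver": driver, "memory": memory})
    return gpus


def check_gpus(gpus: list[dict], expected_count: int) -> list[str]:
    """Return a list of problems; an empty list means the basic checks passed."""
    problems = []
    if len(gpus) != expected_count:
        problems.append(f"expected {expected_count} GPUs, found {len(gpus)}")
    models = {gpu["name"] for gpu in gpus}
    if len(models) > 1:
        problems.append(f"mixed GPU models: {sorted(models)}")
    return problems


def query_gpus() -> list[dict]:
    """Run nvidia-smi on the local machine (requires an installed NVIDIA driver)."""
    if shutil.which("nvidia-smi") is None:
        raise RuntimeError("nvidia-smi not found; is the NVIDIA driver installed?")
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_query(result.stdout)


if __name__ == "__main__":
    # Illustrative sample in the same CSV shape; use query_gpus() on real hardware.
    sample = "NVIDIA H100 80GB HBM3, 535.104.05, 81559 MiB\n" * 8
    print(check_gpus(parse_gpu_query(sample), expected_count=8))  # []
```

Keeping parsing separate from the `subprocess` call means the checks can be exercised without GPU hardware, which is convenient when building up a custom software stack step by step.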

Connectivity and Networking

NVIDIA DGX systems come with standardized, pre-validated networking options—such as InfiniBand or Ethernet—optimized for AI workloads. These configurations ensure reliable performance out of the box.

In contrast, the HGX platform offers flexible networking choices, supporting high-speed InfiniBand, Ethernet, and custom interconnects. This flexibility allows data centers to tailor network architectures to their specific infrastructure and performance requirements.

DGX: All-in-One Cost Structure

DGX systems have a higher upfront cost due to their integrated design and pre-installed software. However, this all-in-one pricing simplifies budgeting and reduces hidden costs associated with custom builds.

HGX: Modular Pricing Flexibility

HGX’s modular nature allows for cost flexibility, as organizations can select components based on their budget. However, additional costs for integration, software, and maintenance may arise.
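The trade-off between a higher all-in-one price and lower upfront component costs plus later integration, software, and maintenance spending can be modeled as a simple total-cost-of-ownership sum. The figures below are arbitrary illustrative units, not real DGX or HGX prices, and `CostModel` and `crossover_year` are hypothetical names; the point is only that the cheaper option at purchase time can become the more expensive one over a longer period.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CostModel:
    hardware: float           # upfront purchase price
    integration: float        # one-time integration, testing, and validation work
    software_per_year: float  # recurring software licensing
    support_per_year: float   # recurring maintenance and support

    def tco(self, years: int) -> float:
        """Total cost of ownership after the given number of years."""
        recurring = self.software_per_year + self.support_per_year
        return self.hardware + self.integration + years * recurring


def crossover_year(a: CostModel, b: CostModel, max_years: int = 10) -> Optional[int]:
    """First year in which option b becomes more expensive than option a, if any."""
    for year in range(max_years + 1):
        if b.tco(year) > a.tco(year):
            return year
    return None


# Arbitrary illustrative units: software bundled with the turnkey option.
turnkey = CostModel(hardware=100, integration=0, software_per_year=0, support_per_year=8)
modular = CostModel(hardware=80, integration=10, software_per_year=5, support_per_year=6)

print(turnkey.tco(3), modular.tco(3))     # 124 123
print(crossover_year(turnkey, modular))   # 4
```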

DGX: Plug-and-Play Setup

DGX’s pre-configured design enables rapid deployment, often within hours. This ease of setup is a significant advantage for organizations prioritizing time-to-value.

HGX: Custom Integration Requirements

HGX requires significant setup time and expertise for integration, testing, and optimization. This complexity makes it better suited for organizations with dedicated IT teams.

NVIDIA DGX and HGX are AI computing solutions designed for different application scenarios, each with distinct characteristics in terms of deployment convenience, scalability, and cost structure.

The core strength of the DGX lies in its plug-and-play design, with a pre-installed, optimized deep learning software stack and standardized hardware configuration. This significantly lowers the barrier to deployment, making it ideal for enterprises or research institutions looking to quickly enter production. However, this convenience comes with trade-offs—the fixed hardware setup limits flexibility for specific requirements, and the relatively high upfront cost may be a concern for budget-conscious users.

Additionally, the fixed, closed architecture of DGX offers less flexibility for tailoring ultra-large-scale deployments, so it is often a better fit for small to mid-sized high-performance AI training environments.

In contrast, HGX adopts a modular design that supports scalability from single-node systems to massive clusters through flexible hardware combinations. It is particularly well-suited for high-performance computing (HPC) and large-scale AI model training that require customized compute resources, such as specific GPU/CPU ratios or interconnect topologies. However, this high degree of customization also means that users must have technical expertise to configure and maintain the system. This leads to longer deployment times and the potential for increased total cost of ownership (TCO) due to the complexity of integration and ongoing management.

In summary, DGX offers turnkey efficiency, while HGX excels in scalability and customization. Choosing between the two ultimately depends on how users prioritize deployment speed, technical capabilities, scalability needs, and cost considerations.

NVIDIA DGX and HGX are powerful AI platforms with distinct strengths. DGX offers a turnkey solution for deep learning, ideal for smaller organizations or those needing rapid deployment. HGX provides scalability and flexibility for large-scale AI and HPC, catering to data centers and cloud providers.

The choice between DGX and HGX depends on organizational needs. For ease of use and deep learning focus, DGX is the better option. For scalability and customization, HGX is more suitable. Assessing workload requirements, budget, and IT capabilities is crucial for making an informed decision.
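The decision factors above can be written down as explicit rules of thumb. This is a hypothetical sketch that encodes only the guidance in this article; `Requirements`, `recommend`, and especially the 256-GPU threshold for a "large-scale" deployment are illustrative assumptions, not NVIDIA guidance.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    needs_fast_deployment: bool
    has_dedicated_infra_team: bool
    needs_custom_topology: bool  # e.g. specific GPU/CPU ratios or interconnect layouts
    planned_gpus: int


def recommend(req: Requirements, large_scale_threshold: int = 256) -> str:
    """Apply the article's rules of thumb in priority order.

    ASSUMPTION: large_scale_threshold is a hypothetical cut-off, not a vendor figure.
    """
    # Customization or very large scale points to the modular platform.
    if req.needs_custom_topology or req.planned_gpus >= large_scale_threshold:
        return "HGX"
    # Limited in-house expertise or urgent time-to-value favors the turnkey system.
    if not req.has_dedicated_infra_team or req.needs_fast_deployment:
        return "DGX"
    # Neither set of signals dominates; compare budget and TCO directly.
    return "either"


print(recommend(Requirements(True, False, False, 8)))    # DGX
print(recommend(Requirements(False, True, True, 64)))    # HGX
```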

A: DGX is an integrated AI supercomputer for deep learning, while HGX is a modular platform for scalable AI and HPC in data centers.

A: DGX is better for small enterprises due to its plug-and-play setup and lower integration demands.

A: Yes, HGX can be used for deep learning but requires custom software integration, unlike DGX’s pre-optimized stack.

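A: Both use NVLink for GPU-to-GPU communication, and 8-GPU systems such as the DGX A100/H100 and 8-GPU HGX baseboards use NVSwitch to connect every GPU to every other GPU. The difference lies in packaging: DGX is a complete NVIDIA-built system, while HGX lets integrators design their own servers and multi-node clusters around the GPU baseboard.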

A: HGX may have lower initial component costs but can incur higher expenses for integration, software, and maintenance compared to DGX’s all-in-one pricing.