Organizations are under constant pressure to improve the efficiency and performance of their data centers. High-density servers are one way to meet the growing demand for computing power while conserving floor space and energy. This article examines the design and physical characteristics of high-density servers and how they provide a robust, cost-effective, and expandable foundation for the evolving data center. By looking at how these servers work, what they can deliver, and how to deploy them, readers will learn how to configure their data centers to advance business goals in an increasingly interconnected society.

High-density servers are designed to concentrate as much processing power and storage capacity as possible into the least physical space. They are generally equipped with several processors, multiple high-capacity memory modules, and cooling mechanisms that keep them working properly in tight spaces. Virtualization complements this hardware density: a single server can host numerous virtual machines or applications, which improves resource allocation and minimizes idle resources. By eliminating unnecessary overhead, high-density servers let data centers grow with the business without expanding their physical footprint, increasing both productivity and scalability.

High-density server technology saves space by combining advanced processor architectures with efficient power delivery and cooling, so that several computing units fit within a single compact enclosure. These servers also rely on virtualization and multiprocessing to run multiple operations simultaneously in separate environments on one machine. Other key components include multi-core CPUs, high-density memory, and fast network interface cards for high-volume data processing and transmission. The resulting configuration occupies minimal physical space while improving energy efficiency, providing a flexible and robust infrastructure for today’s growing data center requirements.
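The space saving described above can be made concrete with a small density calculation. The sketch below compares cores per rack unit for two chassis types. The chassis names, node counts, and core counts are hypothetical figures chosen for illustration, not specifications of any real product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chassis:
    """A server enclosure; all figures used below are hypothetical examples."""
    name: str
    rack_units: int        # height of the enclosure in rack units (U)
    nodes: int             # independent servers inside the enclosure
    cores_per_node: int    # CPU cores in each node


def cores_per_rack_unit(chassis: Chassis) -> float:
    """Compute density: total cores divided by rack units occupied."""
    return chassis.nodes * chassis.cores_per_node / chassis.rack_units


def cores_per_rack(chassis: Chassis, usable_units: int = 42) -> int:
    """Total cores when a rack is filled with a single chassis type."""
    enclosures = usable_units // chassis.rack_units
    return enclosures * chassis.nodes * chassis.cores_per_node


# Assumed example hardware: a conventional 1U server and a 2U four-node chassis.
standard = Chassis("1U single-node", rack_units=1, nodes=1, cores_per_node=32)
dense = Chassis("2U four-node", rack_units=2, nodes=4, cores_per_node=32)

for c in (standard, dense):
    print(f"{c.name}: {cores_per_rack_unit(c):.0f} cores/U, "
          f"{cores_per_rack(c)} cores in a 42U rack")
```

Under these assumed figures the multi-node chassis doubles the core count per rack unit. In practice, power and cooling limits may cap how many such enclosures a rack can actually hold.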

High-density servers improve computing efficiency by delivering more performance from the resources available within a given area. High core count processors complete several tasks simultaneously, which shortens job times and increases throughput. Larger memory capacity keeps more data in memory, where it can be accessed quickly, which is essential for memory-intensive tasks. Support for advanced virtualization improves resource management so that each server’s capabilities are fully used. Their small size and lower power consumption per unit of compute help reduce running costs and carbon footprint, which is why such servers are in high demand in data centers that want more performance within the same space. In addition, robust network interfaces support efficient data transfer across the system, enabling seamless communication between components and quicker responses during compute-intensive tasks.

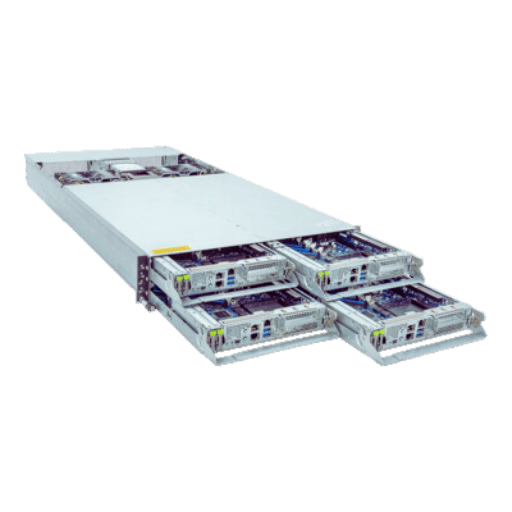

A multi-node configuration houses more than one server in a single physical enclosure, which significantly increases the computational capacity available in the same space. Because several servers, known as nodes, work together, the system gains both redundancy and parallel processing, two attributes that improve overall performance. The nodes share some common components, including power supplies and cooling systems, which reduces energy consumption and operating costs in the data center. The design is scalable, allowing more processing capability to be added without expanding the physical area, and it supports high reliability because tasks can be reassigned quickly if one node fails. These features make multi-node server architectures well suited to supercomputing tasks and large-scale data processing environments.
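The reassignment of tasks after a node failure can be sketched in a few lines. This is a minimal illustration, not how any particular cluster manager works: the function name, the node and task labels, and the least-loaded placement policy are all assumptions made for the example.

```python
def reassign_on_failure(
    assignments: dict[str, list[str]], failed: str
) -> dict[str, list[str]]:
    """Return a new assignment with the failed node's tasks moved to survivors.

    Each orphaned task goes to the surviving node that currently holds the
    fewest tasks (an assumed least-loaded policy; ties break by node name).
    The input mapping is left unmodified.
    """
    survivors = {n: list(t) for n, t in assignments.items() if n != failed}
    if not survivors:
        raise RuntimeError("no surviving nodes to take over the work")
    for task in assignments.get(failed, []):
        target = min(survivors, key=lambda n: (len(survivors[n]), n))
        survivors[target].append(task)
    return survivors


# Hypothetical three-node enclosure in which node-c fails.
before = {"node-a": ["t1", "t2"], "node-b": ["t3"], "node-c": ["t4", "t5"]}
after = reassign_on_failure(before, failed="node-c")
print(after)
```

A real scheduler would also weigh CPU, memory, and data locality, but the principle is the same: the surviving nodes absorb the work so the system keeps running.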

Multi-node servers are essential in high-performance computing (HPC) because they make it feasible to parallelize large computational tasks. They are also cost-effective, since capacity can be added node by node rather than by replacing an entire system. Multi-node servers facilitate high data transfer rates and low latency, both of which are important for fast and efficient computation in HPC. They enhance reliability and resilience through redundancy: if a node fails, the other nodes can take over its jobs. Such servers are crucial in applications including scientific computing, financial modeling, and artificial intelligence, all of which need high levels of computational power to run complex algorithms and analyze big data effectively.

Multi-node servers offer several advantages for big data solutions, particularly their ability to process large datasets in parallel and to scale out. Distributing the workload across nodes speeds up the manipulation and analysis of those datasets. Their architecture is a natural fit for big data frameworks such as Apache Hadoop and Apache Spark, which are built to spread processing across many nodes. Multi-node architectures are also highly available and fault-tolerant, so operations continue even if one of the nodes fails. This redundancy protects data and keeps it available, which is crucial during big data analytics. Costs are lower as well, because capacity grows by adding modest nodes instead of buying one large, expensive server. These properties make multi-node servers a strong choice for enterprises that want to use big data analytics to make informed decisions.
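The idea of splitting a dataset across nodes and combining partial results can be sketched as follows. This is only an illustration of the pattern: worker threads stand in for physical nodes, and the function names and the sum-of-squares job are assumptions made for the example. Frameworks such as Hadoop and Spark apply the same split, compute, and combine pattern across real machines.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(records: list[int], nodes: int) -> list[list[int]]:
    """Split records into `nodes` nearly equal chunks, round-robin."""
    return [records[i::nodes] for i in range(nodes)]


def node_job(chunk: list[int]) -> int:
    """Work done independently on one node: a partial sum of squares."""
    return sum(x * x for x in chunk)


def distributed_sum_of_squares(records: list[int], nodes: int = 4) -> int:
    """Fan the chunks out to workers, then combine the partial results."""
    chunks = partition(records, nodes)
    # Threads simulate nodes here; a real cluster would ship each chunk
    # to a separate machine.
    with ThreadPoolExecutor(max_workers=nodes) as pool:
        partials = list(pool.map(node_job, chunks))
    return sum(partials)


data = list(range(1, 101))
total = distributed_sum_of_squares(data, nodes=4)
print(total)
```

Because each chunk is processed independently, adding nodes shortens the compute step, provided the job divides cleanly and the cost of moving data does not dominate.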

High-performance servers have clear strengths compared with standard servers. They provide the dependability and efficiency needed for demanding applications such as data centers, cloud computing, and virtualization. Unlike traditional servers, which were not designed to combine high data bandwidth with minimal latency, high-performance servers can efficiently handle business workloads that require real-time data analytics. They also often include management tools that simplify integration into an existing environment and automation features that help maximize performance. Standard servers, by contrast, may be limited in scalability, flexibility, and speed, and may therefore struggle with large workloads. For companies planning to scale their infrastructure and maximize operational effectiveness, investing in high-performance servers promises to be beneficial.

The rapid growth of modern data centers has made scalability essential for meeting changing business and computational requirements. Scalability allows resources to grow or adjust under heavier loads without losing performance or efficiency. Experts in the field point to several methods for achieving scalability in data centers.

First, a modular design and architecture permits incremental growth: specific components can be added as needed with little disruption to existing operations.

Second, virtualization technologies improve resource allocation and make fuller use of hardware, allowing data centers to meet operational requirements quickly. Virtualization allows multiple virtual servers to run on a single physical server, which is cost-effective and flexible.
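To illustrate how virtualization makes fuller use of hardware, the sketch below packs virtual machines onto as few physical hosts as a simple heuristic can manage. It uses first-fit decreasing on memory alone, which is an assumed simplification. The VM names, sizes, and host capacity are hypothetical, and real placement engines also consider CPU, storage, and affinity rules.

```python
def place_vms(
    vm_memory_gb: dict[str, int], host_capacity_gb: int
) -> list[dict[str, int]]:
    """Pack VMs onto hosts with first-fit decreasing, by memory only.

    Returns a list of hosts, each a mapping of VM name to memory in GB.
    """
    hosts: list[dict[str, int]] = []
    # Largest VMs first; the name is a tie-breaker for deterministic output.
    ordered = sorted(vm_memory_gb.items(), key=lambda kv: (-kv[1], kv[0]))
    for name, mem in ordered:
        if mem > host_capacity_gb:
            raise ValueError(f"{name} needs {mem} GB, more than one host offers")
        for host in hosts:
            if sum(host.values()) + mem <= host_capacity_gb:
                host[name] = mem
                break
        else:
            # No existing host has room, so bring another one online.
            hosts.append({name: mem})
    return hosts


# Hypothetical workload: six VMs and hosts with 128 GB of memory each.
vms = {"web-1": 16, "web-2": 16, "db-1": 64,
       "cache-1": 32, "batch-1": 48, "ci-1": 24}
placement = place_vms(vms, host_capacity_gb=128)
for i, host in enumerate(placement, start=1):
    print(f"host {i}: {host} ({sum(host.values())} GB used)")
```

With these assumed sizes, six workloads that might otherwise occupy six physical servers fit on two hosts.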

Finally, software-defined networking (SDN) and storage solutions simplify resource management and enable faster responses through automation. SDN separates the network’s control functions from the underlying hardware, allowing a more scalable and manageable network architecture.

These strategies, combined with current trends and expert recommendations, create a solid foundation for building scalable modern data centers.

High-density servers, built to perform complex calculations, naturally increase energy usage within data center facilities. Experts attribute the rise in power consumption to faster processors and other energy-demanding features. As processing capacity grows, so does the need for adequate cooling, which raises energy demand further. Nevertheless, power-saving technologies and power management systems are being developed to limit these effects. Applying them balances performance against energy consumption, so that systems stay highly capable at a reasonable power cost.
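One common way to quantify the cooling overhead discussed above is power usage effectiveness (PUE), the ratio of total facility power to the power drawn by IT equipment alone. The original text does not name this metric, so the sketch below is offered as an illustration. All power figures are hypothetical.

```python
def pue(it_power_kw: float, cooling_power_kw: float,
        other_overhead_kw: float = 0.0) -> float:
    """Power usage effectiveness: total facility power divided by IT power.

    A value of 1.0 would mean every watt reaches the IT equipment;
    higher values mean more overhead for cooling, lighting, and losses.
    """
    if it_power_kw <= 0:
        raise ValueError("IT power must be positive")
    total_kw = it_power_kw + cooling_power_kw + other_overhead_kw
    return total_kw / it_power_kw


# Hypothetical facility before and after a cooling upgrade.
before_upgrade = pue(it_power_kw=400, cooling_power_kw=240, other_overhead_kw=40)
after_upgrade = pue(it_power_kw=400, cooling_power_kw=120, other_overhead_kw=40)
print(f"PUE before: {before_upgrade:.2f}, after: {after_upgrade:.2f}")
```

Tracking a ratio like this over time shows whether efficiency measures are actually reducing the energy spent on everything other than computing.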

When configuring servers in high-density environments, three specification areas must be emphasized: processor performance, memory amount, and power consumption.

Focusing on these specifications improves operating efficiency and achievable density while avoiding excessive consumption of power and cooling resources.
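Power consumption often decides how dense a rack can really be. The sketch below checks how many servers fit in a rack when both space and power are limited, and reports which limit is reached first. The function name and every figure are hypothetical, and the model ignores cooling capacity and power redundancy for simplicity.

```python
def servers_per_rack(rack_units: int, rack_power_w: int,
                     server_units: int, server_power_w: int) -> tuple[int, str]:
    """Return how many servers fit and which resource runs out first."""
    by_space = rack_units // server_units
    by_power = rack_power_w // server_power_w
    if by_power < by_space:
        return by_power, "power"
    return by_space, "space"


# Hypothetical 42U rack and a 2U server drawing 1,600 W under load.
for budget_w in (12_000, 30_000, 40_000):
    count, limit = servers_per_rack(42, budget_w, server_units=2,
                                    server_power_w=1_600)
    print(f"{budget_w} W budget: {count} servers (limited by {limit})")
```

In this example the rack is limited by power rather than space until the budget grows substantially, which is why power consumption belongs next to processor and memory in any high-density specification.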

My strategy for optimizing server deployment with modular designs revolves around three key areas, informed by the latest practices of leading authorities. First, I focus on flexibility: modularity allows server configurations to be tailored to specific tasks, improving efficiency and resource utilization. Second, I address scalability by choosing servers that can expand through added or upgraded modules as the organization’s requirements grow, without significant alterations. Finally, I simplify maintenance, because individual modules can be serviced or replaced with little downtime for the rest of the system. Along these lines, I aim to deploy an environment in which servers are both fast and sturdy enough to meet current and, more importantly, future needs.

In my analysis of high-density servers, I distinguish between three main storage types: solid-state drives (SSDs), hard disk drives (HDDs), and hybrid systems. SSDs stand out for their performance, offering short access times and low latency. HDDs, conversely, operate at lower speeds but store data at a lower cost per unit of capacity, which suits workloads that are less sensitive to latency. Hybrid systems combine the strengths of both, improving performance while retaining a large usable capacity. By choosing among these options according to the workload, one can strike a reasonable trade-off between performance, capacity, and cost.
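The trade-off between SSD speed and HDD cost can be sketched as a blended cost calculation for a hybrid layout in which only the frequently accessed ("hot") share of data lives on SSD. The prices below are placeholder values chosen for illustration, not market figures, and the function name is hypothetical.

```python
def blended_cost_per_tb(hot_fraction: float, ssd_cost_per_tb: float,
                        hdd_cost_per_tb: float) -> float:
    """Average cost per TB when `hot_fraction` of data sits on SSD.

    The remainder is assumed to sit on HDD.
    """
    if not 0.0 <= hot_fraction <= 1.0:
        raise ValueError("hot_fraction must be between 0 and 1")
    return (hot_fraction * ssd_cost_per_tb
            + (1.0 - hot_fraction) * hdd_cost_per_tb)


# Placeholder prices per TB, for illustration only.
SSD_COST = 80.0
HDD_COST = 20.0

all_ssd = blended_cost_per_tb(1.0, SSD_COST, HDD_COST)
hybrid = blended_cost_per_tb(0.2, SSD_COST, HDD_COST)
all_hdd = blended_cost_per_tb(0.0, SSD_COST, HDD_COST)
print(f"all SSD: {all_ssd:.2f}, hybrid (20% hot): {hybrid:.2f}, "
      f"all HDD: {all_hdd:.2f}")
```

The smaller the share of data that truly needs low latency, the closer a hybrid layout comes to HDD pricing while still serving hot data from SSD.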

High-density servers are well suited to virtualization, where server resources and space are optimized and reallocated in real time. First, their compact size allows many virtual machines to run on fewer physical machines, which reduces energy and cooling expenses and supports sustainability goals. Second, virtualizing the CPU and memory subsystems lets those resources be allocated according to changing needs at any given time. Finally, the architecture provides high scalability and flexibility, so applications can be provisioned and scaled out quickly when required. Together, these characteristics make virtualization more effective and improve cost efficiency in data center operations.

Density-optimized servers are designed to deliver the most computing power within the smallest space on a rack. They achieve high space efficiency thanks to their chassis designs and modular scalability features, which allow data centers to fit more hardware in the same space. Improved cooling systems limit heat buildup, allowing higher-density installations. This style of configuration saves physical space and also improves operational efficiency by simplifying power distribution and reducing wasted energy. As a result, organizations can increase the capacity of their data center without radical changes to the infrastructure, which reduces capital and operational costs.

To get more information about our specific virtualization products, please get in touch with our professional staff. According to recent information from industry leaders, virtualization today focuses heavily on efficiency and on advances in scalability. Key elements include robust cloud integration for better network flexibility, hyper-converged infrastructure for easier management and deployment, and state-of-the-art technologies to protect data. Our products reflect these developments so that your infrastructure can adapt to ever-changing technology while operating costs remain reasonable. Further questions, requests for individual consultation, or other inquiries can be directed to the contact information below or on our website, where we offer a range of services.

A: A high-density server packs more computing capability and storage into a smaller profile, which is especially useful in data center settings.

A: High-density servers are more compact and possess more computing power and storage than traditional servers, making them more energy-efficient and scalable.

A: High-density servers are pivotal in extending the computing power and storage available in a data center. They maximize space while improving the data center’s elasticity and energy efficiency.

A: High-density servers offer the elasticity and power needed to handle varied workloads and server node requirements efficiently, so they scale well in the cloud.

A: High-density servers are highly suitable for high-performance computing (HPC), edge computing, and other workloads that require robust storage space and greater computing power.

A: Designed to endure demanding working conditions, high-density servers incorporate redundant power supplies, multiple processor configuration options, and a compact chassis for improved performance and high reliability.

A: Different applications call for different hardware configurations and performance levels, which Thinkmate tailors to the workload. High-density servers in particular need specific hardware assembly and performance tuning for their target applications.

A: A compact form factor is essential for high-density servers. It lets them pack in more computing and storage power while occupying less space, which is crucial in a data center.

A: High-density servers have adopted power management and cooling solutions to improve energy efficiency while sustaining good performance.

A: High-density servers integrate well with edge computing systems. Placed close to where data is generated, they provide adequate processing power and storage, decreasing latency and enhancing efficiency.