As technology evolves, so does the demand for high-performance computing (HPC) and artificial intelligence (AI) capabilities. This blog looks at some of the most capable GPU servers available today, focusing on NVIDIA’s solutions and on systems from Supermicro and GIGABYTE. These servers are built for dedicated GPU configurations running deep learning and HPC workloads, with an emphasis on reliability, scalability, and performance. We’ll cover what sets them apart from other options on the market, walk through their key features, and look at real-world applications that show how they can drive innovation and improve efficiency in computational workloads.

How to Select an NVIDIA GPU Server for AI and HPC

What are the Fundamental Properties of a High-Performance GPU Server?

- Computational Power: Modern high-performance GPU servers are built around NVIDIA data center GPUs such as the A100 or V100 series. Each GPU has thousands of CUDA cores, and a multi-GPU server can reach petaflop-scale throughput on mixed-precision AI workloads.

- Memory Capacity: Large amounts of both system (CPU) memory and GPU memory let these machines handle enormous datasets and complex neural networks.

- Scalability: These servers support multi-GPU configurations and can scale up as computational requirements grow.

- High-Speed Interconnects: Technologies such as NVLink, InfiniBand, and PCIe Gen4 speed up communication between GPUs and between the GPUs and the rest of the system, reducing latency and raising bandwidth.

- Efficient Cooling Solutions: Liquid and air cooling systems designed for sustained heavy loads help prevent overheating and keep performance stable under extreme workloads.

- Strong Software Ecosystem: CUDA, cuDNN, TensorFlow, and PyTorch are just a few of the software stacks that support this hardware, which simplifies deploying AI and HPC applications on the same server.

- High Reliability/Redundancy Features: ECC memory alone is not enough for continuous operation, so these servers typically add redundant power supplies and hot-swappable components.

What About AMD and Intel GPUs?

- Performance: For AI and HPC jobs, NVIDIA GPUs have generally led in raw compute throughput, helped by dedicated Tensor Cores and architecture-specific optimizations. AMD and Intel GPUs use their own compute units rather than CUDA cores, so core counts are not directly comparable across vendors.

- Software Ecosystem: This is where NVIDIA stands out most, with a well-established software stack that includes CUDA, cuDNN, and other libraries optimized for machine learning workflows.

- Memory Bandwidth: NVIDIA’s data center GPUs offer very high memory bandwidth, which helps greatly when processing big datasets. AMD and Intel accelerators compete in this area too, so compare the figures for the specific models you are considering.

- Energy Efficiency: NVIDIA GPUs are known for a strong balance between power consumption and performance in demanding workloads such as deep learning and HPC, although actual efficiency depends on the model and the workload.

- Market Penetration: NVIDIA dominates the AI and HPC markets, whereas it faces stiffer competition from AMD and Intel in gaming and general-purpose computing segments.

What Is the Role of PCIe 4.0 and PCIe 5.0 in GPU Servers?

PCIe 4.0 and PCIe 5.0 raise the data rate between CPUs, GPUs, and other devices. PCIe 4.0 doubles the per-lane transfer rate of PCIe 3.0, from 8 GT/s to 16 GT/s (gigatransfers per second), and PCIe 5.0 doubles it again to 32 GT/s. For an x16 slot, that works out to roughly 32 GB/s and 64 GB/s in each direction, respectively. The extra bandwidth shortens the time needed to move large datasets between host memory and GPUs, so the interconnect is less likely to become a bottleneck in data-intensive AI and HPC workloads.
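To make the bandwidth arithmetic concrete, here is a minimal sketch that converts per-lane transfer rates into approximate x16 bandwidth. It assumes the 128b/130b line encoding used by PCIe 3.0 through 5.0 and ignores protocol overhead above that layer, so real-world throughput will be somewhat lower. The function and constant names are illustrative only.

```python
# Approximate usable PCIe bandwidth in one direction.
# Assumption: 128b/130b line encoding (PCIe 3.0 through 5.0);
# packet and protocol overhead above the encoding layer is ignored.

TRANSFER_RATE_GT_S = {"3.0": 8, "4.0": 16, "5.0": 32}  # per lane
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gb_s(generation: str, lanes: int = 16) -> float:
    """Return the approximate one-direction bandwidth in GB/s."""
    gigabits_per_s = TRANSFER_RATE_GT_S[generation] * ENCODING_EFFICIENCY * lanes
    return gigabits_per_s / 8  # convert bits to bytes


if __name__ == "__main__":
    for gen in TRANSFER_RATE_GT_S:
        print(f"PCIe {gen} x16: {pcie_bandwidth_gb_s(gen):.1f} GB/s per direction")
```

Each generation doubles the previous figure, which is why moving from a Gen3 to a Gen5 slot roughly quadruples the host-to-GPU transfer ceiling.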

Optimizing Your GPU Server for Deep Learning and AI Workloads

Which NVIDIA GPUs are Good for Deep Learning?

- NVIDIA A100 Tensor Core GPU: Performs very well thanks to Multi-Instance GPU (MIG) support, mixed-precision capability, and high memory bandwidth.

- NVIDIA V100 Tensor Core GPU: A versatile option whose high-bandwidth memory handles memory-heavy workloads, making it well suited to both training and inference on GPU-accelerated servers.

- NVIDIA RTX 3090: This card balances power with price, making it a strong choice for high-end desktop deep learning.

- NVIDIA Titan RTX: This card delivers strong deep learning performance, and its 24 GB of memory is enough for many models.

- NVIDIA Tesla T4: Inference workloads do not always need maximum compute power, so this card is designed to deliver efficient inference at low power consumption.

How to Set Up an AI Model Training GPU Server

- Choose the Correct Hardware: Pick GPUs that are optimized for AI workloads, such as NVIDIA A100 or V100 Tensor Core GPUs.

- Maximize Memory: Install enough system RAM, ideally at least as much as the total GPU memory, to handle large datasets effectively.

- Use Fast Storage: Use high-speed storage such as NVMe SSDs to reduce data retrieval times.

- Provide Efficient Cooling: Install cooling systems designed for GPU-accelerated servers to keep the GPUs at optimal temperatures and avoid thermal throttling.

- Update Drivers and Software: Keep GPU drivers current, and use builds of AI frameworks such as TensorFlow and PyTorch that match your hardware and driver versions.

- Leverage Parallelism: Use multi-GPU setups and MPI (Message Passing Interface) configurations to parallelize tasks for better performance, especially when pairing third-generation CPUs with dedicated GPUs.

- Configure the Network: Connect distributed training nodes with low-latency, high-bandwidth links so that data moves quickly between them during distributed training.
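The parallelism step above usually comes down to giving each GPU worker its own slice of the training data. The following is a minimal sketch of that idea using a simple strided split; `shard_indices`, `rank`, and `world_size` are illustrative names, and in practice a framework utility (for example, PyTorch's `DistributedSampler`) would normally handle this along with shuffling.

```python
from typing import List


def shard_indices(num_samples: int, rank: int, world_size: int) -> List[int]:
    """Return the sample indices that worker `rank` processes in one epoch.

    Uses a strided split: worker 0 takes samples 0, world_size, 2*world_size, ...
    so every sample is assigned to exactly one worker.
    """
    if world_size <= 0 or not 0 <= rank < world_size:
        raise ValueError("rank must satisfy 0 <= rank < world_size")
    return list(range(rank, num_samples, world_size))


if __name__ == "__main__":
    world_size = 4  # e.g. one worker process per GPU
    for rank in range(world_size):
        print(f"rank {rank}: {shard_indices(10, rank, world_size)}")
```

Because shards can differ in size by one sample when the dataset does not divide evenly, real training loops often pad or drop the remainder so every worker runs the same number of steps.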

Improving Server Performance with Scalable Processors

To boost GPU server performance using scalable processors, follow these technical tips:

- Choose High-Performance CPUs: Pair your GPUs with processors that combine high core counts with strong single-thread performance, such as Intel Xeon or AMD EPYC.

- Balance the CPU-GPU Ratio: Provision enough CPU cores for each GPU so that data loading and preprocessing keep every GPU busy and the workload is distributed evenly.

- Use the NUMA Architecture: Configure Non-Uniform Memory Access (NUMA) settings so that each process uses memory and CPU cores close to its GPU, which reduces latency.

- Enable Hyper-Threading and Turbo Boost: Turning these on improves multi-threaded performance and lets clock speeds rise dynamically when the workload demands it.

- Consider Memory Bandwidth: Use DDR4 or DDR5 memory so that host memory bandwidth does not limit data exchange between CPU and GPU.

- Use Efficient Scheduling: Apply scheduling algorithms that distribute work effectively across CPU and GPU resources.

- Monitor and Optimize Continuously: Track system performance with profiling tools and adjust the configuration to match workload characteristics.

Following these principles results in faster and more efficient GPU server deployments.
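As one way to picture the CPU-GPU balance and NUMA tips above, the sketch below gives each GPU worker an equal, contiguous slice of CPU cores. It assumes that contiguous core IDs sit on the same NUMA node as the matching GPU, which is not guaranteed; check the real topology (for example with `lscpu` or `nvidia-smi topo -m`) before pinning anything. `cores_for_gpu` is a hypothetical helper, not part of any library.

```python
import os
from typing import List


def cores_for_gpu(gpu_index: int, num_gpus: int, cores: List[int]) -> List[int]:
    """Give each GPU worker a contiguous, equally sized slice of CPU cores."""
    if num_gpus <= 0 or not 0 <= gpu_index < num_gpus:
        raise ValueError("gpu_index must satisfy 0 <= gpu_index < num_gpus")
    per_gpu = len(cores) // num_gpus
    if per_gpu == 0:
        raise ValueError("fewer CPU cores than GPUs")
    start = gpu_index * per_gpu
    return cores[start:start + per_gpu]


if __name__ == "__main__":
    # Cores this process may use; sched_getaffinity is Linux-only,
    # so fall back to a simple range elsewhere.
    if hasattr(os, "sched_getaffinity"):
        available = sorted(os.sched_getaffinity(0))
    else:
        available = list(range(os.cpu_count() or 1))
    # Treat the machine as a single-GPU host for this demonstration.
    print("cores for GPU 0:", cores_for_gpu(0, 1, available))
```

On Linux, a worker could then restrict itself to its slice with `os.sched_setaffinity(0, cores)`, keeping data-loading threads near the GPU they feed.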

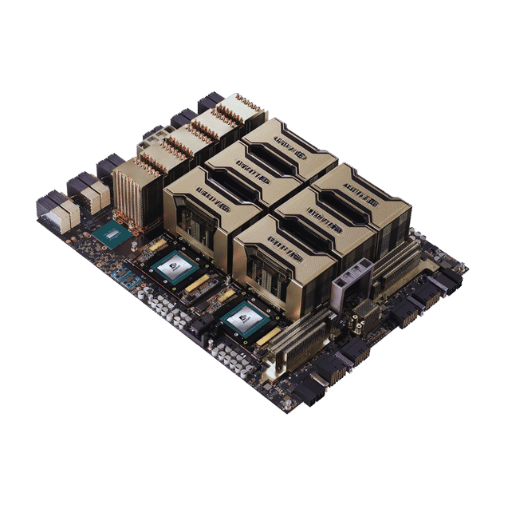

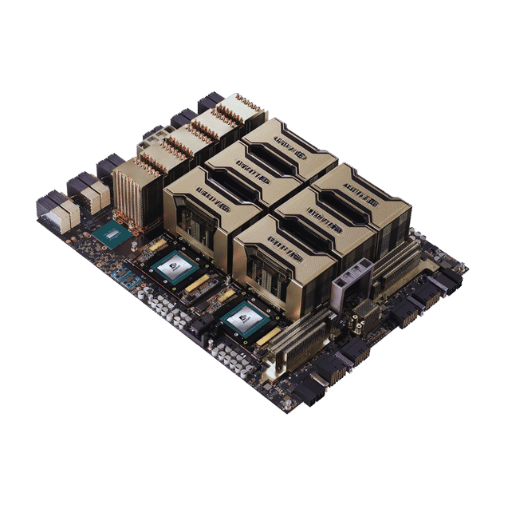

The Impact of NVIDIA H100 and A100 GPUs on High-Performance Computing

What Makes NVIDIA H100 and A100 GPUs Ideal for HPC?

Advanced design, high compute throughput, and versatile Tensor Cores make the NVIDIA H100 and A100 GPUs excellent for high-performance computing (HPC). The A100 is built on the Ampere architecture and the H100 on the newer Hopper architecture, and both deliver very strong computational performance. They support mixed-precision calculations, which can improve performance, efficiency, or both. Their large memory capacity and high memory bandwidth let them handle huge datasets efficiently during simulations. Both GPUs are also designed with AI acceleration in mind, so AI and machine learning tasks run well alongside traditional HPC workloads.

Comparing the Performance of NVIDIA H100 vs A100 GPUs

Both GPUs are used in high-performance computing, but they cannot be compared on a single axis because each suits different needs depending on the workload. On raw numbers, the H100, based on the Hopper architecture, generally outperforms the A100, based on the Ampere architecture. The two were designed with different goals in mind: Hopper adds newer Tensor Cores with FP8 support and higher memory bandwidth, while the A100 remains a capable option for many existing workloads.

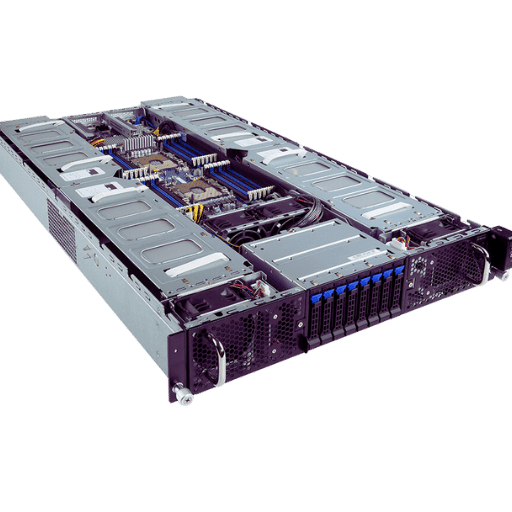

Scaling the GPU Infrastructure with GIGABYTE and Supermicro Servers

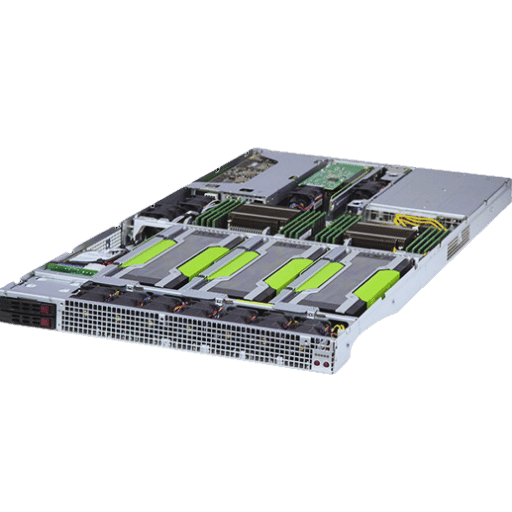

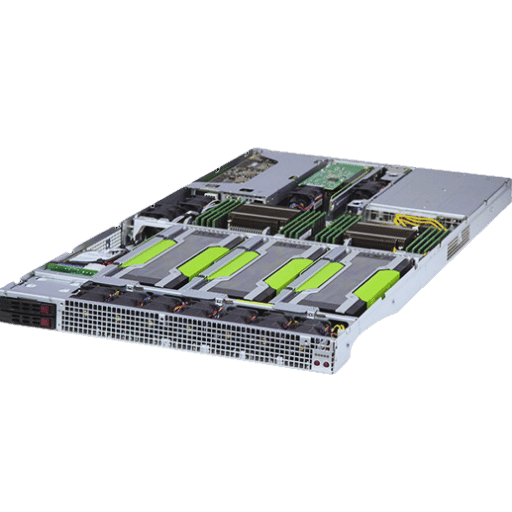

Benefits of Utilizing AI and Deep Learning Data Center Hardware from Supermicro

There are many advantages to using Supermicro servers for artificial intelligence (AI) and deep learning (DL). First, their high-density configurations make full use of available computing power in an energy-efficient way, which is essential for resource-intensive AI workloads. Their thermal design is optimized so the systems operate reliably during heavy-duty processing. Supermicro also provides scalable solutions that support the multi-GPU setups needed for large-scale model training and inference. The servers offer multiple storage options for fast data access, along with interconnects that move data rapidly between parts of the system; both matter for performance-intensive AI applications. Overall, what sets Supermicro apart from other server brands aimed at DL and AI is its focus on reliability and scalability without giving up efficiency.

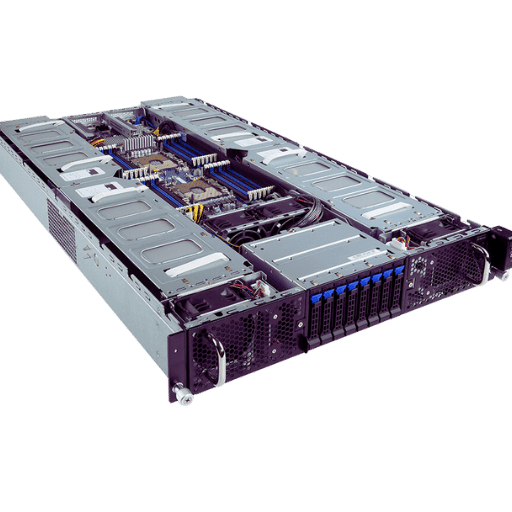

GIGABYTE’s Contribution Towards GPU Accelerated Computing

GIGABYTE contributes to GPU-accelerated computing in several ways. First, its server architectures are optimized for performance and efficiency, making them well suited to hosting multiple GPUs or other accelerator cards in a single chassis. Second, it offers customizable system configurations that can be tailored to specific AI or HPC requirements. Third, its cooling designs are robust enough to keep GPUs within safe temperatures throughout sustained, intensive operation. GIGABYTE also supports a wide range of GPU models, which adds flexibility across deployments from graphics-heavy systems to scientific research that needs large amounts of computation. Lastly, GIGABYTE servers offer advanced networking options for fast data transfer and low-latency communication across the computing infrastructure. These enable the real-time processing needed for large streaming datasets, such as live video feeds or sensor networks whose measurements must be analyzed as they are generated.

Establishing a Scalable GPU System in a Data Center

There are some important steps to follow when setting up a scalable GPU system:

- Requirements Evaluation: Assess the number of GPUs needed, the nature of the tasks, and the performance goals.

- Choosing the Right Hardware: Select servers with optimized GPU support, such as Supermicro or GIGABYTE servers, which offer both compatibility and scalability.

- Network Configuration: Use high-speed interconnects such as InfiniBand or high-speed Ethernet for fast, low-latency communication.

- Thermal Management: Implement advanced cooling solutions to maintain optimal operating temperatures and extend hardware life.

- Storage Solutions: Integrate high-speed storage that provides quick data access and can serve large datasets concurrently.

- Scalability Planning: Design the infrastructure with future growth in mind so that more GPUs and other resources can be added seamlessly.

- Implementation & Testing: After installation and configuration, test rigorously for stability and performance.

These steps help build a robust, efficient, and scalable GPU infrastructure suited to AI and HPC applications.

Configuring Servers with NVIDIA GPU for Better Performance

Steps to Optimize Your NVIDIA GPU Server for Different Workloads

- Install the Latest Drivers and Software: Make sure you have up-to-date NVIDIA drivers and supporting software, and that their versions are compatible with one another.

- Adjust BIOS Settings: Choose the BIOS power profile deliberately. A performance-oriented profile suits sustained GPU workloads, while power-saving options that power down idle cards fit servers that sit unused for long periods. Confirm that the GPU’s PCIe slot runs at its maximum x16 width, and leave memory allocation options on “Auto” or “Fixed” as your platform vendor recommends.

- Enable GPU Boost: NVIDIA’s GPU Boost technology automatically raises clock speeds when headroom is available, so you get more out of each card in dedicated GPU systems.

- Configure CUDA Settings: Tune the launch parameters in order of priority: device count, threads per block, then number of blocks per grid. Try different combinations and benchmark them, since the best values depend on the GPU’s compute capability and on the kernel itself.

- Implement Resource Isolation: Use NVIDIA’s container tooling (nvidia-docker2, now superseded by the NVIDIA Container Toolkit) to isolate GPU resources among containers on GPU-accelerated servers. This helps allocate GPUs efficiently across containerized tasks.

- Memory Management: CUDA Unified Memory can simplify memory allocation and transfers between CPU and GPU. This is particularly convenient in environments with a single dedicated GPU.

- Monitor and Analyze Performance: Continuously monitor the system with the nvidia-smi command-line utility and NVIDIA’s profiling tools, and adjust the configuration based on what the readings show.

- Thermal and Power Management: In server rooms with limited cooling capacity, make sure heat from the GPUs is dissipated properly. Use nvidia-smi to monitor temperatures and power consumption. If necessary, install additional fans or liquid cooling to prevent overheating while maintaining performance.
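The launch-configuration step in the list above involves a small piece of arithmetic: for a given number of elements and threads per block, the grid needs enough blocks to cover every element. The sketch below shows that ceiling division for a few candidate block sizes. The element count and candidate sizes are arbitrary examples, and the right choice still has to be confirmed by benchmarking the actual kernel.

```python
def blocks_per_grid(num_elements: int, threads_per_block: int = 256) -> int:
    """Smallest number of blocks that covers every element (ceiling division)."""
    if num_elements < 0 or threads_per_block <= 0:
        raise ValueError("num_elements must be >= 0 and threads_per_block > 0")
    return (num_elements + threads_per_block - 1) // threads_per_block


if __name__ == "__main__":
    n = 1_000_000  # example problem size
    # Candidate block sizes; multiples of the 32-thread warp size.
    for tpb in (128, 256, 512, 1024):
        blocks = blocks_per_grid(n, tpb)
        idle = blocks * tpb - n  # threads in the last block with no element
        print(f"{tpb:>4} threads/block -> {blocks:>5} blocks, {idle} idle threads")
```

Because the last block is usually only partly used, a kernel launched this way needs a bounds check so that the extra threads do nothing.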

Matching CPU and GPU Power for the Best Performance

To get the most from a GPU server, CPU and GPU power must be balanced, and that starts with understanding what the workloads need and how the system behaves. Profile your applications first so you know how much computation each component handles. Then distribute tasks so that neither the CPU nor the GPU becomes a bottleneck, using parallelism where applicable to keep both devices consistently busy. Workload management techniques help here; for example, NVIDIA’s Multi-Process Service (MPS) lets multiple processes share a GPU so its resources are used more fully. Power management tools can also adjust power limits dynamically, maintaining consistent performance while reducing energy use. Finally, monitor and analyze performance metrics regularly so that problems are caught early and the system keeps running efficiently.

Software and Tools to Manage NVIDIA GPU Servers

The following software and tools are necessary for managing NVIDIA GPU servers:

- NVIDIA CUDA – A parallel computing platform and programming model.

- NVIDIA Container Toolkit – Enables containerized applications that use GPUs for acceleration.

- NVIDIA TensorRT – Optimizes deep learning inference apps.

- NVIDIA Nsight Systems – Provides in-depth performance analysis and profiling capabilities.

- NVIDIA Management Library (NVML) – A C-based API used to monitor different states of the NVIDIA GPUs.

- NVIDIA Data Center GPU Manager (DCGM) – A suite of tools for managing and monitoring GPU servers within data centers.

- Kubernetes with GPU Support – Used to orchestrate containerized applications that leverage the power of GPUs for acceleration.

- Prometheus + Grafana – For real-time monitoring and visualization of system metrics.

- Slurm – Workload manager and job scheduler that integrates with GPU clusters.

- PyTorch/TensorFlow – Deep learning frameworks that use GPUs for accelerated training and inference.
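As a small illustration of the monitoring side of this list, the sketch below reads temperature, power draw, and utilization from `nvidia-smi` in CSV form and flags GPUs above a temperature limit. The 85 °C threshold and the helper names are assumptions made for this example, not NVIDIA recommendations; production setups would more likely rely on DCGM or NVML with Prometheus and Grafana.

```python
import csv
import io
import subprocess
from typing import Dict, List

QUERY_FIELDS = ["index", "temperature.gpu", "power.draw", "utilization.gpu"]


def _to_float(value: str) -> float:
    """Convert a CSV field to float; unsupported readings become NaN."""
    try:
        return float(value)
    except ValueError:
        return float("nan")


def parse_gpu_csv(text: str) -> List[Dict[str, float]]:
    """Parse `nvidia-smi --format=csv,noheader,nounits` output into dicts."""
    rows = []
    for record in csv.reader(io.StringIO(text), skipinitialspace=True):
        if record:  # skip blank lines
            rows.append({f: _to_float(v) for f, v in zip(QUERY_FIELDS, record)})
    return rows


def query_gpus() -> List[Dict[str, float]]:
    """Run nvidia-smi and return one dict of readings per GPU."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={','.join(QUERY_FIELDS)}",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_csv(result.stdout)


def hot_gpus(rows: List[Dict[str, float]], limit_c: float = 85.0) -> List[int]:
    """Return indices of GPUs above `limit_c` (an example threshold)."""
    return [int(r["index"]) for r in rows if r["temperature.gpu"] > limit_c]


if __name__ == "__main__":
    try:
        print("GPUs over limit:", hot_gpus(query_gpus()))
    except (FileNotFoundError, subprocess.CalledProcessError) as exc:
        print("nvidia-smi not available:", exc)
```

Keeping the parsing separate from the `nvidia-smi` call makes the logic easy to test on machines without a GPU.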

Frequently Asked Questions (FAQs)

Q: What are the benefits of utilizing High-Performance GPU Servers for AI, HPC, and Deep Learning?

A: They offer substantial computing power for resource-intensive tasks such as AI, HPC, and deep learning. Their dedicated GPUs accelerate data processing and model training, which leads to faster insights and results.

Q: How do NVIDIA-based Supermicro and GIGABYTE servers support AI and machine learning applications?

A: NVIDIA-based Supermicro and GIGABYTE servers combine powerful GPUs with high-performance components designed for AI and machine learning applications. Their processing power and memory bandwidth let them handle complex AI algorithms and large datasets efficiently.

Q: What advantage does a 4U rackmount server have in AI applications?

A: 4U rackmount servers provide room for high-performance components such as multiple GPUs, more capable cooling systems, and larger storage capacity. This makes them well suited to AI workloads that require heavy computation.

Q: What role do Intel® Xeon® Scalable processors play in these servers?

A: Intel® Xeon® Scalable processors deliver fast, efficient performance along with security features, which makes them a good fit for servers used in AI, HPC, and deep learning. They support large memory configurations, so they can handle demanding workloads, especially on GPU-accelerated servers that need substantial processing power.

Q: What are the features of the NVIDIA A100 GPU in these AI servers?

A: The NVIDIA A100 GPU is designed for high performance across AI and deep learning tasks, and it brings significant improvements to projects that combine HPC and AI. It accelerates both training and inference workloads, which shortens processing time and the time to insight. The A100 also supports Multi-Instance GPU (MIG) technology, which allows several networks to be trained or served concurrently.

Q: What effect does PCIe 5.0 x16 have on the performance of servers?

A: Compared with earlier PCIe generations, PCIe 5.0 x16 offers much higher data transfer rates. This lets the CPU, GPU, and other server components communicate more quickly, which reduces transfer delays and improves overall performance, especially in AI and HPC applications that process large amounts of data.

Q: Are these servers able to be used in virtual environments?

A: Yes. These high-performance GPU servers work well in virtual environments: they provide enough computing power for virtualized workloads and can run multiple VMs efficiently, which makes them suitable for a wide range of applications across data centers and cloud infrastructures.

Q: What characteristics do AMD EPYC™ processors possess which make them suitable for these uses?

A: AMD EPYC™ processors offer high core counts, strong I/O capabilities, and advanced memory support, which makes them well suited to AI, HPC, and deep learning applications. They deliver strong multi-threaded performance and handle high-throughput data processing tasks well.

Q: What kind of server motherboards are used with these high-performance GPU servers?

A: The motherboards in these systems are designed to support several GPUs at once, fast memory, and high-speed data transfer interfaces. Robust connectivity options let them maintain reliability and performance in 4U or 2U rack configurations.
