Finding the right rackmount server configuration and size can be challenging given the number of options available. For a small business as much as for a large-scale data center, choosing the right server form factor is critical to achieving optimal performance, scalability, and space efficiency. This article examines the range of rackmount servers from 1U to 4U profiles and helps you determine the ideal server for your organization. We’ll discuss the key considerations, distinctive attributes, and advantages of each size so you are adequately informed. By the end, you should have what you need to build a powerful, well-structured server environment.

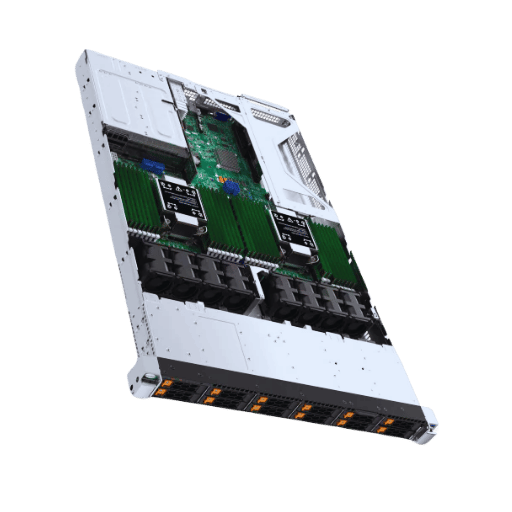

A rackmount server is a class of server designed to be installed in a fixed rack structure, as commonly used in data centers and IT facilities. It is a compact, horizontally mounted device whose height is measured in rack units, or “U.” Essential features of rackmount servers include economical use of physical space, high modularity, improved cable management, consistent airflow, easier maintenance, and advanced cooling, which together make it easier to support heavy computing workloads in a confined space.

Rackmount servers are built to standard dimensions so they fit into rack systems, with height defined in rack units (U), where 1U equals 1.75 inches. They are available in several sizes, such as 1U, 2U, and 4U, which lets you scale to match computing needs. Rack units are designed to make efficient use of space within data centers and to allow easy access for servicing and upgrading. The standard form factor also simplifies modular integration with other industry-standard components, so systems can be assembled and used in a variety of settings.
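
The rack-unit arithmetic above is simple enough to sketch in a few lines of Python. Only the 1.75-inch unit height comes from the text; the 42U rack height and the two units reserved for switches or patch panels are illustrative assumptions.

```python
RACK_UNIT_INCHES = 1.75  # height of 1U, as stated in the text


def chassis_height_inches(units: int) -> float:
    """Return the height in inches of a chassis that is `units` U tall."""
    return units * RACK_UNIT_INCHES


def servers_per_rack(rack_units: int, server_units: int, reserved_units: int = 0) -> int:
    """Return how many servers of a given height fit in a rack.

    `reserved_units` is space set aside for other gear (an assumption
    made for illustration, e.g. switches or patch panels).
    """
    if server_units <= 0:
        raise ValueError("server_units must be positive")
    usable = rack_units - reserved_units
    if usable < 0:
        raise ValueError("reserved_units exceeds rack height")
    return usable // server_units


if __name__ == "__main__":
    # Assumed rack: 42U tall with 2U reserved (hypothetical values).
    for size in (1, 2, 4):
        count = servers_per_rack(42, size, reserved_units=2)
        print(f"{size}U: {chassis_height_inches(size):.2f} in tall, {count} per rack")
```

Under these assumptions a rack holds 40 1U servers, 20 2U servers, or 10 4U servers, which shows how quickly density falls as chassis height grows.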

For modern infrastructures, rack servers offer numerous advantages, making them vital in data centers as well as enterprise networks. The critical advantages are highlighted below:

High-Density Computing

Rack servers stand out among devices that optimize space usage within a data center. Businesses can stack multiple servers vertically in standard 19-inch racks, which significantly increases the ratio of computing power to floor space. A single rack can accommodate dozens of servers, which enables high-performance computing environments.

Scalability and Modularity

Rack servers let IT infrastructure evolve with organizational requirements. Individual servers can be added, removed, or replaced to adjust to changing workloads without significant interruption, and the same approach accommodates expansion. This modular method improves cost efficiency and simplifies upgrades.

Efficient Cooling and Power Management

Rack servers possess advanced cooling methods, including dedicated fans and airflow management designs that lessen heat buildup. Energy costs can also be reduced by using the centralized power supply options available for rack configurations. Some studies reportedly show that data centers employing optimized rack server configurations have reduced power consumption by as much as 20%, which also supports sustainability targets.

Enhanced Performance and Reliability

Rack servers can integrate the latest processors, memory modules, and storage technologies for demanding applications. Many models offer redundancy features such as dual power supplies or RAID-configured storage, which keep the system running through component failures.

Streamlined Maintenance and Accessibility

Slide-out trays and rail designs let technicians access an individual server quickly without disturbing other servers in the rack. This maintenance efficiency translates into reduced downtime and improved operational continuity in high-availability environments such as web hosting or financial applications.

Industry Standardization and Compatibility

Adherence to standardized dimensions fosters compatibility with a wide range of hardware components, networking devices, and software platforms. Whether deployed on-premises or as part of a hybrid cloud, this universality promotes interoperability and simplifies deployment across varying IT environments.

With these benefits, businesses can build a resilient, adaptable, and scalable IT architecture that supports their operational objectives while keeping costs in check and leaving room for future innovation.

When choosing a type of server, businesses typically consider scalability, space, efficiency, performance, and cost while evaluating tower and rackmount servers. Each system offers unique benefits suitable for different operational needs.

Rack Mount Servers

These servers are engineered to fit within standard server racks, making them a great solution for businesses that need scalability and highly efficient use of space. They tend to be used in data centers and enterprise IT settings where large server deployments are a must. Rack mount servers are typically 1U to 4U in height, which provides flexibility in configuration and rack space planning.

Benefits:

Applications:

Tower Servers

Tower servers are independent units that resemble traditional desktop computers but are built specifically for server tasks. As standalone units, they keep costs low for small to medium businesses and for individuals with limited IT infrastructure.

Advantages:

Use Cases

Key Considerations

Physical space: Tower servers are easier to set up and work well in open office areas, whereas rackmount units suit those who are short on room.

Performance: Environments with heavy workloads favor rackmount servers, which are modular and easy to scale and deploy.

Budget: Tower servers suit smaller setups and tighter budgets, at the cost of some flexibility.

In the end, the final choice depends on the priorities of the specific business. Evaluating factors such as available space, anticipated workloads, and long-term growth can help determine the right option.

1U rackmount servers are an economical, compact option for businesses that want to save data center space while maintaining productivity. Here are a few of their notable features:

“By combining performance, a compact structure, versatility, and high density, 1U servers fill an essential role in modern IT infrastructure.”

The latest 1U servers pair multi-core processors with large amounts of RAM and NVMe SSDs, and some add liquid cooling, delivering strong performance in a compact chassis. These high-density units are designed to limit energy use while keeping cooling effective under modern workloads. Processors such as AMD EPYC or Intel Xeon Scalable are commonly used, with up to 96 cores per socket and support for terabytes of memory, which suits ERP, CRM, and other compute-intensive workloads.

Modern IT teams want energy efficiency combined with versatility. With adequate rack-level cooling, dense 1U configurations are claimed to save up to 40% of rack space compared with larger form factors.

Support for advanced networking interfaces, such as 25 GbE, 40 GbE, or 100 GbE, provides faster data transfer rates, which makes these servers suitable for virtualization, cloud hosting, and high-performance computing (HPC) workloads. In addition, many models now offer IPMI and proprietary management tools that allow remote monitoring, which reduces downtime and improves efficiency.

These features all come together to highlight the importance of 1U servers in high-density, high-performance computing in today’s IT architecture. This helps organizations expand their IT resources in a cost-effective manner while fulfilling operational needs driven by digital transformation.

Cooling systems and power supplies are central to the reliability and operational efficiency of 1U servers. A strong power supply averts system failures caused by power interruptions, tripped circuits, or voltage fluctuations by keeping energy delivery steady. 1U servers usually feature redundant power supply units (PSUs), which improve uptime by providing backup power if one unit fails.
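
A quick way to reason about PSU redundancy is to check whether the remaining units can still carry the load after one fails. The sketch below assumes a simple worst case (the largest PSU is the one that fails); the function name and the wattage figures are hypothetical and not taken from any vendor specification.

```python
def survives_single_psu_failure(psu_watts: list[float], load_watts: float) -> bool:
    """Return True if the load is still covered after the largest PSU fails."""
    if len(psu_watts) < 2:
        return False  # a single PSU offers no redundancy
    # Worst case: the unit with the highest rating is the one that fails.
    remaining = sum(psu_watts) - max(psu_watts)
    return remaining >= load_watts


if __name__ == "__main__":
    # Hypothetical 1U server with two 800 W PSUs.
    psus = [800.0, 800.0]
    for load in (650.0, 900.0):
        ok = survives_single_psu_failure(psus, load)
        print(f"{load:.0f} W load, redundant: {ok}")
```

With two 800 W units, a 650 W load stays redundant, while a 900 W load runs only as long as both PSUs are healthy.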

Cooling systems are crucial for managing heat in compact 1U servers. Properly configured and consistently maintained, advanced cooling such as optimized airflow designs, high-performance fans, and dedicated heat sinks can keep operating temperatures safe. Besides protecting the server's components, these measures ensure consistent performance and prolong hardware lifespan.

In terms of balancing performance, expandability, and rack space, 2U rackmount servers are strong performers. At double the height of 1U servers, 2U servers allow more flexible hardware arrangements, making them suitable for demanding workloads and varied deployment scenarios. Compared with 1U servers, 2U servers usually offer better cooling, more memory capacity, and additional expansion possibilities.

A standard 2U server can host multiple hard drives or solid-state drives, often with as many as 24 2.5-inch drives or 12 3.5-inch drives, depending on the model. As a result, it is ideal for data-intensive operations such as virtualization, database management, and extensive backup solutions. Furthermore, many 2U servers offer superior cooling because of the larger chassis; the improved airflow and heat dissipation suit high-performance computing components.
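
To compare the two drive layouts mentioned above, it helps to work out raw and usable capacity. The bay counts come from the text; the per-drive sizes (8 TB and 20 TB) and the choice of RAID 6 are assumptions made purely for illustration.

```python
def raw_capacity_tb(bays: int, tb_per_drive: int) -> int:
    """Total capacity with every bay populated and no redundancy."""
    return bays * tb_per_drive


def raid6_usable_tb(bays: int, tb_per_drive: int) -> int:
    """Usable capacity under RAID 6, which spends two drives' worth on parity."""
    if bays < 4:
        raise ValueError("RAID 6 needs at least four drives")
    return (bays - 2) * tb_per_drive


if __name__ == "__main__":
    # Bay counts from the article; drive sizes are hypothetical.
    layouts = {
        "24 x 2.5-inch": (24, 8),
        "12 x 3.5-inch": (12, 20),
    }
    for name, (bays, size_tb) in layouts.items():
        raw = raw_capacity_tb(bays, size_tb)
        usable = raid6_usable_tb(bays, size_tb)
        print(f"{name}: {raw} TB raw, {usable} TB usable with RAID 6")
```

With these assumed drive sizes, the 3.5-inch layout offers more raw capacity (240 TB versus 192 TB), while the 2.5-inch layout spreads I/O across twice as many drives.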

Modern 2U servers come with state-of-the-art features such as redundant power supply units, NVMe storage for quicker data transfer, and higher memory bandwidth, so they can handle demanding tasks. They adapt readily to the flexible and scalable needs of modern enterprises, institutions, and IT frameworks, and are often used in enterprise environments for cloud computing, machine learning, and big data analytics.

2U rackmount servers meet both present and future business needs by providing a blend of performance and expandability within a reasonable size.

4U rack servers stand out for their high-performance computing capability and significant expansion capacity for enterprises. Their primary benefits are listed below:

Greater Expandability

4U servers offer additional chassis space that can be used to install a higher number of components like upgraded GPUs, additional hard drives, and multiple expansion cards. This is particularly useful for resource-demanding activities like data warehousing or training large AI models.

Advanced Cooling Efficiency

The added size of 4U servers improves airflow for cooling, providing better thermal management and protecting the server from overheating, even when under heavy loads. The enhanced cooling contributes to increased reliability and longevity of the hardware.

Exceedingly High Storage Capacity

The numerous drive bays available with a 4U server facilitate far higher storage capacity in comparison to smaller rackmount servers. It is suited for applications like video rendering or big data analytics that require extensive data storage because it can house many SSDs or HDDs.

Hardware Configuration Flexibility

Having a larger chassis permits more adaptable server configurations, allowing tailored components such as specific processors, RAM, and GPUs to be integrated to meet operational requirements. This is useful for businesses that need an agile IT solution because their needs change constantly.

Options With Higher Redundancy

The 4U server's extensive internal space makes room for more redundant configurations, for example, extra power supply units and elaborate failover systems. These features increase system uptime and availability.

Accommodating High Power Needs

4U servers can house components with higher power requirements, such as high-end GPUs and field-programmable gate arrays (FPGAs). This allows them to perform exceptionally well in computation-intensive tasks such as sophisticated simulations or machine learning.

Maintenance Simplified

The design of the 4U servers is user-friendly. It is easy to perform upgrades, repairs, and routine maintenance due to the server’s accessible interior features. Components in the server’s spacious housing are easy to reach which reduces the time needed for routine and emergency maintenance.

Diverse Uses

4U rack servers can be employed in numerous roles, including but not limited to database management, virtualization, AI, scientific research, and enterprise resource planning (ERP) systems.

Together, the features outlined above make 4U rack servers a worthwhile investment for businesses that need flexible scaling of IT resources, dependable system reliability, and strong server performance at the same time.

When customizing a server chassis to fit distinct needs, I first evaluate the workload and operating environment. This involves choosing dimensions, cooling, power, and drive configurations that fit together and work seamlessly. I also weigh factors such as scalability, maintenance, and integration with the information systems already in place to refine the approach. Adjusting the chassis specifications in this way supports organizational objectives and delivers reliability and efficiency.

Both Intel® Xeon® and AMD EPYC™ processors have a distinct impact on server performance, and each has areas where it holds the advantage. In my view, Intel Xeon processors excel in single-threaded performance and benefit from many application optimizations that rely on high clock speeds and broad ecosystem support. AMD EPYC processors, conversely, are exceptional in multi-threaded performance and are well suited to virtualization workloads, data analytics, and cloud computing thanks to their architecture and higher core counts. I base decisions on performance benchmarks and workload characteristics to choose the processor series that best suits the operational needs.

Choosing the right processor entails a detailed assessment of business-critical performance indicators and of how specific applications map to workload requirements. Intel Xeon processors particularly shine in single-threaded performance and are therefore well suited to applications with sequential processing dependencies. Newer benchmarks report that 4th Gen Intel Xeon Scalable processors perform exceptionally well in high-frequency trading and real-time analytics. These processors also offer a wide range of memory options and are tuned for high-throughput, I/O-intensive workloads.

On the other hand, AMD EPYC processors offer very strong multi-threaded performance. The newest EPYC processors, like the AMD EPYC 9004 series, feature up to 96 cores per chip, which greatly enhances parallel processing capability. This makes them well suited to complex problem-solving tasks, especially data analytics, artificial intelligence, and virtualization. In addition, AMD EPYC processors feature enhanced memory bandwidth, PCIe 5.0 support, low latencies, and a scalable architecture, which makes them a strong fit for data-intensive tasks.

Furthermore, energy efficiency and return on investment affect decision-making. AMD EPYC processors are designed for efficiency, and their power consumption per unit of work is often cited as lower than that of Intel counterparts, helping organizations achieve a lower total cost of ownership (TCO). On the other hand, Intel Xeon includes workload-specific accelerators, such as Advanced Matrix Extensions (AMX) and other AI acceleration engines, which improve performance on certain tasks like AI inferencing and can also reduce operational cost.

When selecting between these two processor families, evaluating workload characteristics is critical: the balance between single-threaded execution speed and multi-threaded scalability, memory bandwidth, and power consumption all matter. Combining these considerations with strategic alignment leads to a processor choice tailored for sustained performance and business value.

While examining scalable processor choices, the alignment of specific workloads and operational requirements to appropriate processors needs to be evaluated through multiple technical and performance metrics. For instance, modern processors like the latest offerings from Intel Xeon and AMD EPYC families emphasize scalability for purposes such as cloud computing, virtualization, AI-driven applications, and even data analysis.

Performance Metrics:

Core Count and Threading:

With up to 128 cores in some models, AMD EPYC processors accommodate multi-threaded and parallelized workloads as well as high-performance computing (HPC). Intel Xeon processors, on the other hand, take a more balanced approach to cores and threads and offer up to 60 cores in some models, which lets them handle both heavily multi-threaded applications and single-threaded applications that depend on per-core efficiency.

Memory Bandwidth:

Workloads such as in-memory databases, large datasets, and AI training benefit from the high per-socket memory bandwidth of AMD EPYC processors, which comes from DDR5 support and a larger number of memory channels. Intel Xeon platforms, for their part, have supported technologies such as Intel Optane memory, which expands memory capacity and provides quicker data access in various situations.

Power Efficiency

Cost-efficient scalability hinges on thermal and energy efficiency. AMD EPYC chips emphasize energy-conscious design, with features that optimize power consumption at the core level for better value in power-intensive, high-density data centers. Likewise, Intel Xeon processors integrate energy-saving technologies such as Intel Speed Shift and deep power-down states to optimize energy use during periods of lower utilization.

PCIe Support:

Peripheral Component Interconnect Express (PCIe) lanes are vital for connecting GPUs, NVMe storage, and network cards. AMD EPYC processors feature abundant PCIe lanes, with the latest models offering up to 128 lanes, which enhances scalability for AI, ML, and high-bandwidth storage servers. Intel Xeon processors also support PCIe 5.0, keeping pace with evolving hardware compatibility needs.
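
Lane counts matter because every GPU, NVMe drive, and network card draws from the same pool. The sketch below checks a planned build against the 128-lane figure quoted above. The per-device link widths (x16 for a GPU or NIC, x4 for an NVMe SSD) are typical values assumed for illustration, and the device mix is hypothetical.

```python
# Typical link widths, assumed for illustration; actual devices vary.
LANES_PER_DEVICE = {"gpu": 16, "nvme_ssd": 4, "nic": 16}


def lanes_required(devices: dict[str, int]) -> int:
    """Sum the PCIe lanes needed by a mix of devices (kind -> count)."""
    return sum(LANES_PER_DEVICE[kind] * count for kind, count in devices.items())


def fits_lane_budget(devices: dict[str, int], available_lanes: int = 128) -> bool:
    """Return True if the device mix fits within the available lanes."""
    return lanes_required(devices) <= available_lanes


if __name__ == "__main__":
    # Hypothetical build: 4 GPUs, 12 NVMe SSDs, and 1 network card.
    build = {"gpu": 4, "nvme_ssd": 12, "nic": 1}
    print(lanes_required(build), "lanes needed; fits:", fits_lane_budget(build))
```

This example build uses exactly 128 lanes, so adding even one more NVMe drive would need a PCIe switch, a second socket, or narrower links.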

Performance Benchmarks in an Industry Context:

As per the benchmarks performed on different workloads:

AMD EPYC processors have shown 20 to 40 percent greater performance per watt than previous-generation processors, allowing companies to cut TCO significantly in cloud infrastructures.

Intel Xeon processors offer competitive single-threaded performance in common business applications, along with robust security features such as SGX (Software Guard Extensions) for confidential computing environments.

Predicted Developments in Scalability:

How well processors hold up in future computing landscapes depends on how well they integrate new technologies such as AI accelerators, hardware security, and edge computing support. Both Intel and AMD continue to advance in these areas to ensure their processors are ready for next-generation workloads.

Thinking through these elements carefully not only enables immediate performance benefits but also positions an organization for long-term adaptability, expenditure efficiency, and dependable value in IT environments which change rapidly.

GPU servers are becoming a pillar of modern data center architecture because of their strength in intensive computation. GPUs excel at parallel tasks, unlike traditional CPU architectures, which are geared toward sequential work. This parallel efficiency is particularly valuable in fields such as artificial intelligence (AI), machine learning (ML), high-performance computing (HPC), and real-time analytics. For example, NVIDIA A100 Tensor Core GPUs are claimed to deliver up to 20x the AI training performance of the previous generation.

Virtualization, on the other hand, is a technology that partitions the resources of a single machine so that multiple users can share them simultaneously. With GPU virtualization, several virtual machines (VMs) can use one physical GPU, which lets workloads such as AI inferencing, desktop virtualization, and 3D rendering scale. Companies such as VMware and NVIDIA have created enterprise-class GPU virtualization offerings aimed at large organizations.

Current market research suggests the worldwide GPU market is anticipated to expand at a CAGR of 33.6% between 2023 and 2028, which highlights the growing dependence on GPU-powered infrastructure. Furthermore, hybrid cloud models combine virtualization with GPU capabilities and enable even more agile deployment and scaling across private and public cloud infrastructures. These trends show how GPU servers and virtualization technologies are evolving to address the needs of next-generation systems and applications, improving operational flexibility in data centers.

NVMe (Non-Volatile Memory Express) storage solutions have transformed the high-performance storage landscape in today’s data centers. NVMe exploits the speed of flash memory by operating over PCIe interfaces. It outperforms legacy interfaces such as SATA and SAS in data transfer rate, efficiency, and latency, with improvements of roughly 3x to 10x.

NVMe storage can achieve read and write speeds of over 7 GB/s, eclipsing other storage interface options. This enables real-time analytics, artificial intelligence, 4K/8K video analytics, and other data-intensive applications. One study suggests that adopting NVMe solutions allows some businesses to reach 6x faster data processing and 5x lower latency, enabling real-time operational scaling.

NVMe is well matched to multi-core processors because it supports multi-queue command parallelism (up to roughly 64,000 command queues), so many cores can issue I/O at once. This provides additional flexibility for virtualization environments, which must handle massive numbers of I/O requests. The benefits can be extended by deploying NVMe over Fabrics (NVMe-oF), which carries NVMe’s low-latency, high-speed performance over Ethernet or Fibre Channel networks.

Integrating NVMe into management strategies opens new opportunities for enterprises by enhancing performance, scalability, and reliability. Its growing role in modern IT solutions signals that NVMe is becoming important for performance-oriented workloads and cutting-edge technologies.

The growth of big data, cloud, and IoT applications has rapidly advanced high-speed networking. 400G Ethernet is a prime example: it offers four times the bandwidth of 100G, carrying enormous traffic volumes in data centers and supporting hyperscale network structures. Adoption of 400G Ethernet has been increasing steadily and is projected to grow at a compound annual rate of over 30% until 2028.
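
The fourfold bandwidth difference between 100G and 400G translates directly into transfer time. The sketch below computes an idealized figure at full line rate; it ignores protocol overhead and congestion, and the 10 TB dataset size is a hypothetical example.

```python
def transfer_seconds(size_bytes: int, link_gbps: int) -> float:
    """Ideal time to move `size_bytes` over a link, ignoring protocol overhead."""
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    bits = size_bytes * 8
    return bits / (link_gbps * 10**9)


if __name__ == "__main__":
    dataset_bytes = 10 * 10**12  # hypothetical 10 TB dataset (decimal terabytes)
    for speed in (100, 400):
        seconds = transfer_seconds(dataset_bytes, speed)
        print(f"{speed}G Ethernet: {seconds:.0f} s")
```

At line rate the 10 TB transfer drops from 800 seconds on 100G to 200 seconds on 400G; real-world throughput will be lower on both links.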

With continuous developments in software-defined networking (SDN) and network function virtualization (NFV), network flexibility and efficiency are improving by leaps and bounds. Because SDN decouples network control from the underlying hardware, control is simplified and configuration is centralized, which speeds deployment. This is a major help for enterprises managing complex multi-cloud environments. Additionally, NFV implements traditional network appliances such as firewalls and load balancers in software, which reduces reliance on physical hardware and improves cost and scaling efficiency.

Breakthroughs in fiber optics have also greatly improved network speeds and lowered latency. Dense Wavelength Division Multiplexing (DWDM) enhances existing optical fiber infrastructure by combining multiple light wavelengths onto a single fiber. This provides very high data throughput and network efficiency, which is essential for 5G networks and beyond.

Integrating edge computing with high-speed networking is changing how data is processed and transmitted. Edge computing moves computation closer to where data is generated, which improves response time while reducing bandwidth use across the network. This makes edge computing a key enabler for 5G and for latency-sensitive applications such as self-driving vehicles and real-time data processing.

Together, these innovations allow the development of fast, reliable, and highly scalable networks. They are vital for anticipating future demands, including artificial intelligence (AI) workloads, quantum computing, the ever-growing number of interconnected devices worldwide, and growing digital dependency.

A: Across the 1U to 4U range, rackmount servers offer strong performance and scalability in multiple configurations. They also provide room for expansion, such as GPU slots, PCIe Gen 4 slots, additional drive bays, and other configuration options. This flexibility lets computing resources be tailored closely to specific demands.

A: The 1U rack server is sleek and compact, and is best suited to space-restricted environments. A 2U rackmount server can support more PCIe cards, more memory, multi-node setups, and more GPUs, which gives it higher performance.

A: AMD EPYC 7003 series processors offer high core counts and PCIe Gen 4 support. This combination of compute power and efficiency makes them a strong choice for HPC workloads.

A: Intel Xeon Scalable processors enhance rack server performance with features such as PCIe Gen 3 and Gen 4 lanes, high memory bandwidth, and support for multiple GPUs. They are optimized for high-performance server environments with intensive workloads.

A: A 4U server has considerable space for internal components, which maximizes expansion and customizability. It accommodates several GPUs and more drive bays, making it suited to intensive applications that need high compute power along with advanced cooling.

A: Absolutely. Rackmount servers can be configured to support HPC applications. They offer robust performance, modular configurations, and advanced technologies such as PCIe Gen 4 and AMD EPYC 7003 series processors.

A: Modular configurations are flexible server architectures that can be expanded or upgraded. This versatility supports efficient resource allocation, meeting current demand, future growth, and integration of new technology with minimal disruption.

A: Essential components of a high-performance 2U rackmount server are multi-processor capability, numerous PCIe Gen 4 expansion slots, high memory capacity with DDR4, and support for several GPUs. Together these reduce bottlenecks in current and future workloads within demanding applications.

A: Yes, brands like Dell, Asus, and Intel are known for providing reliable rackmount server solutions. These brands offer a range of products from 1U to 4U, powered by Intel Xeon and AMD EPYC processors, ensuring high performance and dependability in various server environments.
