Computing power has become the core driving force behind progress in artificial intelligence (AI), and demand for it is rising sharply as AI and high-performance computing (HPC) workloads scale. NVIDIA’s latest-generation GB300, with its substantial performance gains, has become a key engine for large-scale AI model training and inference.

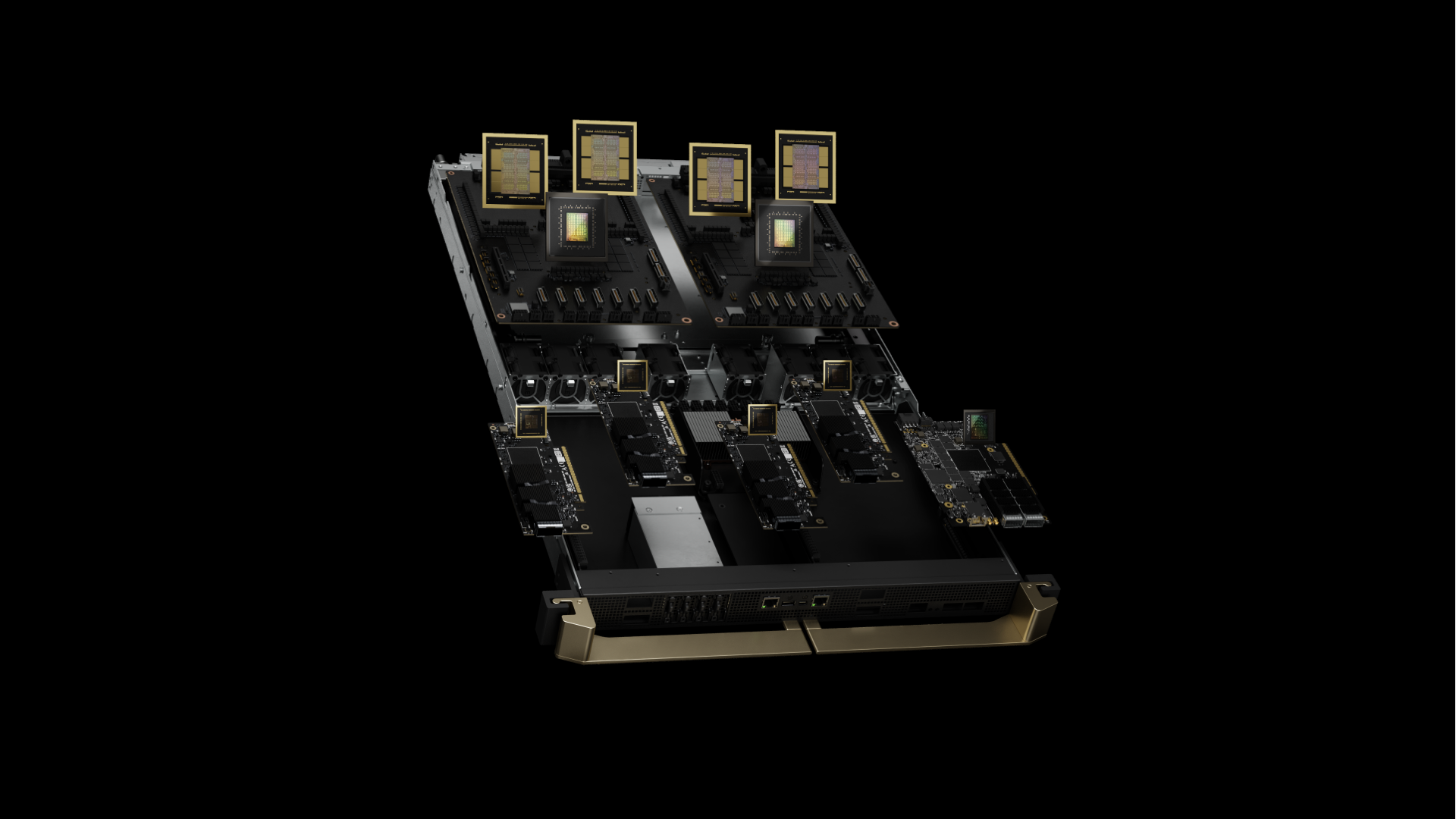

As a global leader in GPU manufacturing, NVIDIA has launched the revolutionary GB300 NVL72 system, which is reshaping AI infrastructure with its innovative design. This system is not merely a stack of hardware, but an optimized solution specifically tailored for large-scale AI training and inference workloads.

The NVIDIA GB300, built on the Blackwell Ultra architecture, is a major upgrade over the GB200, delivering higher AI computing performance and memory capacity. Specifically, the FP4 performance of a single GB300 GPU is about 1.5 times that of the GB200.

In addition, the memory has been upgraded to 12-layer stacked HBM3E, expanding per-GPU capacity from 192GB on the GB200 to 288GB. These improvements make the GB300 particularly well-suited to demanding AI and HPC applications, especially those involving large-scale datasets and complex models.

The NVIDIA GB300 NVL72 is a fully liquid-cooled, rack-scale AI platform that integrates 72 Blackwell Ultra GPUs and 36 Arm-based Grace CPUs. This design is purpose-built for AI factories and enterprise-grade applications, delivering unprecedented computing density and efficiency.
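The rack-level totals follow directly from the per-GPU figures quoted above. A minimal Python sketch, using only the GPU count, CPU count, and HBM3E capacities stated in this article; the names are illustrative, and decimal units (1 TB = 1000 GB) are an assumption:

```python
# Figures quoted in this article.
GPUS_PER_RACK = 72    # Blackwell Ultra GPUs in one GB300 NVL72
CPUS_PER_RACK = 36    # Grace CPUs in one GB300 NVL72
HBM_GB_GB300 = 288    # HBM3E capacity per GPU (GB300)
HBM_GB_GB200 = 192    # HBM3E capacity per GPU (GB200)


def rack_hbm_tb(gpus: int, hbm_gb_per_gpu: int) -> float:
    """Aggregate HBM capacity in TB, assuming 1 TB = 1000 GB."""
    return gpus * hbm_gb_per_gpu / 1000


rack_tb = rack_hbm_tb(GPUS_PER_RACK, HBM_GB_GB300)
capacity_ratio = HBM_GB_GB300 / HBM_GB_GB200
gpus_per_cpu = GPUS_PER_RACK // CPUS_PER_RACK

print(f"Aggregate HBM3E per rack: {rack_tb:.1f} TB")
print(f"Per-GPU capacity vs. GB200: {capacity_ratio:.2f}x")
print(f"GPUs per Grace CPU: {gpus_per_cpu}")
```

The per-GPU memory increase (1.5×) happens to match the FP4 performance ratio quoted earlier, and each Grace CPU is paired with two GPUs.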

According to NVIDIA’s official description, the system achieves significant improvements in AI performance: up to 1.5× the AI performance of the GB200 NVL72, and up to a 50× increase in revenue potential for AI factories compared with Hopper-based systems.

The core advantage of the GB300 lies in its innovative approach to handling high power consumption. Traditional air-cooling systems struggle to manage multi-kilowatt power levels, while the GB300 adopts a fully liquid-cooled design that efficiently dissipates heat, ensuring stable operation even under heavy workloads.

This not only reduces data center energy consumption (with a PUE as low as 1.1) but also enhances system reliability and scalability. For example, companies such as CoreWeave and Dell have already begun deploying the GB300 NVL72 to build large-scale AI clusters, supporting a wide range of workloads from training large language models (LLMs) to real-time inference.
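PUE (power usage effectiveness) is the ratio of total facility power to IT equipment power, so a PUE of 1.1 means roughly 10% overhead for cooling and power delivery on top of the IT load. The sketch below illustrates the arithmetic. The 1,000 kW IT load and the 1.5 comparison PUE are hypothetical values chosen for illustration, not figures from NVIDIA or from this article:

```python
def facility_power_kw(it_load_kw: float, pue: float) -> float:
    """Total facility power implied by PUE = facility power / IT power."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_kw * pue


def overhead_kw(it_load_kw: float, pue: float) -> float:
    """Non-IT power (cooling, power delivery, etc.) implied by the PUE."""
    return facility_power_kw(it_load_kw, pue) - it_load_kw


IT_LOAD_KW = 1000.0   # hypothetical IT load, for illustration only
PUE_LIQUID = 1.1      # figure cited in this article
PUE_BASELINE = 1.5    # hypothetical air-cooled comparison point

liquid_overhead = overhead_kw(IT_LOAD_KW, PUE_LIQUID)
baseline_overhead = overhead_kw(IT_LOAD_KW, PUE_BASELINE)
saved_kw = baseline_overhead - liquid_overhead

print(f"Overhead at PUE {PUE_LIQUID}: {liquid_overhead:.0f} kW")
print(f"Overhead at PUE {PUE_BASELINE}: {baseline_overhead:.0f} kW")
print(f"Difference: {saved_kw:.0f} kW for the same IT load")
```

The saving scales linearly with IT load, which is why a small PUE difference matters more as rack power density rises.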

The NVIDIA GB300 is built on advanced process technology and a new chip architecture, delivering significantly higher performance per card than its predecessor. It is widely used in large-scale model training, inference acceleration, scientific computing, and cloud data centers.

However, the powerful performance of the GB300 also brings new challenges, particularly in data transmission speed and stability. With single-card power consumption approaching or even exceeding the kilowatt level, GPU clusters built on the GB300 place unprecedented demands on interconnect bandwidth and cooling. In such environments, traditional air-cooling solutions are no longer sufficient.

With the explosive growth of AI computing demand, data center interconnects require higher-bandwidth optical modules for support. Traditional optical modules are prone to overheating in high-temperature environments, leading to signal attenuation or failures. As a result, liquid cooling technology has gradually become the optimal choice for high-performance interconnects. In this context, liquid-cooled optical module product lines have emerged as the perfect complement to high-performance systems such as the GB300.

With the introduction of cold plate liquid cooling in GB200 racks and its continuation in GB300, it has become clear that traditional air cooling is no longer sufficient to meet the thermal demands of data centers under the trend of continuously increasing power consumption. As data center integration intensifies, cooling solutions must evolve accordingly.

A cross-comparison of current networking trends among leading GPU vendors shows that whether it is NVLink, UALink, SUE, RoCE, or CM384, the core principle of their architectures is to reduce the number of nodes while enabling broader, low-latency interconnects.

The evolution of GPU interconnect solutions is continuously raising the requirements for data center integration. Traditional air-cooling approaches cannot meet the economic and density demands of compute centers dominated by accelerator cards. In contrast, liquid cooling—whether cold plate, direct-to-chip spray, or immersion—offers a far more effective solution for high-density deployments.

The liquid cooling architecture of GB300 highlights the strategic importance of cooling technologies in modern data centers. AI servers like GB300 generate massive amounts of heat, requiring not only the cooling of CPUs and GPUs but also the extension of cooling solutions to network interconnect components. As the core element of data transmission, optical modules are responsible for signal conversion between servers. However, traditional air-cooled modules cannot adapt to immersion or spray-type liquid cooling environments. This is precisely the opportunity for liquid-cooled optical modules to emerge.

By immersing modules in dielectric coolant or using dedicated liquid cooling interfaces, liquid-cooled optical modules achieve efficient heat dissipation. This design not only enhances module durability but also significantly reduces power consumption and noise. Compared with traditional optical modules, liquid-cooled versions can save up to 70% in energy, while supporting longer transmission distances and higher bit rates—perfectly matching the high-speed interconnect demands of GB300.

Liquid-cooled optical modules adopt an internal liquid circulation system to remove heat directly, offering significant advantages over air cooling.

Currently, liquid-cooled optical modules have been gradually deployed in scenarios such as supercomputing centers, AI/GPU clusters, and green data centers. With the commercialization of GB300, liquid-cooled optical modules will become a key enabler in the field of compute interconnects.

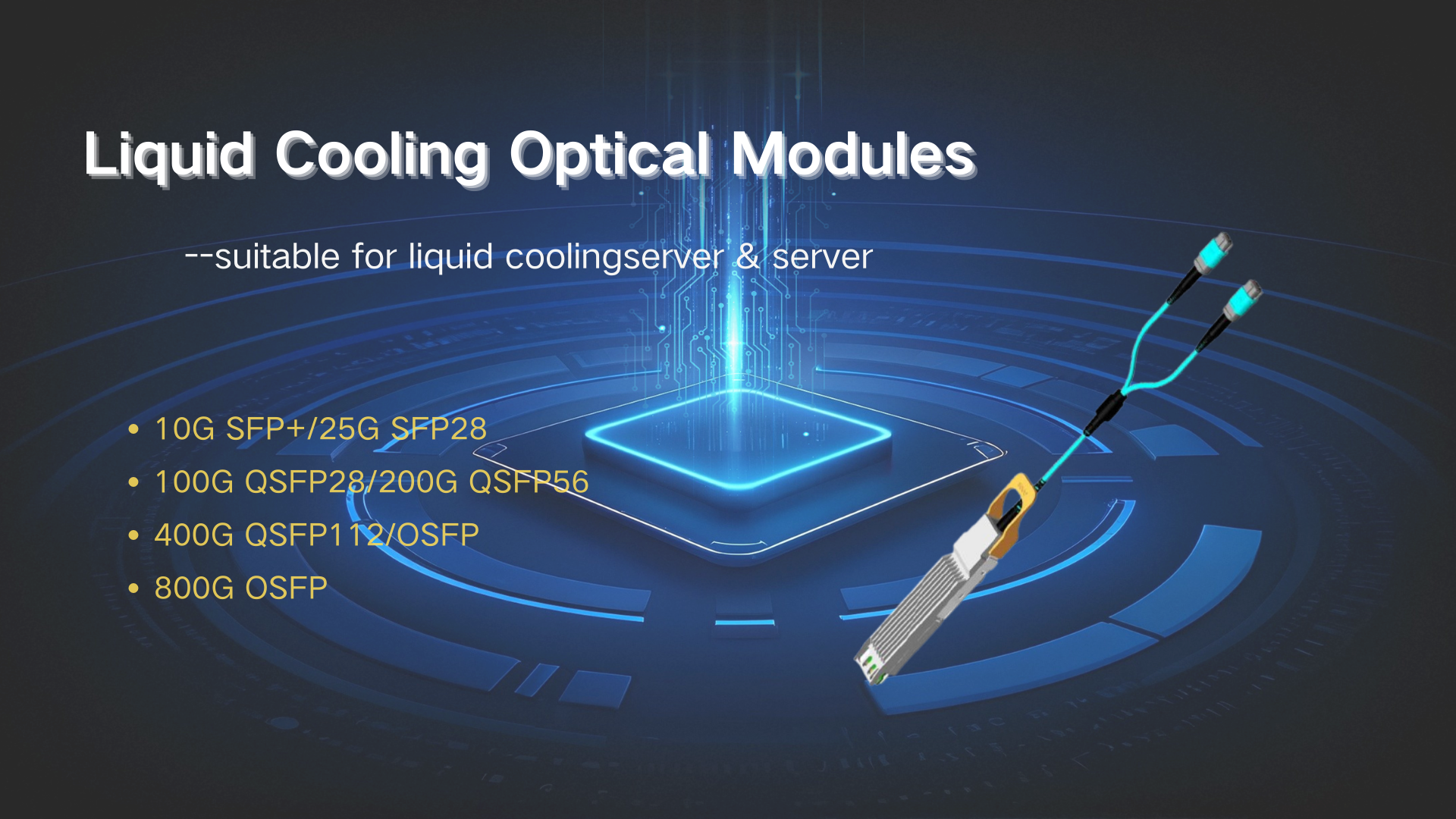

The liquid-cooled optical module ecosystem has already taken shape, with multiple vendors such as Gigalight, InnoLight, Source Photonics, and AscentOptics introducing products that span a wide range of speeds from 10G to 1.6T. Below is an overview of the representative product series:

10G SFP+ and 25G SFP28 liquid-cooled optical modules typically adopt VCSEL lasers and support multimode fiber transmission, with power consumption as low as 1W. These modules are LC-interface compatible and hot-pluggable, making them ideal for short-reach data center interconnects. In entry-level AI server clusters, they provide a stable and cost-effective interconnect option for liquid-cooled environments, laying the foundation for the initial adoption of liquid cooling technology.

At the mid-speed level, representative products include 100G QSFP28 SR4 and 200G QSFP56 SR4 liquid-cooled optical modules. The 100G QSFP28 SR4 uses 4×25G NRZ signaling, while the 200G QSFP56 SR4 uses 4×50G PAM4 modulation. Both support transmission distances of up to 100 meters and feature customized pigtails to enhance airtightness, ensuring reliability in liquid-cooled environments. Unlike traditional air-cooled designs, they can operate stably at immersion depths of up to 1 meter, and they are RoHS-compliant with a lead-free, eco-friendly design. This series is widely deployed for internal interconnects in GB300-like systems, effectively supporting data parallelism in AI training workloads.

High-end products include AscentOptics’ 400G QSFP112 SR4, 800G QSFP-DD/OSFP, and 1.6T LPO (Linear Pluggable Optics) liquid-cooled modules. These modules integrate silicon photonics technology, including DR-series (single-mode) variants, which helps reduce costs. Designed for AI-driven high-performance requirements, they reduce signal processing latency and increase bandwidth density while significantly optimizing PUE, making them ideal for hyperscale data centers. Typical applications include integration with GB300 NVL72 to build AI inference factories, enabling efficient edge-to-cloud data transmission.

All of these product series emphasize high-reliability packaging, such as using advanced airtightness technology, to ensure stable operation in liquid-cooling environments.
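The speed tiers above map onto a small set of lane configurations. The sketch below tabulates the commonly used nominal lane count and per-lane rate for each form factor and checks that they multiply out to the headline speed. These lane configurations are typical industry values, not figures taken from any vendor datasheet mentioned here, and the `ModuleSpec` type is purely illustrative; a specific product may differ (for instance, the 800G and 1.6T entries assume 8 lanes):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModuleSpec:
    """Illustrative record; nominal values, not vendor datasheet figures."""
    name: str
    lanes: int
    lane_rate_gbps: int   # nominal per-lane data rate
    modulation: str

    @property
    def total_gbps(self) -> int:
        # Headline speed is lane count times nominal per-lane rate.
        return self.lanes * self.lane_rate_gbps


MODULES = [
    ModuleSpec("10G SFP+", 1, 10, "NRZ"),
    ModuleSpec("25G SFP28", 1, 25, "NRZ"),
    ModuleSpec("100G QSFP28 SR4", 4, 25, "NRZ"),
    ModuleSpec("200G QSFP56 SR4", 4, 50, "PAM4"),
    ModuleSpec("400G QSFP112 SR4", 4, 100, "PAM4"),
    ModuleSpec("800G QSFP-DD/OSFP", 8, 100, "PAM4"),   # assumed 8 lanes
    ModuleSpec("1.6T LPO", 8, 200, "PAM4"),            # assumed 8 lanes
]

for m in MODULES:
    print(f"{m.name:<20} {m.lanes} x {m.lane_rate_gbps}G {m.modulation:<4} "
          f"= {m.total_gbps}G")
```

Each step up in per-lane rate concentrates more signal processing, and therefore more heat, in the same pluggable form factor, which is the thermal pressure the liquid-cooled packaging is meant to address.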

The launch of NVIDIA GB300 NVL72 marks the entry of AI computing into the liquid-cooling era, with liquid-cooled optical module series providing a solid foundation for interconnects. These modules not only address thermal management and energy efficiency challenges but also drive data centers toward greener and more sustainable operations. As AI applications continue to deepen, liquid-cooled optical modules are expected to experience explosive growth after 2025, injecting new vitality into super-systems like the GB300.