Modern digital infrastructure relies on data centers, which make it possible for information to move around the world without interruption. As demand for storage capacity and faster connections has grown, the design of data center networks has changed with it. In this article, we look at the data center network architectures in use today, the technologies they are built from, and how to implement them properly, covering everything from software-defined networking (SDN) to the integration of advanced hardware components. After reading it, you should have a solid understanding of current and emerging data center designs and be better prepared for the problems that come up when working with them. Let's explore why these advances were made and how they improve performance, scalability, and reliability in data center networks worldwide.

A data center network is the part of a data center that lets servers, storage systems, and other computing devices share information and resources efficiently. Its main goals are high performance, high speed, and reliable connectivity. Modern data centers use technologies such as software-defined networking (SDN). SDN manages traffic from a central point, which makes it possible to control the whole network flexibly. They also use high-speed Ethernet and fiber optic cabling to provide the bandwidth that large applications and services demand. By combining these design principles and technologies, data center networks achieve the scalability, reliability, and efficiency that today's digital economy requires.

Modern IT infrastructure relies on data center networks because they are the main medium for sharing information and managing resources within a data center. They carry the communication among servers, storage systems, and other devices, providing fast and secure access to data. This efficiency is needed to meet the low-latency and high-throughput requirements of modern applications with high availability demands. Technologies such as SDN and high-speed links make the overall infrastructure more flexible, scalable, and resilient. That keeps operations running smoothly in dynamic digital environments.

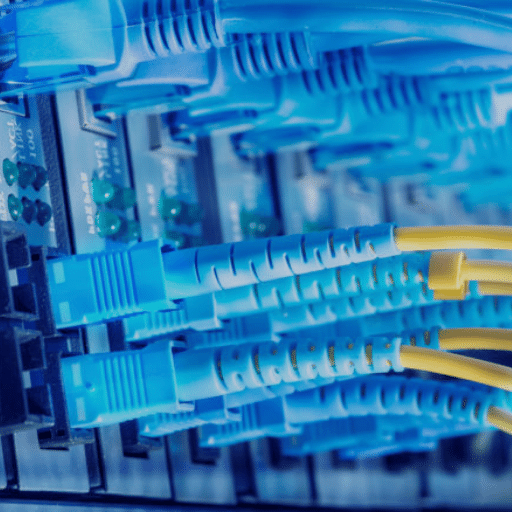

Understanding how a data center network is interconnected starts with the components and technologies that make up its infrastructure. At the heart of this interconnection is the network fabric: the switches, routers, and links that form a highly resilient, low-latency mesh. High-speed Ethernet and fiber optic cables are commonly used to reach the required bandwidth and performance.

Traditionally, the architecture follows a tiered model with core, aggregation, and access layers, which keeps data flow orderly and processing efficient. The core layer provides fast transport between different parts of the network, while the aggregation layer collects traffic from multiple access switches. Servers and storage systems attach at the access layer. As a result, devices connected to the same access switch can communicate locally without sending traffic up through the core.

Newer approaches such as spine-leaf architecture and SDN improve interconnectivity further. In a spine-leaf design, every leaf (access) switch connects to every spine switch, so any two leaves are always the same number of hops apart. This keeps latency low and predictable and provides equal bandwidth across the whole network. SDN adds centralized control: resources are assigned dynamically so they can be used optimally, and management can be automated, which makes the network more agile. Together, these technologies provide strong, flexible interconnections within data centers that can scale to meet future growth.
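A small model makes the full-mesh property between the two layers concrete. The Python sketch below is illustrative only. It builds a toy fabric with hypothetical switch names (`spine1`, `leaf1`, and so on) as an adjacency map and lists the paths between two leaves. It shows that every leaf-to-leaf path is exactly two hops and that there is one equal-cost path per spine. Real fabrics spread flows over those paths with ECMP hashing, which is not modeled here.

```python
from itertools import product


def build_spine_leaf(num_spines: int, num_leaves: int) -> dict[str, set[str]]:
    """Return an adjacency map in which every leaf links to every spine."""
    spines = [f"spine{i}" for i in range(1, num_spines + 1)]
    leaves = [f"leaf{i}" for i in range(1, num_leaves + 1)]
    fabric: dict[str, set[str]] = {name: set() for name in spines + leaves}
    for spine, leaf in product(spines, leaves):
        fabric[spine].add(leaf)
        fabric[leaf].add(spine)
    return fabric


def leaf_to_leaf_paths(fabric: dict[str, set[str]], src: str, dst: str) -> list[list[str]]:
    """List every path src -> spine -> dst (leaves never link to each other)."""
    return [[src, spine, dst] for spine in sorted(fabric[src]) if dst in fabric[spine]]


fabric = build_spine_leaf(num_spines=4, num_leaves=8)
paths = leaf_to_leaf_paths(fabric, "leaf1", "leaf7")
print(len(paths))                   # 4 equal-cost paths, one per spine
print({len(p) - 1 for p in paths})  # {2}: every path is two hops
```

Adding a spine adds one more equal-cost path between every pair of leaves. This is where the even bandwidth distribution comes from.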

Connectivity and bandwidth are essential to running a data center efficiently. High connectivity guarantees uninterrupted communication between servers, storage devices, and end users, keeping performance and reliability at their best. Effective bandwidth management, in turn, lets the network handle large volumes of data. It supports fast, low-latency transfers so that users get a seamless experience with any application or service.

Strong connections and adequate capacity are also prerequisites for scalability: as a data center takes on more workloads, its performance should not degrade. They also make advanced technologies such as virtualization and cloud computing possible, since these demand high throughput and minimal downtime. Investing in better connectivity and more bandwidth today therefore makes a data center more useful now and better able to cope with future technological advances.
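A rough calculation shows how bandwidth and latency each contribute to transfer time. The sketch below assumes a deliberately simplified model, time = latency + (size * 8) / bandwidth. It ignores protocol overhead, TCP behavior, and congestion, so the numbers are illustrative rather than measured.

```python
def transfer_time_s(size_bytes: float, bandwidth_bps: float, latency_s: float) -> float:
    """Idealized transfer time: one-way latency plus serialization time.

    Ignores protocol headers, TCP slow start, retransmissions, and congestion.
    """
    return latency_s + (size_bytes * 8) / bandwidth_bps


GB = 10**9  # decimal gigabyte, matching how link speeds are quoted

# Moving a 10 GB dataset over links with 100 microseconds of latency
for gbps in (1, 10, 100):
    t = transfer_time_s(10 * GB, gbps * 10**9, 100e-6)
    print(f"{gbps:>3} Gbps: {t:.4f} s")
```

For the 10 GB bulk transfer, the serialization term dominates (about 8 s at 10 Gbps). For a 1 KB message, even at 100 Gbps, serialization takes under a tenth of a microsecond, so latency dominates. That is why data center designs care about both.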

Powerful data center networking is very important for workload and compute performance. High-speed connectivity and ample bandwidth let you distribute computational tasks across many servers and nodes, avoiding bottlenecks and keeping workloads balanced. This improves the performance of compute resources and lets them handle heavy operations and complex data processing without delays.
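Greedy least-loaded placement is one simple way to picture distributing tasks across nodes. The sketch below is a swapped-in illustration (the classic "largest task first, to the least-loaded server" heuristic), not a scheduler the article describes. The task costs and node names are hypothetical.

```python
import heapq


def assign_tasks(task_costs: list[float], servers: list[str]) -> dict[str, list[int]]:
    """Greedy least-loaded placement: each task goes to the server with the
    smallest accumulated load so far (ties broken by server name)."""
    heap = [(0.0, name) for name in servers]
    heapq.heapify(heap)
    placement: dict[str, list[int]] = {name: [] for name in servers}
    # Placing the largest tasks first usually gives a more even final spread.
    for idx in sorted(range(len(task_costs)), key=lambda i: task_costs[i], reverse=True):
        load, name = heapq.heappop(heap)
        placement[name].append(idx)
        heapq.heappush(heap, (load + task_costs[idx], name))
    return placement


tasks = [7, 3, 5, 2, 6, 4]
placement = assign_tasks(tasks, ["node-a", "node-b", "node-c"])
loads = {n: sum(tasks[i] for i in idxs) for n, idxs in placement.items()}
print(loads)  # {'node-a': 9, 'node-b': 9, 'node-c': 9}
```

Greedy placement is not guaranteed to be optimal, but it is cheap and usually keeps loads close. Here the 27 units of work split evenly.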

To optimize workload management further, technologies such as Software-Defined Networking (SDN) and Network Functions Virtualization (NFV) allocate resources dynamically according to current demand. This reduces network congestion and improves overall system performance. Combining low-latency connections with high throughput also supports big data analytics, AI, and machine learning by ensuring fast access to data and processing capacity.
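The benefit of centralized control is that one component sees the load on every link. The toy controller below places each new flow on the candidate path whose busiest link is least loaded. The `ToyController` class and its methods are hypothetical, not the API of any real SDN controller. Real controllers would then push forwarding rules to switches through a southbound protocol such as OpenFlow, which this sketch leaves out.

```python
from dataclasses import dataclass, field


@dataclass
class ToyController:
    """Hypothetical centralized controller with a global view of link load (Gbps)."""
    link_load: dict[tuple[str, str], float] = field(default_factory=dict)

    def path_cost(self, path: list[str]) -> float:
        # A path is only as good as its busiest link.
        return max(self.link_load.get((a, b), 0.0) for a, b in zip(path, path[1:]))

    def place_flow(self, candidates: list[list[str]], demand_gbps: float) -> list[str]:
        """Pick the candidate path whose busiest link is least loaded, then
        record the new flow's demand on every link of that path."""
        best = min(candidates, key=self.path_cost)
        for a, b in zip(best, best[1:]):
            self.link_load[(a, b)] = self.link_load.get((a, b), 0.0) + demand_gbps
        return best


ctl = ToyController()
candidates = [["leaf1", "spine1", "leaf2"], ["leaf1", "spine2", "leaf2"]]
first = ctl.place_flow(candidates, 40.0)   # both idle: min() keeps the first
second = ctl.place_flow(candidates, 40.0)  # spine1 path is now busier
print(first[1], second[1])  # spine1 spine2
```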

In short, strong data center networking not only supports today's computing needs but also provides the scalability and efficiency that future technologies will require. This keeps high-performance data centers running sustainably.

The three-tier network topology, also called hierarchical network design, is the standard model in data center networking for efficient traffic management and scalability. Its three layers are the core layer, the distribution layer, and the access layer.
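The sketch below shows why traffic between pods travels farther in a hierarchical design. It models a hypothetical two-pod, three-tier network and counts hops with breadth-first search. For brevity it assumes a single core switch and one distribution and one access switch per pod; production designs duplicate each layer for redundancy.

```python
from collections import deque


def add_link(graph: dict[str, set[str]], a: str, b: str) -> None:
    graph.setdefault(a, set()).add(b)
    graph.setdefault(b, set()).add(a)


def hop_count(graph: dict[str, set[str]], src: str, dst: str) -> int:
    """Breadth-first search; returns the number of links on a shortest path."""
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist
        for nxt in graph[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    raise ValueError(f"{dst} is unreachable from {src}")


# Hypothetical two-pod topology: each pod has one distribution and one access switch.
net: dict[str, set[str]] = {}
for pod in ("1", "2"):
    add_link(net, "core", f"dist{pod}")
    add_link(net, f"dist{pod}", f"access{pod}")
    add_link(net, f"access{pod}", f"server{pod}a")
    add_link(net, f"access{pod}", f"server{pod}b")

print(hop_count(net, "server1a", "server1b"))  # 2: stays on access1
print(hop_count(net, "server1a", "server2a"))  # 6: access -> dist -> core -> dist -> access
```

In a two-tier spine-leaf fabric, the same server-to-server trip between racks takes four links (server, leaf, spine, leaf, server).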

By dividing the network into these three tiers, data centers can achieve faster processing, a more adaptable design, and better readiness for the future. They meet current demands as well as those that arise later.

Spine-leaf network architecture is a newer design used in data centers to increase scalability and reduce latency. Instead of the three tiers of a traditional network, a spine-leaf architecture uses two layers: the spine layer and the leaf layer.

Because it handles east-west (server-to-server) traffic efficiently, this design is well suited to contemporary applications with large bandwidth demands and low response-time requirements, such as cloud computing and big data analytics. It also simplifies scaling, since new leaf switches can be brought online without major changes elsewhere in the existing infrastructure.
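Two numbers usually govern how far a leaf-spine fabric can grow:

- Each leaf's oversubscription ratio (server-facing capacity divided by uplink capacity).
- The spine's port count, since every leaf uses at least one port on every spine.

The sketch below computes both for a hypothetical leaf with 48 x 25G server ports and 6 x 100G uplinks. The port counts are examples, not recommendations.

```python
from fractions import Fraction


def oversubscription(downlinks: int, down_gbps: int, uplinks: int, up_gbps: int) -> Fraction:
    """Ratio of server-facing capacity to fabric-facing capacity on one leaf."""
    return Fraction(downlinks * down_gbps, uplinks * up_gbps)


def max_leaves(spine_ports: int, links_per_leaf: int = 1) -> int:
    """In a two-tier fabric each leaf consumes ports on every spine, so the
    spine's port count caps the number of leaves."""
    return spine_ports // links_per_leaf


# Hypothetical leaf: 48 x 25G server ports and 6 x 100G uplinks (one per spine).
ratio = oversubscription(48, 25, 6, 100)
print(f"{ratio.numerator}:{ratio.denominator}")  # 2:1
print(max_leaves(spine_ports=64))                # 64 leaves with 64-port spines
```

Adding a leaf consumes one more port on each spine and leaves the rest of the fabric untouched. That is why scaling out is simple until the spine ports run out. At that point, the usual options are larger spines or an additional tier.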

Hyper-converged networking solutions combine computing, storage, and networking resources into a single, streamlined infrastructure. They use software-defined networking (SDN) and virtualization technologies to simplify management and increase scalability. The advantages of hyper-converged networking include:

Hyper-converged networks are ideal for modern data centers that support cloud computing environments, virtual desktop infrastructure (VDI), and applications that need a strong, scalable foundation.

Cisco provides a set of advanced products for optimizing efficiency, agility, and scalability in the data center network. The main ones are:

These systems give data centers built on Cisco solutions strong, scalable, and secure network foundations that meet the requirements of cloud-based services and high-performance applications.

Case Study 1: The University of California, San Diego (UCSD)

To support its extensive research and academic activities, UCSD deployed Cisco's Application Centric Infrastructure (ACI) to improve its data center performance. Among other results, it cut network provisioning time by 30% and streamlined operational processes through improved automation. This allowed UCSD to scale its network resources dynamically to meet varying demand and to handle heavier data loads efficiently.

Case Study 2: Shutterfly

Shutterfly, an online photography service, adopted Cisco's Nexus Series Switches and Unified Computing System (UCS) to transform its data center operations. The combination significantly lowered latency. It also improved the agility needed for high-demand periods such as holidays, when peak loads would otherwise have been difficult to manage quickly. The resulting high-performance infrastructure delivered services smoothly and without interruption, enhancing the customer experience.

Case Study 3: Hyundai Motor Company

Hyundai Motor Company upgraded its network infrastructure with Cisco data center solutions, including Nexus Series Switches and ACI. The move greatly improved system reliability and made resource allocation more efficient, enabling the company to manage large-scale data transfers across its automotive operations. This approach made real-time analysis possible and sped up supply chain operations, supporting operational excellence as innovation continues in the automotive industry.

The best way to keep these data centers running efficiently is to address the problems described above, adopt new ideas, and follow standard practices that have proved successful elsewhere.

Troubleshooting a data center network calls for a methodical approach. The following steps are a good way to address issues quickly:

Data center administrators who follow these steps will save time and solve problems efficiently, keeping their networks running smoothly.

Drawing on insights from reliable sources, effective network management strategies can be summed up as follows:

Organizations achieve a resilient network management strategy by combining proactive monitoring, automation, and strong security protocols.
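Proactive monitoring usually comes down to polling metrics and raising an alert before a threshold breach turns into an outage. The sketch below is a minimal threshold check. The metric names and limits are hypothetical; in practice they would come from the hardware's capabilities and the service-level targets.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    warn: float
    critical: float


# Hypothetical thresholds; real values depend on the hardware and the SLA.
THRESHOLDS = {
    "link_utilization_pct": Threshold(warn=70.0, critical=90.0),
    "crc_errors_per_min": Threshold(warn=1.0, critical=10.0),
    "cpu_pct": Threshold(warn=75.0, critical=95.0),
}


def evaluate(device: str, metrics: dict[str, float]) -> list[str]:
    """Compare one polling sample against the thresholds and return alerts."""
    alerts = []
    for name, value in metrics.items():
        limit = THRESHOLDS.get(name)
        if limit is None:
            continue  # no threshold configured for this metric
        if value >= limit.critical:
            alerts.append(f"CRITICAL {device} {name}={value}")
        elif value >= limit.warn:
            alerts.append(f"WARN {device} {name}={value}")
    return alerts


sample = {"link_utilization_pct": 82.5, "crc_errors_per_min": 0.0, "cpu_pct": 96.0}
print(evaluate("leaf3", sample))
```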

Network automation and virtualization can make data center networks highly efficient, flexible, and scalable. Reputable sources describe the advantages of these methods as follows:

With these technologies, companies can build data center architectures that are more robust, less expensive, and more secure.
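A common first step in network automation is generating device configurations from structured data instead of typing them by hand. The sketch below uses Python's standard `string.Template`. The inventory, addresses, and configuration syntax are hypothetical. They only loosely resemble a switch CLI and are not validated against any vendor's operating system.

```python
from string import Template

# Illustrative template; not validated against any real switch operating system.
LEAF_TEMPLATE = Template(
    "hostname $hostname\n"
    "interface loopback0\n"
    "  ip address $loopback/32\n"
)
UPLINK_TEMPLATE = Template(
    "interface $port\n"
    "  description uplink to $spine\n"
    "  no shutdown\n"
)


def render_leaf(hostname: str, loopback: str, uplinks: dict[str, str]) -> str:
    """Render one leaf's config from structured data (port -> spine name)."""
    parts = [LEAF_TEMPLATE.substitute(hostname=hostname, loopback=loopback)]
    for port, spine in sorted(uplinks.items()):
        parts.append(UPLINK_TEMPLATE.substitute(port=port, spine=spine))
    return "".join(parts)


# Hypothetical inventory: the single source of truth for every leaf.
inventory = {
    "leaf1": {"loopback": "10.0.0.11", "uplinks": {"Ethernet1/49": "spine1", "Ethernet1/50": "spine2"}},
    "leaf2": {"loopback": "10.0.0.12", "uplinks": {"Ethernet1/49": "spine1", "Ethernet1/50": "spine2"}},
}
configs = {name: render_leaf(name, d["loopback"], d["uplinks"]) for name, d in inventory.items()}
print(configs["leaf1"])
```

Because every leaf is rendered from the same template, adding a leaf becomes a one-line change to the inventory. The same data can also feed validation and drift checks.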

Organizations should adopt these trends to build flexible, high-capacity, future-ready infrastructure for data centers that store and generate data at a high rate.

Optimizing a data center fabric for scalability calls for a modular design, high-speed connections, and robustness. Leaf-spine topologies achieve this in current data centers: they simplify network design and reduce latency. In this architecture, every leaf switch connects directly to every spine switch, so performance stays uniform as the network scales out. Two technologies also make expansion easier. Ethernet VPN (EVPN) serves as a control plane and Virtual Extensible LAN (VXLAN) handles encapsulation; together they create flexible Layer 2 overlays on top of the routed fabric.
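Part of VXLAN's scalability comes from its 24-bit segment identifier. The sketch below builds and parses the 8-byte VXLAN header laid out in RFC 7348. The header consists of an 8-bit flags field with the I flag set, 24 reserved bits, the 24-bit VNI, and 8 more reserved bits. The sketch covers only this header. It does not show the outer Ethernet, IP, and UDP headers (destination port 4789) or the EVPN control plane that distributes MAC/IP reachability via BGP. The VNI value 10010 is an arbitrary example.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned destination port (RFC 7348)
I_FLAG = 0x08          # "VNI is valid" flag in the first byte


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte header: flags(8) | reserved(24) | VNI(24) | reserved(8)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # First 32-bit word: flags in the top byte, 24 reserved bits left at zero.
    # Second word: VNI in the top 24 bits, low 8 bits reserved.
    return struct.pack("!II", I_FLAG << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking that the I flag is set."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & I_FLAG:
        raise ValueError("I flag not set; VNI is not valid")
    return vni_word >> 8


hdr = vxlan_header(10010)
print(hdr.hex())       # 0800000000271a00
print(parse_vni(hdr))  # 10010
```

A 24-bit VNI allows 2**24 (about 16.7 million) segments. The 12-bit IEEE 802.1Q VLAN field offers only 4,094 usable IDs. This difference is what makes VXLAN overlays practical at data center scale.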

Staying scalable also requires automation tools for efficient network management. These tools automate routine tasks such as configuration management, monitoring, and troubleshooting, which significantly reduces human error. Software-defined networking (SDN) should also be implemented so that network resources are controlled centrally and allocated dynamically, adapting quickly to changing requirements. By concentrating on these areas, organizations can build a data center fabric that is both scalable and able to absorb future technological changes easily.
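Automation can reduce human error by detecting configuration drift: differences between the configuration a device should have and the one it is actually running. The sketch below compares two configuration texts with Python's standard `difflib`. The configuration snippets are hypothetical. In practice the running config would be retrieved from the device through whatever management interface is in use.

```python
import difflib


def config_drift(intended: str, running: str, device: str) -> list[str]:
    """Return a unified diff of intended vs running config; empty means in sync."""
    return list(
        difflib.unified_diff(
            intended.splitlines(),
            running.splitlines(),
            fromfile=f"{device}/intended",
            tofile=f"{device}/running",
            lineterm="",
        )
    )


intended = "hostname leaf1\ninterface Ethernet1/49\n  description uplink to spine1\n  no shutdown\n"
running = "hostname leaf1\ninterface Ethernet1/49\n  description uplink to spine1\n  shutdown\n"

for line in config_drift(intended, running, "leaf1"):
    print(line)
```

An empty result means the device matches its intended state. A non-empty diff can be reported or, in a more automated setup, used to trigger remediation.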

A: Routers, switches, servers, and other networking systems are the main components of a modern data center network. They work together to provide efficient data transfer and the robust network services a data center needs to operate successfully.

A: Data center switching is designed specifically to handle large volumes of data with low latency. It differs from traditional switching in its use of advanced features such as automation, analytics, and virtual network segmentation, which streamline operations and improve network performance.

A: Automation has become essential to business operations because it enables automatic configuration, management, and optimization of IT infrastructure. It simplifies tasks, freeing staff to focus on higher-value work such as building new applications. It also reduces the errors that occur in manual processes, increasing overall efficiency.

A: Integrating virtual networks into existing physical infrastructure brings many benefits:

- Greater flexibility, better scalability, and improved resource utilization.
- Stronger isolation between network segments.
- More efficient use of devices at the same physical site that are connected through different logical paths or subnets.
- Faster deployment of new applications and data services, since the existing infrastructure can often support them without substantial hardware changes.

A: Interconnect technologies for data centers that store and process huge volumes of information improve performance across the network by providing high-speed, low-latency links between locations. Data can then be shared or transferred quickly, without delays, which makes processing more efficient. Redundant links also improve reliability, keeping services available across multiple locations.
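The standard parallel-availability formula gives an estimate of the reliability gain from redundancy. If each of n independent paths is up with probability a, at least one is up with probability 1 - (1 - a)^n. The sketch below applies it to a hypothetical 99.9% path. Independence is an optimistic assumption, since real paths can share power, cabling, or software faults.

```python
def parallel_availability(a: float, n: int) -> float:
    """Probability that at least one of n independent, identical paths is up."""
    return 1 - (1 - a) ** n


MINUTES_PER_YEAR = 365 * 24 * 60

for n in (1, 2, 3):
    avail = parallel_availability(0.999, n)
    downtime = (1 - avail) * MINUTES_PER_YEAR
    print(f"{n} path(s): availability {avail:.9f}, about {downtime:.3f} min/year down")
```

Under these assumptions, a single 99.9% path is down for roughly 525.6 minutes a year. Two independent paths cut that to about half a minute.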

A: Data center security must be considered when designing a modern data center to protect private information and keep network systems functioning properly. This means implementing strong measures against cyberattacks, unauthorized access, data leakage, and other threats. Those threats target the storage systems that hold data as well as network services and applications.

A: Campus networks cover larger physical areas, such as universities or business parks, linking many buildings together. A modern data center network (DCN) connects servers within one or more data centers. Both require high-speed connections. A DCN must also deliver low latency and high availability and handle the very large traffic volumes generated by large enterprises.

A: These statistics help optimize different parts of the infrastructure. By showing where congestion is occurring, they support capacity planning that prevents delays before they affect service delivery. By identifying the points that cause bottlenecks, they allow those problems to be fixed quickly.
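Link utilization derived from interface byte counters is one of the most common statistics used this way. The sketch below computes average utilization from two samples of a byte counter, such as the 64-bit `ifHCInOctets` counter defined in the IF-MIB. The sample values and link speed are hypothetical. The modulo step handles a single counter wrap between samples.

```python
def utilization_pct(bytes_t0: int, bytes_t1: int, interval_s: float,
                    link_bps: float, counter_bits: int = 64) -> float:
    """Average link utilization between two byte-counter samples.

    Handles a single counter wrap (e.g. a 32-bit counter rolling over).
    """
    delta = (bytes_t1 - bytes_t0) % (2 ** counter_bits)
    return 100.0 * delta * 8 / (interval_s * link_bps)


# 30-second polling interval on a 10 Gbps link (hypothetical samples)
print(round(utilization_pct(1_000_000_000, 31_000_000_000, 30, 10e9), 1))  # 80.0
```

Sustained readings near a link's capacity point to the congestion hotspots that capacity planning should address first.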

A: In this context, it refers to the part of the network responsible for forwarding packets between devices, such as switches and routers, in a distributed computing environment. It ensures efficient transfer across these components.
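IP forwarding decisions are made by longest-prefix match against a forwarding table. The sketch below shows the idea with Python's standard `ipaddress` module and a hypothetical three-entry table. Hardware performs the same lookup in specialized memory (for example TCAM) rather than with a linear scan.

```python
import ipaddress

# Hypothetical forwarding table: prefix -> next hop or egress interface.
FIB = {
    ipaddress.ip_network("0.0.0.0/0"): "spine1",
    ipaddress.ip_network("10.1.0.0/16"): "spine2",
    ipaddress.ip_network("10.1.2.0/24"): "Ethernet1/5",
}


def lookup(dst: str) -> str:
    """Longest-prefix match: of all prefixes containing dst, use the most specific."""
    addr = ipaddress.ip_address(dst)
    matches = [net for net in FIB if addr in net]  # default route always matches
    best = max(matches, key=lambda net: net.prefixlen)
    return FIB[best]


print(lookup("10.1.2.7"))   # Ethernet1/5 (matches the /24)
print(lookup("10.1.9.9"))   # spine2 (matches the /16)
print(lookup("192.0.2.1"))  # spine1 (default route)
```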

A: Advanced networking resources and the automation tools that come with them make setup fast and convenient, since the necessary configurations can be established easily. They also add flexibility: scalability is not a limiting factor, so the network can meet enterprise requirements and operational needs dynamically.