In today’s digital world, efficient data flow and facility management are essential for companies of all sizes to compete. “The Ultimate Guide to Modern Data Center Design: Best Practices and Innovations” examines present-day methodologies and breakthrough technologies to provide an in-depth overview of the industry. This book aims to equip IT staff, data center administrators, and technology enthusiasts with the practical guidance they need to plan, build, and maintain data center infrastructure that stays efficient, reliable, and cost-effective over time, with sustainability in mind. As Table 1 shows, the guide covers the full range of modern data center advancements, from the latest green energy solutions and advanced cooling methods to automation and security enhancements. Join us on a journey that demystifies modern data center design and shows how to put these innovations to work for your business.

At its foundation, modern data center architecture is an organized layout that combines the physical and virtual resources used to store, process, and deliver data and applications across platforms. This architecture matters because it is the backbone of today’s digital operations: it enables enterprises to deliver content, services, and applications with unprecedented speed and reliability, and the future development of information systems depends on it. The architecture integrates state-of-the-art technologies such as scalable computing resources, energy-efficient power and cooling, and advanced security systems so that operational transitions are seamless. In other words, a well-designed data center architecture meets current technological requirements and accommodates future advances, keeping the business nimble in an ever-changing digital landscape.

Efficient design is crucial to the success and sustainability of data center operations. One clear trend I’ve noted over my long experience in the industry is that optimized design can save vast amounts of energy and money. The goal is an environment where hardware and software work together smoothly, minimizing waste and maximizing output. A well-designed data center can keep pace with technological change without large capital outlays. In today’s environmentally conscious world, efficiency also means a lower carbon footprint, aligning operational goals with global sustainability efforts. In short, efficient design ensures that data centers can meet ever-increasing digital traffic while remaining resilient and responsive to the evolving needs of businesses and their customers.

Traditional data center designs mainly aimed to maximize floor space and raw computing power, often at the expense of energy efficiency and flexibility. These facilities typically used raised floors for cabling and airflow management, with power and cooling systems that were not matched to actual demand. Modern data center designs, in contrast, focus on flexibility, scalability, and sustainability. The move toward hot aisle containment and modular, scalable infrastructure significantly lowers energy consumption. Recent studies report that such designs can achieve a Power Usage Effectiveness (PUE) close to 1.1, a stark improvement over the average of about 2.0 for traditional facilities. This transformation reflects both technological advances and a broader understanding of how data centers fit into global information infrastructure and environmental stewardship.
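PUE is defined as total facility power divided by the power delivered to IT equipment, so a PUE of 2.0 means the facility spends as much on cooling, power conversion, and lighting as on computing, while 1.1 means only about 10% overhead. The minimal Python sketch below computes both figures; the kilowatt readings are hypothetical values chosen to match those ratios, not measurements.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness = total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    if total_facility_kw < it_equipment_kw:
        raise ValueError("total facility power cannot be below IT power")
    return total_facility_kw / it_equipment_kw

# Hypothetical readings: 1,000 kW of IT load in each facility.
traditional = pue(total_facility_kw=2000.0, it_equipment_kw=1000.0)
modern = pue(total_facility_kw=1100.0, it_equipment_kw=1000.0)

# Overhead (cooling, power conversion, lighting) per kW of IT load.
print(f"traditional PUE={traditional:.2f}, overhead={traditional - 1:.2f} kW per IT kW")
print(f"modern PUE={modern:.2f}, overhead={modern - 1:.2f} kW per IT kW")
```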

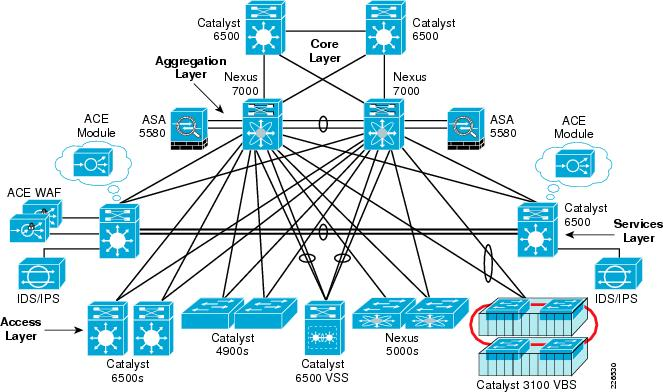

When assessing a data center’s workload, compute, and connectivity needs, start by estimating how much data will be processed and stored. Examine the applications and services the data center will run, and consider their computational intensity, memory requirements, and I/O patterns. This assessment determines the computing resources required: CPU/GPU performance levels, the amount of RAM, SSD or HDD storage, and the storage architecture (SAN, NAS, or DAS). Connectivity is equally critical, covering not only external Internet and intranet links but also internal data transfer speeds. The design should meet current needs and anticipate future growth so the data center can expand without frequent, disruptive upgrades, which requires a scalable, flexible network architecture that can absorb increased workloads and data traffic. Knowing these parameters makes it possible to build a data center that is efficient, robust, and future-ready, able to evolve along with the business.
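The resource assessment described above can be sketched as a simple aggregation: sum each workload’s requirements and add headroom for growth. In this minimal Python sketch, the `Workload` fields, the sample workloads, and the 30% headroom factor are all hypothetical; a real assessment would also cover I/O patterns, GPU needs, and network bandwidth.

```python
from dataclasses import dataclass

@dataclass
class Workload:
    name: str
    vcpus: int
    ram_gb: int
    storage_tb: float

def required_capacity(workloads, headroom=0.3):
    """Total the per-workload requirements and scale them by (1 + headroom)."""
    if headroom < 0:
        raise ValueError("headroom must be non-negative")
    totals = {"vcpus": 0.0, "ram_gb": 0.0, "storage_tb": 0.0}
    for w in workloads:
        totals["vcpus"] += w.vcpus
        totals["ram_gb"] += w.ram_gb
        totals["storage_tb"] += w.storage_tb
    return {resource: amount * (1 + headroom) for resource, amount in totals.items()}

# Hypothetical workload inventory.
inventory = [
    Workload("erp", vcpus=64, ram_gb=512, storage_tb=20.0),
    Workload("analytics", vcpus=128, ram_gb=1024, storage_tb=80.0),
    Workload("web", vcpus=32, ram_gb=128, storage_tb=5.0),
]
capacity = required_capacity(inventory)
for resource, amount in capacity.items():
    print(f"{resource}: {amount:.1f}")
```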

Modular data centers are crucial to building scalability and flexibility into an IT environment. From my experience overseeing construction and advising on data center strategy, I believe modularization lets businesses expand their data processing capacity quickly. Instead of constructing a large traditional data center whose resources will sit partly idle at first, an organization can deploy modular units sized for current requirements and add more as needs change or grow. This dramatically reduces upfront capital expenditure, makes it easier to adopt new technology profitably, and lets enterprises adapt quickly to changes in business strategy. Modular data centers can also be deployed faster than traditional ones and often run more energy-efficiently, making them an excellent choice for companies that want to scale their digital infrastructure cost-effectively.
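To make the incremental approach concrete, the Python sketch below plans module purchases against compounding demand. The starting load, 25% annual growth rate, and 250 kW module size are hypothetical; the point is that capacity is bought only when projected demand exceeds what is installed, rather than provisioned for the final year on day one.

```python
import math

def modular_rollout(initial_kw, annual_growth, module_kw, years):
    """Plan module purchases year by year for compounding IT load growth.

    Returns a list of (year, demand_kw, modules_installed, modules_added).
    """
    plan = []
    installed = 0
    for year in range(years + 1):
        demand_kw = initial_kw * (1 + annual_growth) ** year
        needed = math.ceil(demand_kw / module_kw)
        added = max(0, needed - installed)  # buy only the shortfall
        installed += added
        plan.append((year, demand_kw, installed, added))
    return plan

# Hypothetical figures: 400 kW today, 25% annual growth, 250 kW modules.
for year, demand_kw, installed, added in modular_rollout(400, 0.25, 250, years=4):
    print(f"year {year}: demand {demand_kw:.0f} kW, modules {installed} (+{added})")
```

With these inputs the plan starts with two modules and reaches four by year 3, whereas a traditional build sized for year 4 would pay for all four modules up front.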

Choosing a suitable location for a data center requires weighing many factors. Key factors include geographic stability to minimize the risk of natural disasters, accessibility for maintenance and operations staff, and robust local infrastructure that provides a reliable power supply and high-bandwidth network connections. The local climate is also critical, since it shapes the cooling strategy and the energy efficiency that can be achieved. Within the facility, it is essential to install high-quality, robust components that provide redundancy, low power consumption, and scalability, from the physical building materials to networking equipment and backup power supplies. An optimal infrastructure meets current technology needs and adapts to future development and load increases, which supports cost-effective operation over the long term.
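A common way to compare candidate sites against these factors is a weighted scoring matrix. The Python sketch below assumes each site has been rated from 1 to 10 on each criterion; the criteria names, weights, and ratings are hypothetical and would be set by the project team.

```python
# Hypothetical weights; they should sum to 1.0.
CRITERIA_WEIGHTS = {
    "geographic_stability": 0.30,
    "power_reliability": 0.25,
    "network_connectivity": 0.20,
    "climate_for_cooling": 0.15,
    "staff_accessibility": 0.10,
}

def score_site(ratings):
    """Weighted sum of 1-10 ratings; every criterion must be rated."""
    missing = set(CRITERIA_WEIGHTS) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    return sum(weight * ratings[name] for name, weight in CRITERIA_WEIGHTS.items())

candidates = {
    "Site A": {"geographic_stability": 9, "power_reliability": 7,
               "network_connectivity": 8, "climate_for_cooling": 6,
               "staff_accessibility": 7},
    "Site B": {"geographic_stability": 6, "power_reliability": 9,
               "network_connectivity": 9, "climate_for_cooling": 8,
               "staff_accessibility": 5},
}

ranked = sorted(candidates, key=lambda site: score_site(candidates[site]), reverse=True)
for site in ranked:
    print(f"{site}: {score_site(candidates[site]):.2f}")
```

Because Site B rates higher on power and climate, a different weighting could reverse the ranking, so the weights deserve as much scrutiny as the ratings.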

Every modern data center strategy must make efficient use of power. That means monitoring and managing power consumption across the whole facility. It also means using uninterruptible power supply (UPS) systems to ensure continuous power delivery and metered power distribution units (PDUs) that track power draw, the data needed to calculate power usage effectiveness (PUE). Advanced energy monitoring tools that provide accurate, real-time power consumption data help the data center run better. With that information, you can adjust your strategies to eliminate unnecessary waste and improve overall efficiency.
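The Python sketch below shows how per-PDU readings might be rolled up to rack level and compared with a power budget, flagging racks that are close to or over their limit. The reading format, the 8 kW rack budget, and the 80% warning threshold are assumptions for illustration; how readings are collected from real PDUs is out of scope.

```python
from collections import defaultdict

def rack_power_report(pdu_readings, rack_budget_kw, warn_fraction=0.8):
    """Sum (rack_id, pdu_id, kw) readings per rack and classify utilization."""
    per_rack = defaultdict(float)
    for rack_id, _pdu_id, kw in pdu_readings:
        per_rack[rack_id] += kw

    report = {}
    for rack_id, total_kw in sorted(per_rack.items()):
        utilization = total_kw / rack_budget_kw
        if utilization > 1.0:
            status = "over budget"
        elif utilization >= warn_fraction:
            status = "warning"
        else:
            status = "ok"
        report[rack_id] = (total_kw, utilization, status)
    return report

# Hypothetical readings from two PDUs (A and B feeds) per rack.
readings = [
    ("R01", "A", 3.3), ("R01", "B", 3.2),
    ("R02", "A", 4.2), ("R02", "B", 4.5),
    ("R03", "A", 1.0), ("R03", "B", 1.2),
]
for rack, (kw, util, status) in rack_power_report(readings, rack_budget_kw=8.0).items():
    print(f"{rack}: {kw:.1f} kW ({util:.0%}) {status}")
```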

Choosing between airflow (air) cooling and liquid cooling is a key decision in managing a data center’s thermal environment. Traditional air cooling is effective in most setups but may not suit high-density environments where the thermal load is much higher. Liquid cooling systems, by contrast, offer higher cooling efficiency through direct contact with heat-producing components and can reduce overall energy consumption. The right choice depends on the density and thermal requirements of the specific configuration.
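One way to see why air cooling struggles at high density is the standard sensible-heat approximation for air at sea level, CFM ≈ BTU/hr ÷ (1.08 × ΔT in °F), where 1 W ≈ 3.412 BTU/hr. The Python sketch below applies it per rack. The 20 °F temperature rise and the 30 kW review threshold are hypothetical planning values, not vendor limits.

```python
WATTS_TO_BTU_PER_HR = 3.412
# Hypothetical planning threshold: denser racks get a liquid cooling review.
LIQUID_COOLING_REVIEW_KW = 30.0

def required_airflow_cfm(heat_load_w, delta_t_f):
    """Airflow (CFM) to remove a sensible heat load with standard air.

    Uses Q[BTU/hr] = 1.08 * CFM * delta_T[F], rearranged for CFM.
    """
    if delta_t_f <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_load_w * WATTS_TO_BTU_PER_HR / (1.08 * delta_t_f)

def cooling_recommendation(rack_kw, delta_t_f=20.0):
    cfm = required_airflow_cfm(rack_kw * 1000, delta_t_f)
    if rack_kw > LIQUID_COOLING_REVIEW_KW:
        approach = "evaluate liquid cooling"
    else:
        approach = "air cooling likely sufficient"
    return round(cfm), approach

for kw in (10, 40):
    cfm, approach = cooling_recommendation(kw)
    print(f"{kw} kW rack: ~{cfm} CFM, {approach}")
```

A 40 kW rack needs four times the airflow of a 10 kW rack at the same temperature rise, which illustrates why very dense racks push designers toward liquid cooling.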

In today’s environmentally aware society, businesses must adhere to Environmental, Social, and Governance (ESG) standards and incorporate renewable power sources. Solar panels, wind turbines, and other renewable energy systems reduce our carbon footprint and promote sustainability. ESG standards consider the environmental impact of our operations, their social and political implications, and the governance practices needed to manage them responsibly. Such a holistic approach to sustainability improves a data center’s operational performance across the board and strengthens its corporate reputation.
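To make the carbon impact measurable, annual emissions can be estimated from IT load, PUE, the grid’s emission factor, and the share of energy from renewables. The Python sketch below assumes renewable energy carries zero operational emissions, which is a simplification; the 500 kW load, PUE of 1.5, and 0.4 kg CO2/kWh grid factor are hypothetical.

```python
HOURS_PER_YEAR = 8760

def annual_emissions_tonnes(avg_it_load_kw, pue, grid_kg_co2_per_kwh, renewable_share):
    """Estimate operational CO2 (tonnes/year) from facility energy drawn from the grid."""
    if not 0.0 <= renewable_share <= 1.0:
        raise ValueError("renewable_share must be between 0 and 1")
    facility_kwh = avg_it_load_kw * pue * HOURS_PER_YEAR
    grid_kwh = facility_kwh * (1.0 - renewable_share)  # renewables assumed zero-emission
    return grid_kwh * grid_kg_co2_per_kwh / 1000.0

baseline = annual_emissions_tonnes(500, pue=1.5, grid_kg_co2_per_kwh=0.4, renewable_share=0.0)
half_renewable = annual_emissions_tonnes(500, pue=1.5, grid_kg_co2_per_kwh=0.4, renewable_share=0.5)
print(f"all grid: {baseline:.0f} t CO2/yr, 50% renewable: {half_renewable:.0f} t CO2/yr")
```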

Data center security is essential to safeguard the physical infrastructure and protect the irreplaceable information housed within it. In my experience, a robust data center security program must address both physical and cyber threats.

First, physical security measures are essential. It is necessary to strictly control access to the data center using biometric access controls, and surveillance cameras should offer full coverage of all activities. Such measures help deter unauthorized access and provide a record of who has come and gone.
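The access-control logic can be sketched as a default-deny check that logs every attempt, granted or not. In this Python sketch the zone names and clearance levels are hypothetical, and `biometric_ok` stands in for the result returned by a real biometric reader.

```python
from datetime import datetime, timezone

# Hypothetical zones and the minimum clearance each requires.
ZONE_CLEARANCE = {"lobby": 0, "office": 1, "data_hall": 2, "secure_cage": 3}

access_log = []

def request_entry(badge_id, clearance, zone, biometric_ok):
    """Grant entry only if the zone is known, biometrics match, and clearance suffices."""
    required = ZONE_CLEARANCE.get(zone)
    granted = required is not None and biometric_ok and clearance >= required
    # Every attempt is recorded, which provides the audit trail of who came and went.
    access_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "badge": badge_id,
        "zone": zone,
        "granted": granted,
    })
    return granted

request_entry("B100", clearance=2, zone="data_hall", biometric_ok=True)
request_entry("B200", clearance=1, zone="data_hall", biometric_ok=True)
request_entry("B100", clearance=3, zone="secure_cage", biometric_ok=False)
denied = [entry for entry in access_log if not entry["granted"]]
print(f"{len(access_log)} attempts, {len(denied)} denied")
```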

On the cybersecurity front, firewalls and intrusion detection systems are fundamental components that serve as the first line of defense against outside threats. Regular software updates and patch management provide essential protection against known vulnerabilities. Network segmentation can also limit the harm from any intrusion by containing it within a single segment.
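Network segmentation can be modeled as a default-deny policy between subnets. The Python sketch below uses the standard `ipaddress` module to map hosts to segments and allows only listed (source, destination, port) flows, so a compromised web server cannot reach the database tier directly. The subnets, segment names, and ports are hypothetical, and the sketch models policy logic only; enforcement would happen in firewalls or switches.

```python
import ipaddress

# Hypothetical segments.
SEGMENTS = {
    "web": ipaddress.ip_network("10.0.1.0/24"),
    "app": ipaddress.ip_network("10.0.2.0/24"),
    "db": ipaddress.ip_network("10.0.3.0/24"),
    "mgmt": ipaddress.ip_network("10.0.9.0/24"),
}

# Default deny: only these flows are permitted.
ALLOWED_FLOWS = {
    ("web", "app", 8443),
    ("app", "db", 5432),
    ("mgmt", "web", 22),
    ("mgmt", "app", 22),
    ("mgmt", "db", 22),
}

def segment_of(ip):
    addr = ipaddress.ip_address(ip)
    for name, network in SEGMENTS.items():
        if addr in network:
            return name
    return None  # unknown hosts belong to no segment

def is_allowed(src_ip, dst_ip, port):
    src, dst = segment_of(src_ip), segment_of(dst_ip)
    if src is None or dst is None:
        return False
    return (src, dst, port) in ALLOWED_FLOWS

print(is_allowed("10.0.1.10", "10.0.2.20", 8443))  # web -> app
print(is_allowed("10.0.1.10", "10.0.3.30", 5432))  # web -> db, blocked
```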

Encrypting data both at rest and in transit ensures that even if data is intercepted or exposed, it remains unreadable without the keys. Regular vulnerability assessments and penetration tests can identify and eliminate weaknesses before harm is done.

Finally, a comprehensive disaster recovery plan is essential. It ensures that operations can be restored quickly, with minimal data loss, after a breach or failure.
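A disaster recovery plan usually states a recovery point objective (RPO), the maximum amount of data loss, measured in time, that the business can tolerate. The Python sketch below checks backup history against an RPO: any gap between consecutive backups, or between the latest backup and now, that exceeds the RPO is a window in which a failure could lose more data than allowed. The timestamps and the 6-hour RPO are hypothetical.

```python
from datetime import datetime, timedelta

def rpo_violations(backup_times, rpo, now):
    """Return (start, end, gap) for every interval without a backup longer than rpo."""
    if not backup_times:
        raise ValueError("no backups recorded")
    checkpoints = sorted(backup_times) + [now]
    violations = []
    for earlier, later in zip(checkpoints, checkpoints[1:]):
        gap = later - earlier
        if gap > rpo:
            violations.append((earlier, later, gap))
    return violations

backups = [
    datetime(2024, 5, 1, 0, 0),
    datetime(2024, 5, 1, 4, 0),
    datetime(2024, 5, 1, 12, 0),
]
now = datetime(2024, 5, 1, 14, 0)
for start, end, gap in rpo_violations(backups, rpo=timedelta(hours=6), now=now):
    print(f"gap of {gap} between {start:%H:%M} and {end:%H:%M} exceeds RPO")
```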

When combined, these measures secure a data center’s physical and virtual resources and establish trust with clients who rely on our data security protocols.

Management software and tools have had a profound and wide-ranging impact on data center operations. These technologies streamline and automate most processes, from monitoring server health to deploying virtual machines, significantly increasing operational efficiency. They also act as an early warning system, helping IT staff spot problems before they escalate, so keeping things running smoothly takes less effort. In addition, they provide insight for capacity planning based on resource utilization, which helps lower operational costs while raising efficiency. Centralized management interfaces also make it easier to adhere to data security standards, an essential function for guaranteeing compliance in today’s cyber-threat environment. The upshot is that advanced management software and tools can transform data centers from simple storage warehouses into intelligent, secure facilities with room to grow and the power to meet modern business demands.
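The capacity-planning insight mentioned above often starts with a simple trend: fit a line to recent utilization and estimate when it will reach capacity. The Python sketch below uses an ordinary least-squares fit; the daily storage figures and 150 TB capacity are hypothetical, and a linear trend is an assumption that real growth may not follow.

```python
def days_until_full(daily_used_tb, capacity_tb):
    """Least-squares linear trend on daily usage, extrapolated to capacity.

    Returns days after the last sample, or None if usage is flat or shrinking.
    """
    n = len(daily_used_tb)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(daily_used_tb) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(daily_used_tb))
    slope = sxy / sxx  # TB per day
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    day_full = (capacity_tb - intercept) / slope
    return max(0.0, day_full - (n - 1))

usage = [100, 102, 104, 106, 108]  # hypothetical TB used on five consecutive days
print(f"storage full in about {days_until_full(usage, capacity_tb=150):.0f} days")
```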

Integrating Data Center Infrastructure Management (DCIM) software into data center operations dramatically improves the ability to manage and monitor every aspect of the facility’s physical infrastructure from a single platform. DCIM software bridges IT and facility management, offering real-time information on power usage, environmental conditions, and system behavior. This more comprehensive picture supports smarter decisions about how infrastructure resources are used, which raises overall efficiency. In essence, DCIM is a data center’s central nervous system: it lets managers detect potential problems early, keep everything running reliably, and schedule maintenance so that it neither impedes performance nor causes excessive downtime.
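As a small example of the environmental monitoring a DCIM platform performs, the Python sketch below smooths rack inlet temperatures with a rolling average and compares the result with the ASHRAE recommended inlet range of 18 to 27 °C. The class is hypothetical and not any DCIM product’s API; the window size is an assumption.

```python
from collections import deque

ASHRAE_RECOMMENDED_C = (18.0, 27.0)  # recommended server inlet range

class InletMonitor:
    """Hypothetical rolling-average monitor for one rack's inlet sensor."""

    def __init__(self, window=3):
        self.readings = deque(maxlen=window)  # keeps only the latest `window` samples

    def add(self, temp_c):
        self.readings.append(temp_c)
        average = sum(self.readings) / len(self.readings)
        low, high = ASHRAE_RECOMMENDED_C
        if average > high:
            return average, "above recommended range"
        if average < low:
            return average, "below recommended range"
        return average, "within recommended range"

monitor = InletMonitor(window=3)
results = [monitor.add(t) for t in (24.0, 25.0, 26.0, 28.0, 30.0)]
for average, status in results:
    print(f"{average:.1f} C: {status}")
```

Averaging means the single 28 °C reading does not raise an alert on its own, while the sustained rise that follows does.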

Hyperconverged Infrastructure (HCI) is changing data center design in unprecedented ways. HCI combines computing, storage, networking, and virtualization: it takes four traditionally separate elements of the data center and melds them into one system. This consolidation simplifies the physical architecture of data centers and reduces the need for separate infrastructure silos. In my experience, HCI’s impact on efficiency is stark. By centralizing resources, we have significantly improved scalability and flexibility, enabling rapid deployment of resources on demand. HCI’s streamlined approach also reduces the complexity of managing different system types, lowers operating costs, and reduces the potential for human error. In short, HCI optimizes the physical layout of data centers and improves their operational efficiency, making it an indispensable tool for designing modern, future-ready data centers.
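Because HCI pools compute and storage across nodes, sizing usually accounts for data replication and for surviving a node failure. The Python sketch below estimates usable capacity for an N+1 cluster with a given replication factor; the node counts, per-node resources, and replication factor are hypothetical, and real HCI platforms reserve additional overhead that this ignores.

```python
def hci_cluster_capacity(nodes, cores_per_node, raw_tb_per_node,
                         replication_factor=2, spare_nodes=1):
    """Usable cores and storage while `spare_nodes` nodes are down.

    Each block of data is stored `replication_factor` times across nodes,
    so usable storage is the surviving raw capacity divided by that factor.
    """
    if nodes <= spare_nodes:
        raise ValueError("cluster must have more nodes than spares")
    if replication_factor < 1:
        raise ValueError("replication factor must be at least 1")
    surviving = nodes - spare_nodes
    return {
        "cores": surviving * cores_per_node,
        "usable_tb": surviving * raw_tb_per_node / replication_factor,
    }

for n in (4, 6):
    print(n, "nodes:", hci_cluster_capacity(n, cores_per_node=32, raw_tb_per_node=20))
```

Adding a node grows compute and storage together, which is the scaling property described above.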

In the era of big data, one of today’s most pressing tasks is building data centers that can handle high-speed, high-volume workloads while remaining efficient and scalable and preserving data integrity. As data volumes keep multiplying, data centers need to adopt newer technologies such as advanced storage solutions and high-throughput networking. Solid-state drives (SSDs) reduce data access latency, while software-defined networking (SDN) provides finer control over data flows. Effective data compression and deduplication techniques further improve space utilization. It is also essential to deploy robust data analytics tools that provide real-time insight, so the data center can respond dynamically to changing workloads. By integrating these technologies, data centers can handle the growing tide of data and derive maximum value from it.
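Deduplication and compression can be combined as sketched below: data is split into chunks, each chunk is identified by its SHA-256 hash, and only chunks not already stored are compressed and kept. This Python sketch uses fixed-size chunks for simplicity (many production systems use variable-size, content-defined chunking), and the 4 KiB chunk size and sample data are hypothetical.

```python
import hashlib
import zlib

def dedup_and_compress(data, chunk_size=4096):
    """Store each unique chunk once (compressed); return store, recipe, stored bytes."""
    store = {}   # SHA-256 digest -> compressed chunk
    recipe = []  # ordered digests needed to rebuild the data
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        if digest not in store:
            store[digest] = zlib.compress(chunk)
        recipe.append(digest)
    stored_bytes = sum(len(blob) for blob in store.values())
    return store, recipe, stored_bytes

def restore(store, recipe):
    """Rebuild the original bytes by decompressing chunks in recipe order."""
    return b"".join(zlib.decompress(store[digest]) for digest in recipe)

# Hypothetical highly redundant data: two distinct 4 KiB blocks repeated 50 times.
data = (b"A" * 4096 + b"B" * 4096) * 50
store, recipe, stored_bytes = dedup_and_compress(data)
print(f"{len(data)} bytes in, {len(store)} unique chunks, {stored_bytes} bytes stored")
```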

Integrating future technologies into data center design focuses on the efficiency, scalability, and flexibility needed to keep pace with increasing data processing demands. As this trend evolves, AI and machine learning are becoming integral to predictive analysis and automation, ensuring that resources are allocated optimally to service needs. Edge computing architectures cut latency substantially by processing data closer to its source, which improves response times and makes more efficient use of bandwidth. In addition, the move toward green technology, including renewable energy and more efficient cooling for data centers, is aimed at environmental protection. By incorporating these technologies, data centers can not only withstand future data volumes but also extract more value from the data they contain.

The world has changed. Companies were once content to operate from a single building; now 55% of staff no longer have a fixed office, a share expected to rise to 75% within the next ten years.[1] It is no longer possible or desirable to store all data on a single computer. When your company’s data may be scattered across servers far apart and overseas, it makes sense to break data handling into shorter, cheaper steps. With the advent of cloud services and mobile technology, we have clearly entered an era in which mobile phones can serve as remote interfaces to databases. Today’s competitive brands should favor flexible cloud solutions over more conventional but ultimately less flexible forms of service delivery.

TNUpL: US Companies’ Software Design Practices. With the spread of fiber access technologies such as GPON and FTTx, outsourcers in the engineering and construction industry are taking a more proactive approach to eliminating waste. Their practices can be summarized as “TNUpL”: think one step ahead before designing your plan; strip out non-value-added steps, such as over-documentation or excessive formality; plan ahead during the static planning phase; adopt cutting-edge technologies for computerization and documentation; and package designs before products deviate from agreed norms, so users can see orders as they come off the assembly line.

When companies operate an e-commerce website, they don’t need to store user details on the web server itself. A notable feature of e-commerce websites is that most applications connect to their databases over the Internet, tailoring and integrating data from remote sources. Remote database technology also frees people from the tyranny of the desktop PC when they want to work. This trend is less about progress for its own sake than a reflection of how far our services have come.

Managing Costs: With adaptive strategies, such as moving to cloud-based services or getting more out of existing on-premises resources, data centers can store and process data for far less money. For example, Amazon Web Services cut human resources costs for Windows workloads by up to 70% by optimizing which staff worked on them.
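Comparing options on a monthly basis is one simple way to test such cost claims. The Python sketch below amortizes on-premises hardware and adds energy (scaled by PUE) and staffing; every figure is hypothetical, and a real comparison would also include floor space, networking, licensing, and cloud data-transfer charges.

```python
HOURS_PER_MONTH = 730  # average (8760 hours / 12 months)

def on_prem_monthly_cost(hardware_capex, amortization_months, it_load_kw,
                         pue, price_per_kwh, staff_monthly):
    """Amortized hardware + facility energy + staffing, per month."""
    amortized_hardware = hardware_capex / amortization_months
    energy = it_load_kw * pue * HOURS_PER_MONTH * price_per_kwh
    return amortized_hardware + energy + staff_monthly

on_prem = on_prem_monthly_cost(hardware_capex=360_000, amortization_months=36,
                               it_load_kw=20, pue=1.5, price_per_kwh=0.12,
                               staff_monthly=6_000)
cloud = 15_000  # hypothetical monthly cloud bill for the same workloads
print(f"on-prem ${on_prem:,.0f}/month vs cloud ${cloud:,.0f}/month "
      f"({(on_prem - cloud) / on_prem:.0%} difference)")
```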

Administrative Practices: Adopting new technologies and environmental strategies, such as renewable energy sources and green data center designs, can sharply reduce your carbon footprint while aligning with global sustainability initiatives. As Microsoft’s commitment to become carbon-negative by 2030 shows, the future of business is sustainable.

Above all, any data center that wants to stay competitive through continuous improvement must implement modern strategies. This is how it can meet today’s demands while positioning itself for tomorrow’s challenges and opportunities in a fast-changing digital world.

The future of data center design and technology is a frontier full of exciting possibilities. As an industry expert, I predict a move toward more resilient, efficient, and sustainable operations. Here are the trends that will drive this development:

Power Efficiency: The push toward sustainability will drive adoption of more energy-efficient technology, including cooling systems that consume less electricity and renewable energy sources such as wind and solar power integrated directly into data center operations.

Sizing and Development: Data centers will increasingly use modular designs that allow simple expansion. Capacity will be added as needed rather than all in advance, lowering upfront costs and reducing wasted resources.

Edge Computing: The big data age, combined with the need for instant data collection, is driving the rise of edge computing. In this approach, data center capacity is placed close to where data is generated, which reduces latency and greatly shortens response times.
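The latency benefit of edge sites follows partly from physics: light in optical fiber travels at roughly 200,000 km/s, or about 200 km per millisecond. The Python sketch below estimates propagation round-trip time only; it ignores routing, queuing, and processing delays, and the distances and route-stretch factor are hypothetical.

```python
FIBER_KM_PER_MS = 200.0  # approximate speed of light in optical fiber

def propagation_rtt_ms(distance_km, route_stretch=1.0):
    """Round-trip propagation delay.

    route_stretch > 1 models fiber paths longer than the straight-line distance.
    """
    if distance_km < 0 or route_stretch < 1.0:
        raise ValueError("invalid distance or route stretch")
    return 2 * distance_km * route_stretch / FIBER_KM_PER_MS

edge = propagation_rtt_ms(50)        # hypothetical nearby edge site
regional = propagation_rtt_ms(2000)  # hypothetical distant central data center
print(f"edge: {edge:.1f} ms, central: {regional:.1f} ms round trip")
```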

AI and Automation: AI and automation will play critical roles in future data centers. AI will help achieve predictive maintenance and real-time optimization of power and cooling, making data centers more efficient and more reliable than they are today.
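Production predictive-maintenance systems often rely on trained machine-learning models, but the underlying idea can be sketched with a simple statistical stand-in: track an exponentially weighted moving average (EWMA) of a sensor and flag readings that drift too far from it. In this Python sketch the fan-current readings, smoothing factor `alpha`, and 15% tolerance are hypothetical.

```python
def ewma_alerts(readings, alpha=0.3, tolerance=0.15):
    """Flag readings deviating from the running EWMA by more than `tolerance` (a fraction).

    Returns (index, value, baseline) tuples for each flagged reading.
    """
    if not readings:
        return []
    baseline = readings[0]
    alerts = []
    for i, value in enumerate(readings[1:], start=1):
        if abs(value - baseline) > tolerance * abs(baseline):
            alerts.append((i, value, baseline))
        # Update after the check, so a reading is never compared with a
        # baseline it has already shifted.
        baseline = alpha * value + (1 - alpha) * baseline
    return alerts

fan_current_amps = [1.00, 1.02, 0.99, 1.01, 1.00, 1.35, 1.40]  # hypothetical samples
for index, value, baseline in ewma_alerts(fan_current_amps):
    print(f"sample {index}: {value:.2f} A vs baseline {baseline:.2f} A")
```

Because flagged readings still feed the baseline, a persistent shift will eventually stop alerting; a real system might freeze the baseline while an alert is open.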

Improved Security Measures: As cyber threats become more complex and sophisticated, data centers will invest in advanced security, including stronger cybersecurity protocols and physical security enhancements, designed to protect sensitive data from threats of every kind.

The design and technology of future data centers will center on innovation aimed at sustainability, efficiency, and security. It is an exciting time to be involved in the field, and there is hard work ahead to meet tomorrow’s digital demands.

Q: What are the best practices in modern data center design? A: Key best practices include scalability, so the facility can expand easily to support future growth; energy efficiency, which reduces operational costs; and advanced management techniques that make the data center easier to run. Robust cooling systems that keep equipment at optimal temperatures and modular designs for easy expansion are also critical. Finally, full compliance with data center design standards helps ensure the facility’s safety and reliability.

Q: How can data center efficiency be improved? A: Use energy-efficient power and cooling systems. For example, free cooling and chillers designed specifically for data center environments can significantly reduce annual electricity consumption. Using virtualization to reduce the number of physical machines, improving airflow, and optimizing rack layouts can also play a significant role. Further gains come from real-time monitoring and resource optimization with data center management tools.
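Consolidating virtual machines onto fewer physical hosts is a bin-packing problem. The Python sketch below uses first-fit decreasing, a common heuristic (not necessarily what any particular virtualization platform uses), on a single dimension, vCPUs; the VM sizes and 16-vCPU host capacity are hypothetical, and real placement would also weigh memory, storage, and failover headroom.

```python
def consolidate(vm_vcpus, host_capacity):
    """First-fit decreasing: place the largest VMs first on the first host with room."""
    hosts = []  # each entry: [remaining_vcpus, [vm names]]
    for name, demand in sorted(vm_vcpus.items(), key=lambda item: item[1], reverse=True):
        if demand > host_capacity:
            raise ValueError(f"{name} needs more than one host's capacity")
        for host in hosts:
            if host[0] >= demand:
                host[0] -= demand
                host[1].append(name)
                break
        else:  # no existing host had room, so power on another
            hosts.append([host_capacity - demand, [name]])
    return [vms for _remaining, vms in hosts]

vms = {"vm1": 8, "vm2": 4, "vm3": 12, "vm4": 6, "vm5": 2, "vm6": 10, "vm7": 4, "vm8": 2}
placement = consolidate(vms, host_capacity=16)
print(f"{len(vms)} VMs on {len(placement)} hosts: {placement}")
```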

Q: What role do cooling systems play in data center operations? A: Cooling systems play a crucial role. By keeping servers and other equipment within an acceptable temperature range, they prevent the overheating that leads to degraded performance, downtime, and hardware failures. Effective cooling keeps equipment performing steadily at its best and contributes to the overall reliability of data center operations. Cooling also has a major effect on energy use and therefore on a data center’s efficiency.

Q: How does network design affect a modern data center? A: The efficiency of data management and access in a modern data center depends directly on its network design, which also affects scalability, security, and usability. A well-planned data center network provides high availability, low latency, and ample bandwidth to meet the company’s business requirements. Building redundant power and network paths, along with data backup systems, into the design keeps the data center running during outages or malfunctions; all of these aspects are essential.
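The value of redundancy can be quantified with basic availability arithmetic: components in series multiply their availabilities, while redundant components in parallel fail only if all of them fail, so A = 1 - ∏(1 - Aᵢ). The Python sketch below assumes failures are independent, which shared-cause failures in practice can violate, and the 99.9% component availabilities are hypothetical.

```python
from math import prod

MINUTES_PER_YEAR = 525_600

def series(*availabilities):
    """All components must work (for example, a switch and its power feed)."""
    return prod(availabilities)

def parallel(*availabilities):
    """Any one redundant path suffices; assumes independent failures."""
    return 1 - prod(1 - a for a in availabilities)

single_path = series(0.999, 0.999)  # hypothetical switch + power feed
redundant = parallel(single_path, single_path)
for label, a in (("single path", single_path), ("redundant pair", redundant)):
    downtime = (1 - a) * MINUTES_PER_YEAR
    print(f"{label}: availability {a:.6f}, ~{downtime:.1f} min downtime/year")
```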

Q: What are the latest innovations in data center design? A: Recent innovations include using recycled or renewable energy sources to power operations. Machine learning and artificial intelligence enable data center management that predicts problems before they happen, and some companies already do this. Another trend is liquid cooling, which uses water or refrigerant to remove heat more efficiently. Data centers built from preassembled or modular components take less time to construct and offer more room to scale. In addition, edge computing, which places data centers closer to end users so that services are delivered faster and with fewer delays, is growing within the industry.

Q: What role does colocation play? A: Colocation is often critical in the data center market. It allows organizations and individuals to lease physical space in a third-party data center for their servers and computing hardware, so businesses no longer need the capital to build and manage their own facilities. Colocation also offers room for growth, greater diversity in connectivity, and better disaster recovery capability, which makes it an attractive option for many organizations seeking a data center solution.

Q: How should business requirements guide the design of a data center? A: Designing a data center to meet specific business requirements calls for attention to capacity planning, scalability, reliability, and security. This means understanding current and future computing needs and designing a scalable, flexible data center that grows with the business. High reliability, achieved through redundant systems and backup generators, is what makes continuous operation possible. You must also provide comprehensive security measures to protect data from physical and cyber threats. Building infrastructure that reflects the business’s specific needs, while considering efficiency and sustainability, produces a robust, thriving environment for data storage.

Q: What management tools are essential for effective data center management? A: Essential tools include Data Center Infrastructure Management (DCIM) software, which provides comprehensive oversight of physical and virtual data center assets. Network monitoring tools are also essential for real-time performance tracking and traffic management, and environmental monitoring tools let you control the data center climate so hardware performs at its best. Automation tools further streamline operations, reduce manual work, and improve efficiency. Selecting the right mix of these tools is crucial to supporting the increasingly complex demands of modern data center facilities and keeping them running smoothly and efficiently.