In today's computing landscape, data center efficiency is crucial to business and technological progress. Exponential data growth, coupled with rising demand for computational power, has led to greater heat production, which in turn requires more advanced cooling systems. Proper cooling keeps the machines in a data center reliable, efficient, and durable. This article examines popular solutions for cooling data centers today, covering a wide range of technologies and methods for addressing thermal challenges. We will discuss traditional but still effective systems such as air conditioners (ACs), compare them with newer approaches like liquid cooling and free cooling, consider when each type is the best fit, and, where possible, look at recent developments in the field.

Data centers need to keep temperatures within a safe range. If equipment gets too hot, hardware can fail, slow down, or shut down completely. Without cooling that works well, servers, storage systems, and networking devices wear out sooner than they should. Correct thermal management is also vital for cutting energy usage and operating expenses, since cooling systems tend to be among the biggest power consumers in any data center. In short, cooling protects equipment reliability while supporting consistent performance and energy savings.

Different types of data center cooling systems are available, each with distinct features and advantages. In this part, we will describe some common cooling methods according to current industry standards as well as information obtained from reputable sources.

Air-based Cooling Systems

Air-based cooling systems are the conventional and most widely adopted approach to heat control in data centers. This category includes direct expansion (DX) and chilled water systems. A DX system uses refrigerant to absorb heat directly from the air within the facility and then release it outside. In a chilled water system, a central plant circulates chilled water to air handling units, which in turn cool the air. These systems are relatively inexpensive and easy to implement, and their simplicity makes them well suited to small or legacy data centers.

Liquid Cooling Systems

With increasing compute densities and power requirements, many organizations have started adopting liquid cooling systems, which offer better efficiency and performance. Liquid cooling falls into two categories: direct-to-chip and immersion cooling. Direct-to-chip cooling circulates coolant close to the processors to carry away the heat they produce, whereas in immersion cooling, entire server components are submerged in a thermally conductive liquid. These techniques improve heat removal, are highly energy efficient, and greatly reduce hotspots, making them suitable for high-performance computing environments.

Free Cooling

In free cooling, low ambient temperatures are used to reduce reliance on traditional mechanical cooling. Air-side economization brings cool outside air into the facility, while water-side economization uses cold external water to maintain internal temperatures. Free cooling is very energy efficient and cost-effective, especially in regions with colder climates: reduced use of compressor-based chillers lowers both operating expenses and the carbon emissions associated with them.

Considering these different cooling methods enables operators to choose solutions that address their specific operational needs, ensuring continued operation, reducing energy usage, and improving equipment reliability within data centers.

The major problem in data center cooling is handling the high heat densities generated by current servers and networking equipment. As technology advances, the power drawn by each server increases, raising thermal output and making it harder to maintain optimal operating temperatures. Dissipating this heat efficiently and preventing failures often requires elaborate and expensive cooling infrastructure.

Energy efficiency is also a major concern with cooling systems. CRAC units and other traditional methods can consume a great deal of energy, increasing operating costs and leaving a bigger carbon footprint. Moving to an energy-saving liquid or free cooling solution demands significant initial capital outlay and meticulous planning, which presents a financial challenge for many organizations.

Data centers also face geographical and environmental issues. Where ambient temperatures are higher, free cooling becomes less feasible, forcing reliance on mechanical cooling, which uses more energy and raises costs. Facilities in regions with high humidity or frequent dust storms need extra filtration and humidity control to safeguard delicate equipment, which complicates cooling further.

Finally, achieving uniform airflow distribution throughout the data center remains essential but difficult. Poor air distribution can cause temperature fluctuations and hotspots that degrade the performance and lifespan of devices. Advanced airflow management methods such as hot aisle containment (HAC) can help, but only if the design is executed precisely.

Data centers use liquid cooling to take the heat generated by electronic parts and move it somewhere else. One way is to circulate a coolant through cold plates or heat exchangers that touch hot chips like CPUs and GPUs. The heated liquid then flows to a remote heat exchanger, where the heat is released into the air or transferred to another part of the cooling system outside the computer room. Because it removes heat directly at the source, this method can save energy: it is more thermally efficient than traditional air cooling, which has to lower the temperature uniformly throughout an entire space. Liquid cooling also allows better control over high-density heat loads, so data centers can sustain full performance even under heavy computational workloads.
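To make the efficiency argument concrete, here is a minimal sketch that compares how much air and how much water must flow to carry the same heat load, using the standard relation Q = ṁ · c_p · ΔT. The fluid properties are rounded room-temperature approximations, and the 30 kW rack and 10 K temperature rise are hypothetical values chosen only for illustration.

```python
# Rounded fluid properties near room temperature (approximate, assumed values).
AIR = {"density": 1.2, "cp": 1005.0}      # kg/m^3, J/(kg*K)
WATER = {"density": 997.0, "cp": 4180.0}  # kg/m^3, J/(kg*K)


def volumetric_flow_m3_per_s(heat_w, delta_t_k, fluid):
    """Volume flow needed to carry heat_w watts with a delta_t_k temperature rise.

    Based on Q = m_dot * cp * dT, where m_dot = density * volume_flow.
    """
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow = heat_w / (fluid["cp"] * delta_t_k)  # kg/s
    return mass_flow / fluid["density"]             # m^3/s


if __name__ == "__main__":
    rack_heat_w = 30_000.0  # hypothetical 30 kW rack
    delta_t_k = 10.0        # hypothetical coolant temperature rise
    air = volumetric_flow_m3_per_s(rack_heat_w, delta_t_k, AIR)
    water = volumetric_flow_m3_per_s(rack_heat_w, delta_t_k, WATER)
    print(f"air:   {air:.2f} m^3/s")
    print(f"water: {water * 1000:.2f} L/s")
    print(f"air needs about {air / water:.0f}x the volume flow of water")
```

Under these assumptions, water moves the same heat with a volume flow thousands of times smaller than air, which is the main reason liquid loops cope better with dense heat loads.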

Liquid cooling systems have several advantages over traditional air-based cooling methods. First, they have better thermal efficiency, which means they can dissipate the heat generated by densely packed electronic components. This efficiency saves energy, cutting operating costs and contributing to an organization's sustainability efforts. Second, liquid cooling handles higher heat densities, making it possible to fit more powerful computers into a data center without expanding its footprint. This is particularly useful in high-performance computing (HPC) environments, where rapid growth may be needed but scale-out options are limited by physical constraints such as space or cooling capacity. Third, noise levels can be dramatically reduced, because liquid-cooled systems need far fewer fans than their air-cooled counterparts, if any at all. Finally, and perhaps most importantly, liquid cooling can improve reliability and longevity, because it maintains stable temperatures and so minimizes thermal stress on critical parts.

Although liquid cooling systems have many advantages, they also come with their share of challenges. The first is a higher initial setup and installation cost compared with traditional air-based cooling. These systems require specialized equipment such as pumps, radiators, and coolant channels, which may mean modifying the existing infrastructure. Second, managing and maintaining a liquid cooling system requires trained personnel with specialist knowledge. It also requires constant vigilance and regular maintenance checks, since any leak or failure can cause serious damage to electronic components. Third, system design is more complex: most liquid cooling systems require accurate thermal management planning and careful integration into the overall data center architecture. Lastly, there are environmental concerns about coolant disposal, since some fluids contain substances that need careful handling to avoid contaminating the surrounding environment. However, for high-density data centers and HPC environments, the long-term benefits often outweigh these initial difficulties.

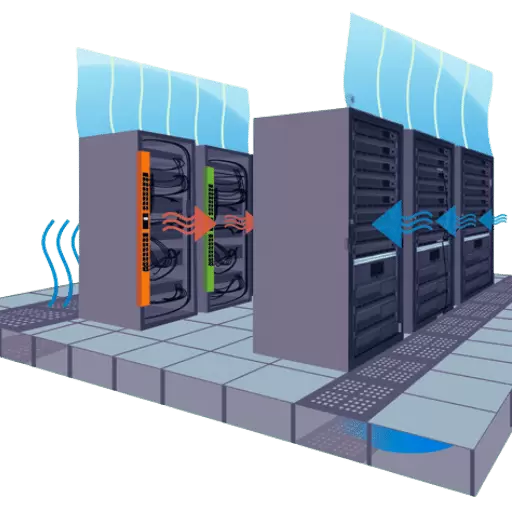

Air cooling in data centers works by moving cold air over hot components so that heat can be dissipated. Fans, air conditioners, and heat exchangers are commonly used to achieve this. The most popular methods include raised floors, where cool air is supplied from beneath the equipment, and hot aisle/cold aisle containment, which separates hot and cold airflows for optimal cooling. Direct expansion (DX) systems and chilled water systems are also widely used. DX systems use refrigerant to cool the air, while chilled water systems pass chilled water through coils to absorb heat. Air cooling costs less than liquid cooling and is easier to implement, but it may not work efficiently when a lot of heat is produced within a small space, as in a high-density data center.

Effective airflow management is crucial for maintaining ideal operating temperatures and improving power usage effectiveness in data centers. The main method is hot aisle/cold aisle containment, in which rows of racks alternate so that cold air intakes face one aisle and hot air exhausts face the next; this arrangement helps prevent hot and cold airstreams from mixing. In addition, blanking panels can be used to fill unused rack spaces so that air does not recirculate and cooled air is directed where it is most needed. Another strategy is to use variable-speed fans that adjust their speed in response to current cooling requirements, reducing energy consumption. Lastly, in underfloor distribution systems, cable cutouts should be sealed against air leakage and uniform pressure should be maintained beneath the raised floor. These measures enable better thermal management within data centers, leading to savings on operating costs.
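The saving from variable-speed fans can be estimated with the fan affinity laws, under which airflow scales roughly linearly with fan speed while power scales with the cube of speed. The sketch below is an idealized illustration under that assumption; real fans deviate from the cube law because of motor, drive, and system-pressure effects, and the function name is hypothetical.

```python
def fan_power_fraction(speed_fraction):
    """Idealized fraction of full-speed fan power at a given fraction of full speed.

    Applies the fan affinity (cube) law: power is proportional to speed cubed.
    """
    if not 0.0 <= speed_fraction <= 1.0:
        raise ValueError("speed_fraction must be between 0 and 1")
    return speed_fraction ** 3


if __name__ == "__main__":
    for speed in (1.0, 0.9, 0.8, 0.5):
        power = fan_power_fraction(speed)
        print(f"{speed:.0%} speed -> about {power:.1%} of full fan power")
```

In this idealized model, running a fan at 80% speed draws about half of its full-speed power, which is why matching fan speed to the actual cooling load can pay off quickly.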

Many data centers and similar facilities prefer air cooling systems because they have several advantages. First, these systems are generally cheaper to set up and maintain than liquid cooling alternatives. With fewer parts involved, they are simpler to look after and less likely to break down. Air cooling is also adaptable, so it scales easily with the fluctuating demands of a data center. In environments with lower heat density, it can be energy efficient because it makes use of the existing ventilation infrastructure. In addition, air cooling carries none of the leak or spill risks that come with liquid coolants, which makes it a safe and reliable option for data center operation. Together, these characteristics make air cooling a cost-effective and efficient approach to thermal management.

Hot aisle/cold aisle containment is a data center design strategy that optimizes the efficiency of air cooling systems. The primary idea is to keep hot and cold air separate so they do not mix, improving cooling performance while reducing energy consumption. In this configuration, server racks are placed in rows so that the fronts of adjacent rows face each other across a cold aisle, where cool air is supplied, and the backs face each other across a hot aisle, where hot air is exhausted. The aisles are enclosed by physical barriers that prevent hot exhaust air from recirculating and mixing with the incoming cold air. Containment makes the cooling infrastructure more efficient because it allows more accurate temperature control and reduces the workload on cooling units. It improves heat distribution across the whole facility while saving power and prolonging equipment life.
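To illustrate why mixing matters, the sketch below estimates the air temperature at a rack inlet when a fraction of hot exhaust air recirculates into the cold aisle. It assumes simple mass-weighted mixing of two airstreams with the same specific heat; the function name and the example temperatures are hypothetical.

```python
def inlet_temperature_c(supply_c, exhaust_c, recirculation_fraction):
    """Estimate rack inlet temperature when exhaust air mixes into the supply air.

    recirculation_fraction is the share of inlet air that is recirculated
    exhaust (0.0 means perfect containment, 1.0 means no fresh supply air).
    """
    if not 0.0 <= recirculation_fraction <= 1.0:
        raise ValueError("recirculation_fraction must be between 0 and 1")
    r = recirculation_fraction
    return (1.0 - r) * supply_c + r * exhaust_c


if __name__ == "__main__":
    supply_c, exhaust_c = 18.0, 35.0  # hypothetical supply and exhaust temperatures
    for r in (0.0, 0.1, 0.2, 0.4):
        inlet = inlet_temperature_c(supply_c, exhaust_c, r)
        print(f"{r:.0%} recirculation -> inlet about {inlet:.1f} C")
```

Even a modest recirculation fraction raises the inlet temperature by several degrees in this model, so the cooling plant has to supply colder air to compensate. Containment aims to push that fraction toward zero.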

Implementing aisle containment in a data center brings several benefits. First, it makes the facility much more energy efficient, because it stops warm air from mixing with cold air and so decreases the power needed for cooling. Second, by controlling airflow to each row or cabinet and keeping temperatures consistent throughout the room, it allows better cooling management and reduces the chance of expensive equipment shutting down due to overheating. Last but not least, it boosts the overall cooling capability of the infrastructure, which means higher computing densities can be achieved without compromising the reliability or performance that data center operations require. Taken together, these advantages foster both sustainability and cost-effectiveness.

To achieve optimal performance and efficiency when implementing hot and cold aisle containment in data centers, a number of important steps need to be followed:

If these procedures are followed, better cooling and airflow can be achieved, saving energy while supporting demanding computing environments in data centers.

To make computer server cooling more effective, several energy-saving strategies can be used:

Putting all these energy-efficient methods into practice can greatly improve overall energy performance while reducing operating costs in data centers.

Heat exchangers play an important role in improving the cooling efficiency of data centers. Generally, they work by transferring heat from the hot air inside the data center to a cooling medium, such as water or refrigerant, that carries the heat away. The following are the two common types of heat exchangers used in most data centers:

Integrating these devices into their cooling systems allows operators to take advantage of natural conditions, saving power while improving the sustainability and cost-efficiency of data center cooling.

To accurately measure and improve data center cooling efficiency, here are some of the most important metrics:

Tracking these metrics enables managers to monitor their cooling systems consistently, cutting operating expenses while promoting sustainability.

Immersion cooling is gaining popularity as a way of dissipating heat in data centers because of its high efficiency, especially in high-density computing environments. The technique involves submerging IT equipment, such as servers and storage devices, in a thermally conductive dielectric liquid. Because these fluids do not conduct electricity, they can flow directly over the electronics, picking up heat and carrying it away, which reduces or removes the need for air conditioning of that equipment.

There are two main kinds of immersion cooling: single-phase and two-phase. In single-phase immersion cooling, the dielectric fluid remains liquid as it circulates through the system. In two-phase immersion cooling, the liquid boils into a gas when it touches hot surfaces and then condenses back into a liquid at a heat exchanger. Both methods offer substantial energy savings by cutting reliance on traditional cooling equipment such as air conditioners and fans.

One notable merit of this technology is that it greatly improves thermal management efficiency, which lowers Power Usage Effectiveness (PUE), a ratio for which lower values are better. Moreover, because immersion cooling keeps operating temperatures very stable, it can increase the reliability and lifespan of IT equipment. Its ability to handle very high heat loads makes it an important step toward preparing data centers for growing computing needs.
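For readers less familiar with PUE, it is defined as total facility energy divided by the energy delivered to IT equipment, so a value of 1.0 would mean every watt goes to computing. The sketch below computes it from two power readings; the function name and the sample figures are hypothetical, and a real assessment would use energy measured over a longer period rather than a single snapshot.

```python
def pue(total_facility_kw, it_equipment_kw):
    """Power Usage Effectiveness: total facility power divided by IT power."""
    if it_equipment_kw <= 0:
        raise ValueError("it_equipment_kw must be positive")
    if total_facility_kw < it_equipment_kw:
        raise ValueError("total facility power cannot be less than IT power")
    return total_facility_kw / it_equipment_kw


if __name__ == "__main__":
    # Hypothetical snapshot readings for the same 1000 kW IT load.
    print(f"before: PUE = {pue(1500.0, 1000.0):.2f}")  # 500 kW of overhead
    print(f"after:  PUE = {pue(1100.0, 1000.0):.2f}")  # 100 kW of overhead
```

Since cooling is usually a large part of the non-IT overhead, reducing cooling power is one of the most direct ways to move this ratio toward 1.0.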

Free cooling methods are one way to minimize energy use in data centers. Air-side economizers, water-side economizers and geothermal cooling are among the most efficient of these techniques.

Each type can be adapted to specific geographical features and climate conditions, making these techniques flexible options for modern data centers concerned about energy efficiency.
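How much free cooling a site can expect depends largely on how often outdoor conditions are cool enough. The following sketch classifies outdoor temperature readings into cooling modes and counts the hours in each. The setpoint, the approach margin, the partial band, and the sample temperatures are all hypothetical, and the logic deliberately ignores humidity and air quality, which a real economizer control would also check.

```python
from collections import Counter


def cooling_mode(outdoor_c, supply_setpoint_c=24.0, approach_c=3.0, partial_band_c=8.0):
    """Pick a cooling mode from the outdoor dry-bulb temperature alone.

    All thresholds are illustrative assumptions, not recommended values.
    """
    full_free_limit_c = supply_setpoint_c - approach_c
    if outdoor_c <= full_free_limit_c:
        return "free"        # economizer alone can meet the setpoint
    if outdoor_c <= full_free_limit_c + partial_band_c:
        return "partial"     # economizer assists mechanical cooling
    return "mechanical"      # too warm outside; compressors carry the load


def hours_by_mode(hourly_outdoor_c):
    """Count how many hourly readings fall into each cooling mode."""
    return Counter(cooling_mode(t) for t in hourly_outdoor_c)


if __name__ == "__main__":
    sample_day_c = [12, 11, 10, 10, 11, 13, 16, 19, 22, 25, 27, 29,
                    31, 32, 31, 29, 27, 24, 21, 18, 16, 14, 13, 12]
    print(dict(hours_by_mode(sample_day_c)))
```

Running the same count over a full year of local weather data gives a rough first estimate of how much of the year an economizer could offset mechanical cooling at a given site.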

Evaporative cooling solutions are based on the principle of using water evaporation to remove heat from the air. These systems work by passing hot air through pads that are saturated with water; as the water evaporates, it absorbs heat from the air, which is cooled before being delivered into the data center. Unlike traditional ACs, which rely heavily on compressors and refrigerants, evaporative cooling is much more energy-efficient and eco-friendly. It works best in hot, dry climates, where low humidity allows the greatest cooling effect. When properly applied, this technique can save power and operating costs while still maintaining ideal working conditions for data center equipment.
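The dependence on humidity can be shown with the usual effectiveness model for a direct evaporative cooler, in which the supply air approaches the wet-bulb temperature: T_supply = T_db − ε · (T_db − T_wb). The sketch below applies that formula; the 0.85 pad effectiveness and the two example climates are assumptions for illustration only.

```python
def evaporative_supply_temp_c(dry_bulb_c, wet_bulb_c, effectiveness=0.85):
    """Estimate supply air temperature from a direct evaporative cooler.

    effectiveness is the pad's saturation effectiveness (0 to 1); the
    default is an assumed, illustrative value.
    """
    if not 0.0 <= effectiveness <= 1.0:
        raise ValueError("effectiveness must be between 0 and 1")
    if wet_bulb_c > dry_bulb_c:
        raise ValueError("wet-bulb temperature cannot exceed dry-bulb temperature")
    return dry_bulb_c - effectiveness * (dry_bulb_c - wet_bulb_c)


if __name__ == "__main__":
    # Same 35 C outdoor air, hypothetical dry and humid conditions.
    dry_climate = evaporative_supply_temp_c(35.0, 20.0)
    humid_climate = evaporative_supply_temp_c(35.0, 30.0)
    print(f"dry climate:   supply about {dry_climate:.2f} C")
    print(f"humid climate: supply about {humid_climate:.2f} C")
```

With the same outdoor temperature, the dry-climate case cools the air by well over ten degrees while the humid case manages only a few, which matches the point above about where this technique works best.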

A: Chilled water systems, in-row cooling units, and computer room air conditioning (CRAC) units are some of the main cooling solutions for modern data centers. These systems help cool servers and other equipment by keeping them at the right temperature and humidity levels.

A: Chilled water systems use cold water to absorb heat from the data center environment. A central plant chills the water, which is then carried through pipes to cooling units located within the facility. The method is widely regarded as effective and energy efficient, and it can cool large numbers of densely populated server racks.

A: In-row cooling refers to placing cooling units directly between server racks. Such a localized approach allows for more precise air conditioning, thus requiring less energy than traditional practices. Additionally, it helps operators deal with hot spots while increasing their overall energy efficiency.

A: Failure to keep temperature and humidity within the required limits can lead to overheating or condensation, either of which can damage delicate server hardware. Today's digital infrastructure depends on efficient cooling, and that can only be achieved if both conditions are kept under control in the data center environment.

A: Mechanical cooling systems, including CRAC-based air conditioners, can save power by using smart controls that respond dynamically to real-time changes in heat load and environmental conditions. This ensures that only the minimum energy needed is used, without compromising the conditions a data center requires to run smoothly.

A: New technologies are transforming data center cooling. They offer more precise and effective cooling methods, which in turn lower power consumption and improve overall performance.

A: Cooling efficiency can be improved significantly by designing the data center with an efficient layout. Server racks should be arranged sensibly in relation to the cooling equipment, and airflow management must be taken into account. Hot/cold aisle containment, for example, can cut the amount of energy used for air conditioning and improve environmental control within a given space.

A: Data center providers have various options for reducing the energy consumed by cooling. Examples include using more energy-efficient cooling systems, optimizing airflow management (regulating airflow through the placement of racks and cooling equipment), implementing free cooling (using outside air), and integrating renewable energy sources to lower total consumption while improving sustainability.

A: Energy efficiency is crucial for any organization that wants to cut operating costs and limit its environmental impact. Data centers built without long-term efficiency in mind tend to face higher utility bills later on. Efficient cooling systems use less electrical power, which reduces utility spending while supporting the industry's green initiatives and sustainable growth.

A: To handle high-density server environments, operators employ advanced techniques such as liquid cooling and in-row cooling. They also improve airflow management by monitoring temperature and humidity in real time with sensors linked to a central controller, which collects the readings and adjusts cooling accordingly. As the number of servers grows, these methods help ensure that enough cooling is provided without wasting energy.
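As a rough illustration of the sensor-based monitoring described above, the sketch below takes rack inlet temperature readings collected by a central controller and flags racks that exceed a limit. The rack names, the readings, and the 27 °C default limit are hypothetical; an actual limit should come from the equipment specifications and the facility's operating policy.

```python
def find_hotspots(inlet_temps_c, limit_c=27.0):
    """Return the racks whose inlet temperature exceeds limit_c, hottest first.

    inlet_temps_c maps a rack identifier to its latest inlet reading in Celsius.
    """
    hot = [(rack, temp) for rack, temp in inlet_temps_c.items() if temp > limit_c]
    return sorted(hot, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical readings gathered by the central controller.
    readings = {"rack-A1": 22.5, "rack-A2": 28.1, "rack-B1": 24.0, "rack-B2": 31.4}
    for rack, temp in find_hotspots(readings):
        print(f"{rack}: {temp:.1f} C exceeds limit, increase cooling for this zone")
```

A production system would typically add humidity checks, smoothing over several readings, and a control action such as raising fan speed, but the core idea of comparing live readings against a threshold is the same.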