As large-scale model training and HPC workloads continue to grow in size and complexity, interconnect bandwidth and latency between GPUs and accelerators are increasingly becoming decisive factors in overall system performance. In the 400G era, 400G QSFP-DD AOC (Active Optical Cable) solutions, with their high bandwidth, low power consumption, and plug-and-play deployment, are emerging as an ideal choice for short-reach, high-speed interconnects in HPC and AI data center environments.

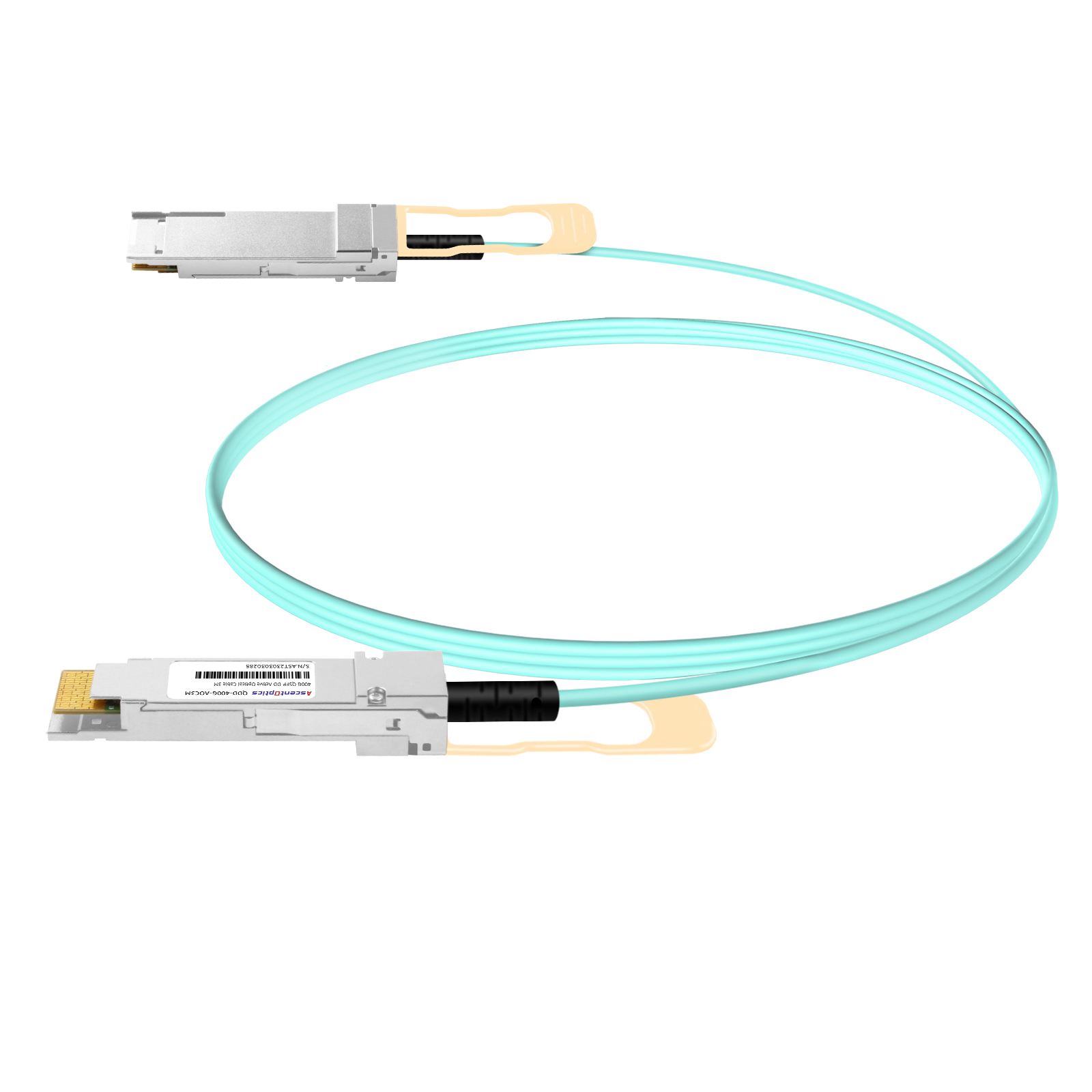

400G QSFP-DD AOC is an active optical cable that integrates optical transceivers and fiber into a single assembly. Based on the QSFP-DD (Quad Small Form-factor Pluggable Double Density) form factor, it supports 8×50G or 4×100G PAM4 lanes, delivering an aggregate bandwidth of up to 400 Gbps. It is purpose-built for short-reach 400 Gbps interconnects in data centers, cloud computing, and high-performance computing (HPC) environments.

1. Plug-and-play deployment with no on-site fiber termination required

2. Factory-terminated design that simplifies installation and maintenance

3. Low power consumption and low latency, ideal for high-density deployments

4. Typical transmission distance of 1–30 meters (up to 50 meters for certain solutions)
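The lane arithmetic behind the 400 Gbps figure can be checked with a short sketch. It uses only the two lane configurations named above and the fact that PAM4 carries 2 bits per symbol. The function names are illustrative, and the rates are nominal payload rates that ignore FEC and encoding overhead.

```python
# Nominal lane arithmetic for a 400G link (illustrative helper names).
PAM4_BITS_PER_SYMBOL = 2  # PAM4 uses four amplitude levels, i.e. 2 bits per symbol


def aggregate_gbps(lanes: int, gbps_per_lane: float) -> float:
    """Nominal aggregate rate: lane count times per-lane rate."""
    return lanes * gbps_per_lane


def pam4_symbol_rate_gbd(gbps_per_lane: float) -> float:
    """Nominal symbol rate in GBd for a PAM4 lane (overhead ignored)."""
    return gbps_per_lane / PAM4_BITS_PER_SYMBOL


# Lane configurations named in the text: (lanes, nominal Gbps per lane)
configs = {"8x50G PAM4": (8, 50.0), "4x100G PAM4": (4, 100.0)}

for name, (lanes, rate) in configs.items():
    total = aggregate_gbps(lanes, rate)
    baud = pam4_symbol_rate_gbd(rate)
    print(f"{name}: {total:.0f} Gbps aggregate, {baud:.0f} GBd per lane (nominal)")
```

Both configurations reach the same aggregate rate; the 4×100G option halves the lane count by doubling the per-lane symbol rate.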

HPC systems are extremely sensitive to inter-node communication performance. Compared with traditional DAC cables, AOC solutions maintain signal integrity over longer distances, which helps keep bit error rates low. This makes 400G AOC well suited for the communication-intensive workloads built on MPI and RDMA that are common in HPC.
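To see why bit error rate matters at this speed, the sketch below converts a BER into an expected number of raw bit errors per second on a fully loaded link. The BER values are purely illustrative and are not measurements of any DAC or AOC product; the calculation also ignores forward error correction, which corrects most raw errors in practice.

```python
# Expected raw bit errors on a saturated link (illustrative values only).

def expected_bit_errors_per_second(ber: float, line_rate_gbps: float) -> float:
    """Expected errors per second = BER x bits transmitted per second."""
    return ber * line_rate_gbps * 1e9


def mean_seconds_between_errors(ber: float, line_rate_gbps: float) -> float:
    """Average time between raw bit errors at the given BER and line rate."""
    return 1.0 / expected_bit_errors_per_second(ber, line_rate_gbps)


LINE_RATE_GBPS = 400.0

# Hypothetical BER values chosen only to show the scaling, not vendor data.
for ber in (1e-12, 1e-15):
    errors = expected_bit_errors_per_second(ber, LINE_RATE_GBPS)
    gap = mean_seconds_between_errors(ber, LINE_RATE_GBPS)
    print(f"BER {ber:.0e}: {errors:.4g} errors/s, one error every {gap:.4g} s")
```

Because the error count scales linearly with line rate, a BER that was tolerable at lower speeds produces proportionally more errors per second at 400 Gbps.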

In modern HPC clusters, compute nodes are typically deployed in high-density configurations, with dozens of servers integrated within a single rack and interconnected via Top-of-Rack (ToR) or End-of-Row (EoR) switches.

Traditional DAC cables tend to suffer from signal integrity limitations and cable stiffness when distances exceed 2–3 meters, while optical transceiver plus fiber solutions increase deployment complexity and overall cost. 400G AOC effectively covers this short-to-medium distance range while avoiding complicated fiber patching and management, making it particularly valuable for HPC environments that require rapid scaling and flexible expansion.

In supercomputing centers and research institutions, HPC clusters often consist of hundreds or even thousands of high-speed ports. Traditional deployments based on separate optical transceivers and fiber patch cords not only increase hardware costs but also add complexity to inventory management, maintenance, and troubleshooting.

By integrating optical transceivers and fiber into a single assembly, 400G AOC reduces the number of standalone transceivers and patch cords required, while simplifying cabling management and easing operational workloads.

From a long-term operational perspective, this streamlined architecture helps significantly reduce both CAPEX and OPEX for HPC systems, while improving overall network reliability.
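The inventory effect can be made concrete with a rough part count. This is a minimal sketch that assumes each link is a direct point-to-point connection: the discrete approach needs one transceiver per end plus one patch cord, while an AOC is a single assembly. Patch panels, spares, and pricing are deliberately left out, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PartsCount:
    """Number of separately stocked parts needed for a set of links."""
    transceivers: int
    patch_cords: int
    aoc_assemblies: int

    @property
    def total(self) -> int:
        return self.transceivers + self.patch_cords + self.aoc_assemblies


def parts_discrete(links: int) -> PartsCount:
    # Assumption: two transceivers (one per end) and one patch cord per link.
    return PartsCount(transceivers=2 * links, patch_cords=links, aoc_assemblies=0)


def parts_aoc(links: int) -> PartsCount:
    # An AOC integrates both ends and the fiber into one assembly.
    return PartsCount(transceivers=0, patch_cords=0, aoc_assemblies=links)


links = 1000  # illustrative cluster size
discrete, aoc = parts_discrete(links), parts_aoc(links)
print(f"Discrete optics: {discrete.total} parts, AOC: {aoc.total} parts")
```

Under these assumptions the AOC approach tracks one part per link instead of three, which is where the inventory and troubleshooting savings come from.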

As HPC networks continue to evolve toward 800G and even 1.6T, cluster architectures are moving toward higher bandwidth and greater port density. Because the QSFP-DD form factor is supported by mainstream switching platforms, 400G QSFP-DD AOC helps existing deployments retain continuity and compatibility during future network upgrades.

For organizations planning next-generation HPC platforms, 400G AOC represents not only a cost-effective solution for today’s requirements, but also a reliable, future-oriented deployment strategy.

In AI training clusters, thousands or even tens of thousands of GPUs must be interconnected through high-speed switching networks. 400G AOC is widely deployed in scenarios such as:

1. GPU servers ↔ Top-of-Rack (ToR) switches

2. Short-reach high-speed links within Spine–Leaf architectures

3. High-density interconnects inside AI Pods

Large-scale model training is highly sensitive to east–west traffic performance. 400G AOC provides stable, high-throughput short-reach connectivity, helping prevent GPU utilization degradation caused by insufficient link performance.

Compared with solutions based on pluggable optical transceivers and separate fiber cabling, AOC offers lower overall system power consumption, enabling AI data centers to achieve better PUE (Power Usage Effectiveness) in high-power-density environments.

400G AOC vs. DAC vs. Optical Transceiver Solutions

| Solution | Typical Reach | Cost | Power Consumption | Application Scenarios |
| --- | --- | --- | --- | --- |
| DAC | ≤2–3 m | Lowest | Lowest | Ultra-short reach within the same rack |
| AOC | 1–30 m | Medium | Low | Short-reach interconnects in HPC / AI environments |
| Optical Transceivers + Fiber | ≥100 m | Higher | Higher | Cross-row, cross-hall, or long-reach links |

In the “golden distance range” for AI and HPC deployments, roughly 3 to 30 meters, where DAC runs out of reach and discrete optics add unnecessary cost, 400G AOC often delivers the best balance of performance, cost, and power efficiency for short-reach high-speed interconnects.
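The reach figures in the comparison can be turned into a simple first-pass selection rule. This is a sketch under stated assumptions rather than a sizing tool: the 3 m DAC cutoff takes the upper end of the "2–3 m" figure, the 30 m AOC limit is the typical reach (some solutions are quoted up to 50 m), and anything longer falls through to discrete optics. The function name and parameters are hypothetical.

```python
def suggest_interconnect(
    distance_m: float,
    dac_max_m: float = 3.0,   # assumption: upper end of the "2-3 m" DAC range
    aoc_max_m: float = 30.0,  # typical AOC reach; some solutions go to 50 m
) -> str:
    """Suggest a cable type from link distance alone (cost and power ignored)."""
    if distance_m <= 0:
        raise ValueError("distance_m must be positive")
    if distance_m <= dac_max_m:
        return "DAC"
    if distance_m <= aoc_max_m:
        return "AOC"
    return "Optical Transceivers + Fiber"


# Example link lengths in meters (illustrative).
for d in (1.5, 10, 45, 150):
    print(f"{d:>6} m -> {suggest_interconnect(d)}")

# An extended-reach AOC can be modeled by raising the limit.
print(suggest_interconnect(45, aoc_max_m=50.0))
```

Distance is only the first filter; in practice cost, power budget, and cable routing within the rack or row also influence the choice.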

As HPC and AI data centers transition to 400G networks, 400G QSFP-DD AOC has emerged as a key pillar for short-reach high-speed interconnects, thanks to its high bandwidth, low latency, ease of deployment, and strong cost efficiency. For operators focused on maximizing compute efficiency and deployment flexibility, AOC is not merely a transitional solution, but a practical and long-term choice.