In today's rapidly changing technology landscape, businesses and data centers need robust, scalable server solutions to handle growing workloads and complex computing requirements. Ranging from 1U to 5U in height, rackmount servers combine strong performance, efficient use of space, and easier management. That is why they remain a leading choice for organizations that need reliable, high-performance infrastructure. This post summarizes the main types of rackmount servers and their characteristics. It also covers the benefits of each type and the use cases where it fits best. Whether you are upgrading existing systems or planning a new deployment, this overview should help you choose a server that fits your organization's requirements.

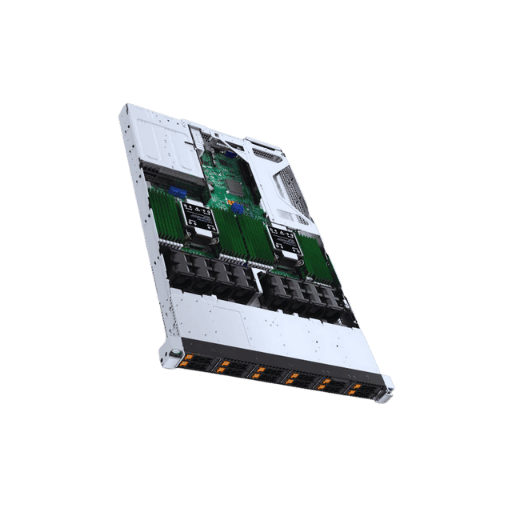

A rackmount server is a server built into a flat, rectangular chassis designed to mount in a standard 19-inch server rack. Its height is measured in rack units (U), where 1U equals 1.75 inches. The servers covered here range from 1U to 5U. Rackmount servers provide centralized processing power, storage, and network connectivity for applications and services in data centers and enterprise environments where space is at a premium. They are designed to maximize space efficiency, improve cooling, and simplify maintenance and upgrades.
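
Rack-unit arithmetic is easy to script when planning a layout. The following is a minimal Python sketch that assumes only the 1.75-inch definition of 1U. The function names are illustrative and not part of any library. The results are nominal heights; actual chassis are usually built slightly shorter than their nominal height so that adjacent units do not bind.

```python
"""Convert rack units (U) to physical height.

Assumption: 1U = 1.75 in, as stated above; results are nominal heights.
Function names are illustrative only.
"""

INCHES_PER_U = 1.75   # height of one rack unit in inches
MM_PER_INCH = 25.4    # exact inch-to-millimetre factor


def rack_units_to_inches(units: int) -> float:
    """Nominal height in inches of a chassis occupying `units` rack units."""
    if units < 1:
        raise ValueError("a chassis occupies at least 1U")
    return units * INCHES_PER_U


def rack_units_to_mm(units: int) -> float:
    """Nominal height in millimetres."""
    return rack_units_to_inches(units) * MM_PER_INCH


if __name__ == "__main__":
    # Print the 1U to 5U sizes discussed in this post.
    for u in range(1, 6):
        print(f"{u}U = {rack_units_to_inches(u):.2f} in = {rack_units_to_mm(u):.2f} mm")
```

For example, a 2U server works out to 3.50 inches (88.90 mm) tall.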

Rackmount servers offer several benefits in data centers. First, they save space. Stacking servers vertically fits more machines into each cabinet than tower form factors do, so capacity can grow upward within the rack rather than outward across limited floor area. Second, they include cooling systems designed for the rack environment, which help prevent the overheating that can lead to system failures. Third, their modular design makes them easy to maintain. Many models, including Supermicro systems, allow parts to be replaced during operating hours, which significantly reduces downtime and improves operational efficiency. Fourth, they deliver the performance and reliability needed for heavy-duty, compute-intensive applications. Together, these factors make rackmount servers a good building block for scalable data center infrastructure, including storage-heavy deployments built on larger 4U servers.

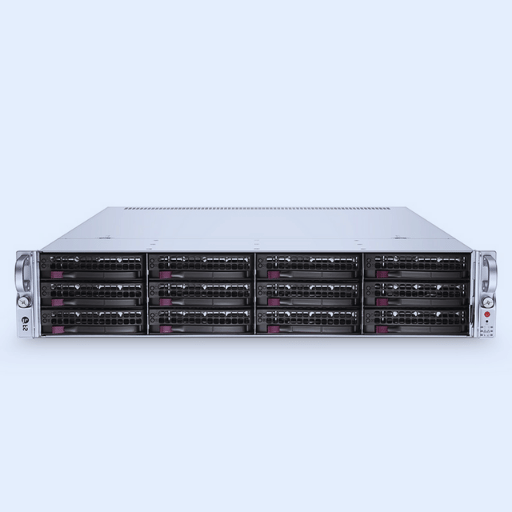

When it comes to rackmount configurations, common options are 1U, 2U, and 4U servers, which each serve different purposes based on performance requirements and space constraints.

The right rackmount configuration depends on your specific requirements for processing power and storage capacity, as well as the physical space available in the data center.

1U rackmount servers are widely used in data centers for several reasons. Their compact height allows high density in a server rack, which helps data centers make the most of their floor space. They are also power-efficient, delivering solid performance while keeping operating costs down. They are flexible as well, because they can be configured for a range of workloads. Many models include redundant fans and power supplies to improve reliability and uptime. Finally, remote management tools make them easy to administer and monitor, which keeps day-to-day operations smooth and efficient.
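
The density advantage of 1U servers comes down to simple division. This hedged sketch makes two assumptions. The first is a 42U rack, which is a common size but not the only one. The second is a hypothetical allowance of 4U for switches and patch panels. The sketch ignores power and cooling limits, which in practice often cap density before rack space runs out.

```python
def servers_per_rack(rack_units: int, server_height_u: int, reserved_u: int = 0) -> int:
    """Number of servers of a given height that fit in a rack.

    reserved_u is space set aside for networking gear, patch panels, etc.
    (an assumed allowance; real layouts vary).
    """
    if server_height_u < 1 or reserved_u < 0 or reserved_u > rack_units:
        raise ValueError("invalid rack or server dimensions")
    usable_u = rack_units - reserved_u
    return usable_u // server_height_u  # whole servers only


if __name__ == "__main__":
    # Assumption: a 42U rack with 4U reserved for top-of-rack networking.
    for height in (1, 2, 4):
        count = servers_per_rack(42, height, reserved_u=4)
        print(f"{height}U servers per rack: {count}")
```

Under these assumptions, the rack holds 38 1U servers, 19 2U servers, or 9 4U servers.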

When comparing the performance of a 1U server to that of a 2U server, there are several important considerations.

In short, 2U servers offer more performance headroom and more flexibility for storage and cooling. The cost is one extra rack unit compared with a 1U server. When choosing between them, weigh the specific requirements of your workloads and environment against what each form factor can deliver.

Using Intel Xeon processors in your servers can substantially improve performance, scalability, and reliability. Xeon processors are designed for heavy workloads and provide high compute capacity through many cores and threads. They support technologies such as Hyper-Threading and Turbo Boost, which increase throughput and clock speed when the workload allows. They also include hardware security features, such as hardware-accelerated encryption and secure boot support, that help protect sensitive data and system integrity. Finally, they are compatible with a wide range of server configurations and virtualized environments, which makes them suitable for most modern server applications.

Intel Xeon processors speed up workloads through their architecture and integrated features. Multiple cores per chip let different parts of a workload run in parallel, which improves efficiency on complex tasks. Hyper-Threading runs two hardware threads on each physical core, so heavily threaded applications can make better use of each core. When extra speed is needed, Turbo Boost dynamically raises clock frequencies above the base frequency, as long as the processor stays within its power and thermal limits. This shortens time to completion but increases power draw while it is active. Xeon processors also have larger caches, which reduce memory access latency. They support larger memory capacities as well, which helps with large datasets. Together, these features make them a good fit when large amounts of data must be processed quickly and reliably under heavy load.
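
The effect of Hyper-Threading on the number of logical processors the operating system sees is simple multiplication. The sketch below assumes two hardware threads per core when Hyper-Threading is enabled. It uses a hypothetical dual-socket server with 16 cores per socket. `os.cpu_count()` is the standard-library call that reports the logical CPUs on the machine running the script.

```python
import os


def logical_processors(sockets: int, cores_per_socket: int, threads_per_core: int = 2) -> int:
    """Logical CPUs the OS sees: sockets x physical cores x hardware threads per core.

    threads_per_core is assumed to be 2 with Hyper-Threading enabled, 1 with it disabled.
    """
    return sockets * cores_per_socket * threads_per_core


if __name__ == "__main__":
    # Hypothetical dual-socket server with 16 cores per socket.
    print("with Hyper-Threading:", logical_processors(2, 16))
    print("without Hyper-Threading:", logical_processors(2, 16, threads_per_core=1))
    # On a real host, the standard library reports the logical CPU count directly.
    print("this machine:", os.cpu_count())
```

The two threads on a core share that core's execution resources, so doubling the logical CPU count does not double throughput.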

Comparing Intel and AMD CPUs for rack servers shows that each has strengths suited to different applications. The Intel Xeon family has a long track record in servers. It combines strong performance with security features that are supported across a wide range of server configurations. Features such as Hyper-Threading and Turbo Boost are designed to improve computational efficiency, especially when multitasking or running resource-intensive applications.

AMD EPYC processors, on the other hand, stand out for their high core counts and competitive pricing. This makes them attractive even for smaller businesses deploying servers for heavily virtualized environments and parallel processing workloads. These workloads also tend to need large memory capacity and plenty of I/O bandwidth. The EPYC architecture provides both, which helps sustain performance in data-intensive and high-performance computing tasks.

In short, weigh your workload requirements before choosing Intel or AMD CPUs for a rack server deployment, because each brings something different. Intel has traditionally been strong in single-threaded performance and offers integrated security features. That suits workloads that depend on per-core speed. AMD typically offers more cores per socket at a lower cost per core. That makes it a strong option for heavily threaded applications that need many cores to reach maximum throughput.
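
Cost per core is one concrete way to compare value. The prices and core counts below are hypothetical placeholders, not real SKUs or list prices, so substitute actual quotes. The comparison also ignores single-threaded speed and per-core software licensing, and either of these can change the answer.

```python
from dataclasses import dataclass


@dataclass
class CpuOption:
    name: str         # hypothetical label, not a real processor model
    price_usd: float  # assumed price per processor
    cores: int        # physical cores per processor

    @property
    def cost_per_core(self) -> float:
        return self.price_usd / self.cores


def cheapest_per_core(options: list[CpuOption]) -> CpuOption:
    """Return the option with the lowest price per physical core."""
    return min(options, key=lambda o: o.cost_per_core)


if __name__ == "__main__":
    options = [
        CpuOption("cpu-a (hypothetical)", price_usd=3000, cores=24),
        CpuOption("cpu-b (hypothetical)", price_usd=4800, cores=64),
    ]
    for o in options:
        print(f"{o.name}: ${o.cost_per_core:.2f} per core")
    print("lowest cost per core:", cheapest_per_core(options).name)
```

With these made-up figures, the higher-priced, higher-core-count part still works out cheaper per core: $75.00 versus $125.00.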

The components mentioned above work together to provide the reliability, scalability, and performance of servers built on technologies from vendors such as Dell and Supermicro.

Together, these best practices help ensure that GPU configurations in rackmount servers are set up correctly. This improves efficiency and extends hardware lifespan.

In high-performance computing (HPC), the NVIDIA A100 GPU is one of the most powerful accelerators available, and it can be found in Dell, Supermicro, and many other systems. The A100 is built on NVIDIA's Ampere architecture. It has 6,912 CUDA cores and 432 Tensor Cores and delivers up to 312 TFLOPS of FP16 Tensor Core performance. This makes it well suited to artificial intelligence and machine learning workloads. It also suits data analytics and scientific simulations that require heavy number crunching. Its Multi-Instance GPU (MIG) technology lets users partition a single A100 into as many as seven isolated instances. Each instance has its own dedicated compute, cache, and memory resources, so several workloads can share one GPU without interfering with each other. The A100 also offers high memory bandwidth from its 40 GB of HBM2 memory. This keeps data flowing efficiently for applications with heavy input/output demands. Integration into rackmount servers is what lets the A100 scale in HPC environments. Multiple GPU servers can be combined into large clusters that are more powerful and more energy-efficient and that can be deployed relatively quickly.
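
MIG partitioning can be reasoned about as a budget of compute and memory slices. The sketch below is a deliberate simplification. The profile names and sizes follow NVIDIA's published MIG profiles for the 40 GB A100. However, the check only adds up compute slices (7 in total) and memory (40 GB in total). It does not model the placement rules the driver actually enforces, so a combination that passes here can still be rejected on real hardware. The function and constant names are hypothetical.

```python
# Simplified model of MIG profiles on a 40 GB A100.
# Each profile maps to (compute slices, memory in GB).
# Assumption: only totals are checked; NVIDIA's placement rules are NOT modelled.
A100_40GB_PROFILES = {
    "1g.5gb": (1, 5),
    "2g.10gb": (2, 10),
    "3g.20gb": (3, 20),
    "4g.20gb": (4, 20),
    "7g.40gb": (7, 40),
}
TOTAL_COMPUTE_SLICES = 7
TOTAL_MEMORY_GB = 40


def fits_budget(requested: list[str]) -> bool:
    """True if the requested instances stay within the GPU's total compute and memory.

    Raises KeyError for unknown profile names.
    """
    compute = sum(A100_40GB_PROFILES[p][0] for p in requested)
    memory = sum(A100_40GB_PROFILES[p][1] for p in requested)
    return compute <= TOTAL_COMPUTE_SLICES and memory <= TOTAL_MEMORY_GB


if __name__ == "__main__":
    print(fits_budget(["1g.5gb"] * 7))          # seven small instances
    print(fits_budget(["1g.5gb"] * 8))          # one instance too many
    print(fits_budget(["4g.20gb", "3g.20gb"]))  # two larger instances
```

Seven `1g.5gb` instances match the seven-way split described above. An eighth instance exceeds the compute budget.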

Commonly, rackmount server chassis come in different sizes depending on their unit (U) measurement, where 1U is equivalent to 1.75 inches tall.

Within the 1U to 5U range covered here, 5U is the largest size and offers the most room for expansion. A 5U chassis can hold multiple CPUs alongside many GPUs or storage drives in a single system. This makes it well suited to high-density computing environments that need large configurations, such as multi-CPU systems with many graphics cards or storage devices.

A short-depth chassis is designed for places with limited space, such as telecommunications cabinets, vehicles, or shallow racks. Such a chassis typically has a depth ranging from under 12 inches to around 20 inches. This provides a compact solution without much performance compromise. It is easy to install and maintain in tight spaces while still supporting the motherboards, storage drives, and cooling systems needed for operation. Its light weight and small dimensions also make it well suited to edge computing and remote deployments that need reliability and adaptability in physically constrained environments.
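
Whether a short-depth chassis fits a given cabinet comes down to a quick depth check. In this sketch, `usable_depth_in` is the cabinet's mounting depth. `rear_clearance_in` is a hypothetical allowance for cables and airflow, with a default of 3 inches. Neither is a standard value, so use the measurements from your own hardware documentation.

```python
def fits_cabinet(chassis_depth_in: float,
                 usable_depth_in: float,
                 rear_clearance_in: float = 3.0) -> bool:
    """True if chassis depth plus rear clearance fits the cabinet's usable depth.

    rear_clearance_in is an assumed allowance for cabling and airflow.
    """
    if min(chassis_depth_in, usable_depth_in, rear_clearance_in) < 0:
        raise ValueError("depths must be non-negative")
    return chassis_depth_in + rear_clearance_in <= usable_depth_in


if __name__ == "__main__":
    # Hypothetical 20-inch-deep telecom cabinet.
    for depth in (12.0, 17.0, 20.0):
        print(f"{depth:.0f}-inch chassis fits: {fits_cabinet(depth, 20.0)}")
```

In this example, the 12-inch and 17-inch chassis fit. The 20-inch chassis does not, because it leaves no room for the assumed rear clearance.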

High-density rackmount solutions are important for data center efficiency because they increase computing power within a limited physical space. Hosting more servers in a smaller area reduces the need for large floor space and lowers real estate costs. Consolidating power and cooling resources also saves energy, which is essential for controlling operating expenses. High-density configurations improve scalability as well. Data centers can expand capacity without major infrastructure changes, particularly with 4U and larger servers. This approach meets the growing data needs of modern businesses and helps enterprises get the most performance and resource efficiency out of their data center environments.
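
Packing more servers into a rack concentrates power and heat, so density planning usually starts from a per-rack power budget. The wattage and budget figures below are hypothetical. Real values come from measured server draw and the facility's power and cooling design.

```python
def rack_power_kw(servers: int, watts_per_server: float) -> float:
    """Total IT load of a rack in kilowatts."""
    return servers * watts_per_server / 1000


def max_servers_for_budget(budget_kw: float, watts_per_server: float) -> int:
    """Largest number of servers whose combined draw stays within the rack's power budget."""
    if watts_per_server <= 0:
        raise ValueError("watts_per_server must be positive")
    return int(budget_kw * 1000 // watts_per_server)


if __name__ == "__main__":
    # Assumptions: 38 x 1U servers at 400 W each, and a 10 kW per-rack budget.
    print(f"full rack load: {rack_power_kw(38, 400):.1f} kW")
    print(f"servers allowed by budget: {max_servers_for_budget(10, 400)}")
```

In this hypothetical case, a full rack would draw 15.2 kW. The power budget, rather than rack space, limits the rack to 25 servers.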

Balancing performance and efficiency in a data center involves several strategies. First, choose energy-efficient hardware; current models include power management features that maximize output while minimizing power draw. Second, use virtualization to improve resource allocation and reduce the number of physical machines needed. Third, use effective cooling strategies such as hot aisle or cold aisle containment to keep temperatures at optimal levels. This protects equipment and significantly reduces energy consumption. Fourth, use management software to continuously monitor key metrics across the data center, which helps identify inefficiencies and the changes needed to correct them. Finally, adopt modular design principles, which allow the facility to scale and adapt quickly to new demands without extensive reconfiguration.
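
Power Usage Effectiveness (PUE) is one widely used metric for this kind of monitoring. The Green Grid introduced it and defines it as total facility energy divided by the energy delivered to IT equipment. A value of 1.0 would mean zero overhead. The meter readings in this sketch are hypothetical, and the function names are illustrative.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("facility total cannot be below the IT load it includes")
    return total_facility_kwh / it_equipment_kwh


def overhead_fraction(pue_value: float) -> float:
    """Share of total facility energy spent on cooling, power conversion, lighting, etc."""
    return 1 - 1 / pue_value


if __name__ == "__main__":
    # Hypothetical monthly meter readings.
    value = pue(total_facility_kwh=150_000, it_equipment_kwh=100_000)
    print(f"PUE = {value:.2f}, overhead = {overhead_fraction(value):.0%}")
```

Here a PUE of 1.50 means about a third of the facility's energy goes to something other than the servers themselves. Tracking this ratio over time shows whether cooling changes are paying off.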

A 1U rackmount server is designed to fit in an industry-standard 19-inch rack and occupies 1U of vertical space, which is 1.75 inches (44.45 mm). These servers are often configured with SSDs (solid-state drives) connected over SATA (Serial ATA). Their compact size and efficient use of space make them well suited to high-density data center environments.

A: A 4U rackmount server provides more room for additional components such as multiple hard drives, GPUs, or advanced cooling systems. It is ideal for applications that require high performance and scalability, such as HPC and data storage. Vendors including Dell and Supermicro offer such servers.

A: A short-depth server has a shallower chassis than a standard server. This makes it well suited to locations with limited space, such as telecom cabinets or small data centers. Despite their small size, these servers still deliver good performance and flexibility.

A: Dual-processor servers have two CPUs, which provide more cores, memory capacity, and I/O for demanding applications. This configuration works well for heavy-duty processing, hosting virtualized environments, running large databases, and similar workloads where resources must be allocated across many tasks.

A: A 2U rackmount server occupies two units of vertical rack space (3.5 inches), while a 3U server occupies three (5.25 inches). 3U models typically offer more drive bays, additional PCIe slots, and better cooling, which makes them suitable for more complex enterprise needs.

The choice between 2.5-inch and 3.5-inch drive bays depends on your storage requirements, and SATA is a common interface for both sizes. 2.5-inch bays tend to have the speed advantage because they are often populated with solid-state drives, which transfer data much faster than hard disks. 3.5-inch bays typically hold high-capacity hard disk drives (HDDs). The right choice balances the performance your business applications demand against the storage capacity you need.
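
Multiplying bay count by drive size gives a quick view of the capacity side of this trade-off. The chassis layouts and drive capacities below are hypothetical examples, and the class name is illustrative. The RAID 6 figure simply subtracts two drives' worth of capacity for parity. It ignores filesystem overhead and hot spares.

```python
from dataclasses import dataclass


@dataclass
class BayLayout:
    label: str       # hypothetical chassis description
    bays: int        # number of drive bays populated
    drive_tb: float  # assumed capacity per drive, in TB

    def raw_tb(self) -> float:
        return self.bays * self.drive_tb

    def raid6_usable_tb(self) -> float:
        # RAID 6 uses two drives' worth of capacity for parity.
        if self.bays < 4:
            raise ValueError("RAID 6 needs at least 4 drives")
        return (self.bays - 2) * self.drive_tb


if __name__ == "__main__":
    layouts = [
        BayLayout("24 x 2.5-inch SSD", bays=24, drive_tb=3.84),
        BayLayout("12 x 3.5-inch HDD", bays=12, drive_tb=16.0),
    ]
    for layout in layouts:
        print(f"{layout.label}: raw {layout.raw_tb():.2f} TB, "
              f"RAID 6 {layout.raid6_usable_tb():.2f} TB")
```

With these assumed drives, the 3.5-inch layout offers roughly twice the raw capacity. The 2.5-inch SSD layout trades that capacity for much faster access.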

A: Data center servers should have powerful processors such as Intel® Xeon® or AMD EPYC and large memory capacity, for example DDR4 RAM. They should also have multiple LAN ports for high-speed networking with other devices on the network. Hot-swap drive bays make it possible to replace failed drives without shutting down the server, and scalability allows for future expansion.

A: Multi-GPU servers increase computing and graphics performance by using several graphics processing units at the same time. This setup is valuable for compute- or graphics-heavy work such as high-performance computing and complex simulations, where results are needed faster. It also helps with virtual desktop infrastructure (VDI), where users expect responsive systems.

A: Supermicro designs its servers with reliability as a top priority. It combines robust components with technologies that keep performance consistent in mission-critical deployments, including demanding operating environments. Features that improve dependability include hot-swap components that can be replaced without powering down, redundant power supplies, and advanced cooling solutions.

A: Easy access to server components, for example through tool-less designs or hot-swap bays, allows quick maintenance and upgrades. This minimizes downtime and keeps systems running in critical, high-demand scenarios.