A data-driven world requires modern enterprises to take a digital-first approach to managing and processing data. The data center is the focal point of this ecosystem: it is the infrastructure foundation from which businesses operate in a hyper-connected environment. Switches form the communication backbone of these data centers, enabling scalability and continuous data flow. This article covers the growing importance of data center switches in contemporary network architecture, discussing the performance and resource virtualization advantages they provide, their role in cloud computing, and the evolving demands of modern, high-performance data networks. Their strategic value and range of functions make data center switches a core concern for businesses and IT specialists alike.

Data center switches, as the name suggests, are located in data centers. This type of switch connects arrays of servers and storage units and manages the flow of traffic among them. Its primary purpose is to ensure that information is transmitted accurately, swiftly, and efficiently across the network infrastructure. Data center switches matter because they handle massive traffic volumes while keeping delay to a minimum, which is fundamental to modern applications like cloud storage, virtualization, and big data analysis. They also ensure that network resources are used optimally in data centers where demand is high because numerous operations are processed simultaneously.

Data center networks are built from several vital components that enable secure and effective communication throughout the entire system. These include switches, routers, servers, and storage systems, all interconnected to support data transfer and resource distribution. Switches handle internal data movement, while routers connect to external systems. Well-designed architectures such as spine-leaf topologies deliver low latency and scalability at the same time. These networks are modular and flexible to build out, and they can be secured with firewalls and encryption, which makes them crucial for protecting sensitive data in contemporary IT infrastructure.

Enterprise switches are vital in modern IT systems because they provide a broad set of functions that support network performance. Notable aspects are:

High Port Density

Enterprise switches cater to large networks with many devices by providing dozens to hundreds of ports. High port density allows many devices to be connected efficiently, as in large data centers and campus networks. Modular switches, for instance, can be expanded with additional line cards to suit the varying needs of enterprises.

Advanced Layer 2 and Layer 3 Capabilities

These switches provide robust Layer 2 switching for basic connectivity as well as Layer 3 routing, which enables inter-VLAN communication. Virtual LANs (VLANs), static routing, and dynamic routing protocols like OSPF and BGP offer operational scalability, while intelligent traffic distribution optimizes resource utilization.

High Throughput and Low Latency

Enterprise switches cater to applications that require high bandwidth by providing high throughput rates, frequently surpassing 100 Gbps in newer models. Multi-Gigabit Ethernet and 10GbE, 25GbE, 40GbE, and 100GbE interfaces reduce network bottlenecks and lower latency, which is vital for real-time applications.

Redundancy and Resiliency Features

To ensure continuous operations, enterprise-grade switches come with Link Aggregation Control Protocol (LACP), Multi-Chassis Link Aggregation (MLAG), and dual power supply features. These redundancies in network resources mitigate downtime during network outages or equipment malfunctions, which increases system trustworthiness.
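Link aggregation groups usually spread traffic by hashing packet header fields, so that all packets of one flow stay on the same member link. The following is a minimal sketch of that idea, assuming a CRC32 hash over a five-tuple; real switches use vendor-specific hash functions and fields, and the interface names and `pick_member_link` helper are hypothetical.

```python
import zlib

def pick_member_link(flow, links):
    """Map a flow five-tuple to one member link of an aggregated group."""
    key = "|".join(str(field) for field in flow).encode()
    return links[zlib.crc32(key) % len(links)]

# Hypothetical member links and flow (src IP, dst IP, protocol, src port, dst port)
links = ["eth1", "eth2", "eth3", "eth4"]
flow = ("10.0.0.5", "10.0.1.9", 6, 49152, 443)

chosen = pick_member_link(flow, links)

# If the chosen link fails, the flow is rehashed onto the surviving links.
survivors = [link for link in links if link != chosen]
fallback = pick_member_link(flow, survivors)
```

Because the hash is deterministic, packets of the same flow are not reordered across links, while different flows spread over the group.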

Comprehensive Security Features

Access Control Lists (ACLs), 802.1X authentication, DHCP snooping, and MACsec encryption are built in to mitigate internal and external threats and strengthen network security. Furthermore, real-time threat detection and response enable proactive security.
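ACLs are typically evaluated top to bottom, with the first matching rule deciding the outcome and an implicit deny at the end. The sketch below illustrates that first-match behavior under simplified assumptions: rules match only on source network and destination port, and the rule set itself is hypothetical.

```python
import ipaddress

# Hypothetical rules: (action, source network, destination port or None for any port)
RULES = [
    ("permit", ipaddress.ip_network("10.10.0.0/16"), 443),
    ("deny", ipaddress.ip_network("10.10.0.0/16"), None),
    ("permit", ipaddress.ip_network("0.0.0.0/0"), 80),
]

def evaluate(src_ip, dst_port, rules=RULES):
    """Return the action of the first rule that matches the packet."""
    src = ipaddress.ip_address(src_ip)
    for action, network, port in rules:
        if src in network and (port is None or port == dst_port):
            return action
    return "deny"  # implicit deny when no rule matches
```

Rule order matters here: a host in 10.10.0.0/16 may reach port 443, but its traffic to port 80 is denied by the second rule before the third rule is ever considered.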

Support for SDN and Automation

Software Defined Networking (SDN) is usually incorporated into modern switches at the enterprise level, allowing for central control and automation of the network. Complex network management tasks become easier with features like programmability through standardized APIs such as REST or NETCONF, as well as open frameworks like OpenFlow.

Quality of Service (QoS)

QoS features prioritize traffic for critical applications like voice and video conferencing. Congestion-avoidance mechanisms such as Weighted Random Early Detection (WRED), together with traffic shaping policies, help keep performance consistent across the network.
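WRED is commonly described as a drop probability that rises linearly between a minimum and a maximum queue threshold, with different thresholds per traffic class. The sketch below shows that textbook curve; the class names and threshold values are assumptions chosen for illustration, not settings from any particular switch.

```python
def wred_drop_probability(avg_queue, min_th, max_th, max_p):
    """Textbook WRED curve: no drops below min_th, all drops at or above max_th."""
    if avg_queue < min_th:
        return 0.0
    if avg_queue >= max_th:
        return 1.0
    return max_p * (avg_queue - min_th) / (max_th - min_th)

# Hypothetical per-class profiles: (min threshold, max threshold, max drop probability)
PROFILES = {
    "voice": (40, 60, 0.02),  # dropped late and rarely
    "bulk": (20, 40, 0.10),   # dropped earlier and more aggressively
}

def drop_probability_for(traffic_class, avg_queue):
    min_th, max_th, max_p = PROFILES[traffic_class]
    return wred_drop_probability(avg_queue, min_th, max_th, max_p)
```

At the same average queue depth, bulk traffic starts seeing early drops while voice traffic is still untouched, which is how the "weighted" part protects critical applications.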

Energy Efficiency

These switches lower operational costs by incorporating technologies like Energy Efficient Ethernet (EEE), which reduces power consumption during low-traffic periods and helps the company meet its sustainability goals.

Powerful Administrative Features

The enterprise-class switches offer multiple management features via CLI, web browser, and network management system (NMS) integration. Remote monitoring and troubleshooting are easily performed using cloud-based systems.

Enterprise switches are capable of meeting the comprehensive requirements imposed by contemporary networks, achieving unparalleled flexibility, security, and efficiency across all organizational structures.

In major data centers, switches are essential for managing traffic because the latency and performance of cloud networking infrastructure depend on them. They support the operation of virtualized environments and allow for dynamic resource allocation and load balancing. Modern data center switches also offer 25G, 40G, 100G, and even 400G Ethernet interfaces designed to keep up with the bandwidth needs of cloud platforms.

One of the most common cloud networking features in data center switches is support for software-defined networking (SDN). SDN gives operators centralized control over the overall structure of the network, which makes large data centers more efficient, flexible, and easier to scale. In addition, these switches typically offer high port density and intelligent traffic control, which keeps load balanced evenly across multiple servers.

Along these lines, to support edge computing and multi-cloud environments, data center switches support Virtual Extensible LAN (VXLAN) and overlay networking, which isolate virtual networks from one another on shared cloud infrastructure. Additionally, energy-efficient designs combined with predictive analytics further reduce costs and improve the environmental footprint.
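To make the VXLAN isolation concrete, the sketch below packs and parses the 8-byte VXLAN header defined in RFC 7348, in which a 24-bit VXLAN Network Identifier (VNI) tags each virtual network. The helper names are hypothetical, and the sketch covers only the header, not the outer UDP/IP encapsulation.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag from RFC 7348

def build_vxlan_header(vni):
    """Pack the 8-byte VXLAN header: flags, reserved bits, 24-bit VNI, reserved byte."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)

def parse_vni(header):
    """Extract the VNI from the first 8 bytes of a VXLAN header."""
    _, second_word = struct.unpack("!II", header[:8])
    return second_word >> 8

header = build_vxlan_header(5000)
```

A 24-bit VNI allows 16,777,216 distinct segments, compared with the 4,094 usable IDs of a traditional 12-bit VLAN tag, which is why overlays scale better in multi-tenant environments.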

Through granular controls like microsegmentation, encrypted data flows, and DDoS (Distributed Denial of Service) attack protection, data center switches further improve network security within a cloud environment, protecting sensitive data and applications from potential threats. The combination of high-speed hardware, SDN, and intelligent automation in data center switches eases the adoption of cloud networking within an organization’s infrastructure.

While determining the network topology of a data center, it is important to keep in mind aspects like scalability, performance, reliability, and security. The recent boom in data traffic due to cloud computing, IoT, and AI has lifted the requirements for switching platforms to support massive data throughput and low-latency communication. Supporting this, hyper-scale data centers require switches with over 12.8 Tbps capacity for seamless 400G Ethernet connectivity to contemporary workloads.
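The 12.8 Tbps figure maps directly onto port counts. As a rough sketch, assuming the quoted capacity counts each port's line rate once (vendors differ on whether they quote one direction or both), the number of ports a switch can serve at full rate is the capacity divided by the port speed.

```python
def max_ports(switch_capacity_gbps, port_speed_gbps):
    """Number of ports that can run at full line rate for a given switching capacity."""
    return switch_capacity_gbps // port_speed_gbps

ports_400g = max_ports(12_800, 400)  # 32 ports of 400G
ports_100g = max_ports(12_800, 100)  # 128 ports of 100G
```

Under this assumption a 12.8 Tbps switch corresponds to 32 ports of 400G, or 128 ports of 100G when the same capacity is broken out at lower speed.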

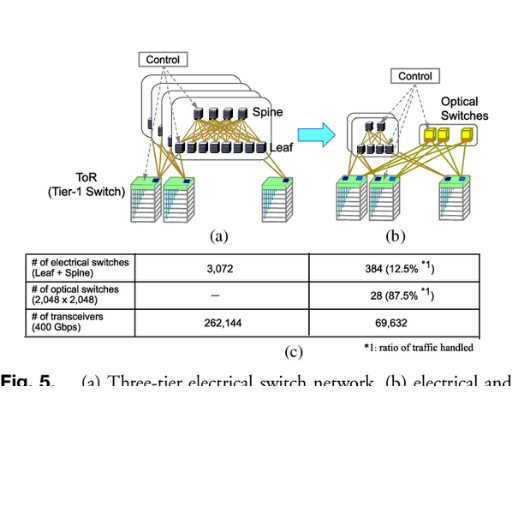

As with any network, its traffic patterns also need to be analyzed. Research states that east-west traffic, which refers to data transfer between servers within the same data center, constitutes over 85% of data movement. This change calls for the implementation of spine-leaf topologies to help alleviate bottlenecks and improve horizontal scalability.
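One way to reason about east-west bottlenecks in a spine-leaf design is the oversubscription ratio of each leaf switch: total server-facing bandwidth divided by total spine-facing bandwidth. The port counts below are assumptions chosen for illustration.

```python
def oversubscription_ratio(downlinks, downlink_gbps, uplinks, uplink_gbps):
    """Ratio of server-facing bandwidth to spine-facing bandwidth on one leaf."""
    return (downlinks * downlink_gbps) / (uplinks * uplink_gbps)

# Hypothetical leaf: 48 x 25G server ports, 6 x 100G uplinks to the spines
ratio = oversubscription_ratio(48, 25, 6, 100)  # 1200 / 600 = 2.0
```

A ratio of 1.0 means the leaf is non-blocking. Higher ratios are cheaper but rely on servers not all sending across the fabric at full rate simultaneously.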

To avoid prolonged downtime, reliability also needs to be fortified. Using switches with built-in redundancy, fault-tolerant architectures, and hot-swappable parts greatly increases operational continuity. Complementing these, robust security measures such as encryption and zero-trust access control help protect sensitive information against potential threats. A detailed evaluation of these considerations will allow organizations to select switching platforms that best suit their present needs as well as future demands.

When choosing a networking switch, understanding the difference between modular and fixed configuration switches is essential for designing a scalable and optimized infrastructure. As the name suggests, modular switches let you add or remove components such as line cards, fans, or power supplies, which provides customization and scalability. This enables organizations to adapt to changing requirements over time without replacing the entire device, minimizing hardware expenditure. For example, a modular switch chassis in a large enterprise can support thousands of ports through simple upgrades or additional modules.

Meanwhile, fixed configuration switches are economical and easy to deploy in smaller networks, but they come with constraints on scalability: predefined hardware and a set port count that cannot be expanded. As per industry statistics, fixed switches dominate deployments in small to medium-sized businesses because of their ease of use. However, their lack of flexibility poses obstacles for rapidly growing companies or those that require frequent hardware upgrades.

The two types also differ in performance metrics. Modular switches often offer greater backplane bandwidth, along with redundancy features like redundant supervisors or power supplies. These designs improve fault tolerance and help maintain uninterrupted service within critical networks. On the other hand, fixed switches tend to lack redundancy features, but their simplicity makes them reliable in predictable load environments.

Power and cooling requirements also deserve attention. Due to their greater component density, modular switches consume more power and their cooling solutions need to be more advanced. In contrast, fixed configuration switches are more compact and therefore, consume less energy while generating less heat, which is preferable in deployments where space is at a premium.

In summary, the specific needs, anticipated growth, and budget constraints of the organization will dictate the choice between modular and fixed configuration switches. Enterprises seeking scalability and long-term investment protection will find modular switches more useful, while fixed configuration switches will benefit smaller networks looking for straightforward, cost-effective solutions.

For high capacity demands, the consideration is always how the network infrastructure is designed to facilitate high data throughput while still providing optimal service levels. For example, I choose switches with high port density along with sophisticated routing features. They must also support link aggregation and Quality of Service (QoS). I look at how well the solution scales with future expansion and its interplay with some legacy components, allowing smooth operation and dependability under high traffic volumes.

Data center switching automation reduces the need for manual procedures and optimizes workflows. It makes systems more self-reliant and reduces human error and the reliability problems that follow from it. Automation allows quick adaptation to resource demands, simpler resource control, and easier modification of business workloads. With automation tools delivering consistent results, staff can concentrate on major technological matters, which raises productivity, reduces expenses, and lowers operational complexity.

Artificial Intelligence (AI), through intelligent automation, predictive analysis, and real-time decision-making, augments the efficiency of network services. AI tools continuously track the network’s traffic and help keep potential bottlenecks or issues from escalating into performance degradation. Networks that use machine learning algorithms can adapt dynamically to changing conditions, which improves resource allocation and reliability. Furthermore, AI adoption provides actionable operational insights, reduces downtime, enhances user experience, and simplifies troubleshooting.
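The continuous traffic tracking described above can be illustrated with a deliberately simple statistical check. This sketch flags a link utilization sample that deviates strongly from recent history using a z-score; it is a stand-in for the learned models a production system would use, and the sample values and threshold are assumptions.

```python
from statistics import mean, pstdev

def is_anomalous(history, latest, z_threshold=3.0):
    """Flag a sample whose distance from the recent mean exceeds z_threshold deviations."""
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > z_threshold

# Hypothetical link utilization samples, in percent
recent_utilization = [40, 42, 41, 39, 43, 40, 41]

spike = is_anomalous(recent_utilization, 95)   # far outside the recent range
normal = is_anomalous(recent_utilization, 42)  # within the recent range
```

A monitoring loop could feed each flagged sample into an automated response, such as rerouting traffic or raising an alert before users notice degradation.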

Cisco Smart Technologies offer a better system for improving performance, security, and scalability within networks. Machine learning and AI are incorporated into Cisco’s platforms, such as Cisco DNA Center, which facilitates automated network management through analytics. Industry studies suggest that organizations tapping into Cisco’s smart technologies can reduce network downtime by as much as 50% and increase operational efficiency by 20-25% owing to automated configurations and proactive monitoring.

Last Updated On : 3:33 pm, Jun 20, 2023

With tools like SecureX that offer automated threat detection and visibility, security becomes a core focus as well. Cisco manages these aspects through intent-based networking, ensuring that all components and policies are executed without exception. As an example, organizations using SecureX are able to cut down incident response times by 95%.




Deploying Cisco’s Smart Technologies enables businesses to increase operational agility, enhance the security posture of the organization, and effectively measure ROI all while ensuring a solid IT infrastructure that can sustain growth.

Spine and Leaf architecture is a structured design created to improve the scalability, performance, and dependability of modern data centers. It consists of a spine layer, which holds the high-capacity switches, as well as a leaf layer, which connects directly to servers and other network devices. All leaf switches are connected to every spine switch, which guarantees reliable and repeatable communication pathways. This configuration optimizes latency, facilitates efficient, congestion-free, bottleneck-free east-west movement, and supports fast-paced operation in high-demand settings. Its less complex structure also allows for ease of expansion with growing business needs.
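The full-mesh rule between the two layers can be sketched in a few lines. The example below builds the leaf-to-spine links for a fabric of assumed size and lists the equal-length paths between two leaves; the switch names and helper functions are hypothetical.

```python
from itertools import product

def build_fabric(num_spines, num_leaves):
    """Connect every leaf switch to every spine switch."""
    spines = [f"spine{i}" for i in range(1, num_spines + 1)]
    leaves = [f"leaf{i}" for i in range(1, num_leaves + 1)]
    links = set(product(leaves, spines))
    return spines, leaves, links

def paths_between(leaf_a, leaf_b, spines, links):
    """All leaf -> spine -> leaf paths between two leaf switches."""
    return [
        (leaf_a, spine, leaf_b)
        for spine in spines
        if (leaf_a, spine) in links and (leaf_b, spine) in links
    ]

spines, leaves, links = build_fabric(num_spines=4, num_leaves=8)
paths = paths_between("leaf1", "leaf5", spines, links)
```

With 4 spines and 8 leaves the fabric has 32 links, and any two leaves are connected by 4 paths of identical length, one through each spine. Adding a spine adds a path for every leaf pair, which is what makes the design predictable to scale.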

In the modern world, nearly all information passes through digital systems and applications. Reduced latency has a direct impact on user experience and productivity across multiple industries, and it is critical when data needs to be transmitted in real time. In financial trading platforms, for instance, a latency of a few milliseconds can determine whether a transaction succeeds, which affects the profit made. E-commerce platforms are also sensitive to latency: it has been reported that an additional 100 milliseconds of delay can lead to a 1 percent loss in sales.

Apart from that, new technologies such as autonomous vehicles depend heavily on low latency, since timely and accurate communication is essential for decision making. Gaming and augmented reality applications also require low latency, with about 20 ms as the maximum to avoid lag and keep interaction responsive. As more organizations adopt edge computing, the need for real-time data processing at the network edge further raises the importance of low latency. Prioritizing low latency improves performance, reliability, and agility, and provides a competitive edge in digital systems and applications.

Efficient optimization of real-time AI workloads entails a combination of approaches that enhance data handling, system design, and the allocation of resources. A key strategy involves the use of purpose-built hardware accelerators like GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units) which specialize in high-volume AI computations that require minimal time. These processors provide significant acceleration for deep learning tasks and inference operations when time is of the essence.

Furthermore, model compression techniques like quantization and pruning reduce the computational complexity of AI models. Quantization represents weights and activations in lower precision, which uses fewer resources; it suits real-time applications that can tolerate a small loss of accuracy. Pruning reduces model size and inference time by identifying and removing redundant parameters within the neural network.
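Both techniques can be shown on a plain list of weights. The sketch below uses symmetric 8-bit quantization and magnitude-based pruning, which are common simple variants chosen here as assumptions; real frameworks apply these per tensor or per channel with calibration, and the sample weights are made up.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127] with one scale."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # avoid a zero scale
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the integer representation."""
    return [q * scale for q in quantized]

def prune_by_magnitude(weights, threshold):
    """Zero out weights whose absolute value falls below the threshold."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]

weights = [0.5, -1.27, 0.01, 1.0]  # hypothetical layer weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
pruned = prune_by_magnitude(weights, threshold=0.1)
```

Each restored value differs from the original by at most half a quantization step, which is the accuracy traded for storing one byte per weight instead of four.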

Distributed computing is also critical. Frameworks like Apache Spark and TensorFlow’s distributed training support can split the workload among several nodes, which allows parallel execution and greater scalability. This reduces the bottlenecks that slow the flow of data, improving AI workload responsiveness and throughput.

Data locality and bandwidth optimization must also be addressed. Real-time workloads benefit from edge computing, which brings computation closer to the data source and eliminates round-trip delays to centralized cloud servers. This reduces latency and improves the responsiveness of AI decision making. Edge AI deployments, as highlighted in recent reports, can reduce latency by as much as 80 percent compared to traditional cloud environments, which is a vital difference for autonomous vehicles, predictive maintenance, and live video analytics.

Further gains come from adaptive resource management and extensive monitoring. Monitoring systems allow system resources to be tracked and adjusted automatically to meet defined performance levels, so that workloads scale elastically with changing demand. By continuously analyzing latency and throughput metrics and tuning model and system configuration in response, operators can proactively maximize the operational efficiency of AI workloads.
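A simple form of this feedback loop is proportional scaling: compare an observed latency metric with its target and resize the pool of inference replicas accordingly. The sketch below assumes a p95 latency metric and hypothetical replica limits; it illustrates the idea rather than any specific orchestrator's behavior.

```python
import math

def desired_replicas(current, p95_latency_ms, target_ms, min_replicas=1, max_replicas=16):
    """Scale the replica count in proportion to how far latency is from its target."""
    wanted = math.ceil(current * p95_latency_ms / target_ms)
    return max(min_replicas, min(max_replicas, wanted))

scale_up = desired_replicas(current=4, p95_latency_ms=150, target_ms=100)   # 6
scale_down = desired_replicas(current=4, p95_latency_ms=50, target_ms=100)  # 2
```

Clamping to a minimum and maximum keeps a noisy metric from scaling the workload to zero or exhausting the cluster.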

By integrating cutting-edge hardware with sophisticated optimization strategies, these techniques deliver the efficiency that real-time AI workloads need, satisfying the unforgiving requirements of contemporary AI applications.

Modern data center networks are expanding significantly with the adoption of 400G Ethernet, which meets the need for faster data transmission and increased bandwidth. This technology provides faster interconnections between servers, storage, and network switches for data-intensive operations, which in turn reduces latency. Moreover, 400G Ethernet improves scalability, letting infrastructure expand to accommodate the increasing demands of AI, machine learning, and cloud computing applications. With better energy efficiency and a lower cost per bit than earlier Ethernet generations, it is a good fit for future-proofing data centers. With 400G Ethernet, organizations strengthen their market position with lower-cost data centers that keep pace with evolving capacity and performance standards.
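The effect of the higher line rate is easy to quantify. The sketch below computes the idealized time to move a payload over a single link, assuming the full line rate is available and ignoring protocol overhead and congestion; the 1 TB payload is an arbitrary example.

```python
def transfer_seconds(payload_bytes, link_gbps):
    """Idealized transfer time at full line rate, ignoring protocol overhead."""
    return payload_bytes * 8 / (link_gbps * 1e9)

ONE_TERABYTE = 10**12  # bytes

time_100g = transfer_seconds(ONE_TERABYTE, 100)  # about 80 seconds
time_400g = transfer_seconds(ONE_TERABYTE, 400)  # about 20 seconds
```

Real transfers take longer than these figures, but the four-fold ratio between the two link speeds holds under the same assumptions.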

Virtualization is an enabling technology that allows modern cloud data centers to function at their full potential by making resource use efficient and scalable. It permits multiple operating systems to run on a single physical server by creating virtual instances of hardware or software, which significantly lowers operational costs. Virtualization also improves resource allocation, streamlines management, and enhances disaster recovery capabilities. It also enables responsive workload management, which lets cloud computing support dynamically evolving business needs.


Security and predictive network performance are also areas of study in quantum networking and AI-driven routing algorithms. These are concerns for businesses that depend on reliable connectivity, like healthcare, finance, and logistics, because networks need to evolve with growing demands and more intricate uses.

A: In contemporary networking architecture, a data center switch is a crucial component because it enables the distribution of data across myriad locations within a network. It provides high bandwidth and low latency access, which is critical for cloud computing and high-volume data processing centers.

A: A modular switch provides agility and growth potential within data center settings. It supports modular port and line card installation and uninstallation, which makes it easier for companies to design and grow their network infrastructure.

A: The benefits include easier access and less clutter thanks to the top-of-rack cabling configuration, reduced traffic latency at the rack level, and better overall scalability of the data center network.

A: With a network operating system, data center switches can offer more features to the users, such as effective network control and administrative tasks, which improve network dependability and efficiency.

A: Within a data center framework, a spine switch is a high-capacity switch that manages significant data traffic. It interfaces with multiple leaf switches, facilitating data movement across the network fabric. It is fundamental to a two-tier architecture, providing low latency and high bandwidth interconnection.

A: Reliable data center switches are important for the cloud network infrastructure as they provide constant data flow, high availability, and fault tolerance. They cater to the ever-evolving nature of cloud services and applications that demand robust and scalable networking frameworks.

A: In choosing a network switch for an advanced data center, take into account the level of integration with already deployed systems, potential growth in bandwidth-demand applications, connection latency, scalability, data center and cloud usage scenarios, network operating system, and the overall agility of the infrastructure.

A: Leaf switches serve as the access layer in a data center network, connecting servers and top-of-rack switches to the network fabric. They aid in effective data routing and distribution, which enhances the performance and scalability of the data center architecture.

A: Within the two-tier framework, network switches (spine and leaf switches) simplify the networking model by reducing the number of hops that data has to traverse. This model improves the architecture’s scaling capabilities by optimizing the flow of data between compute resources and end users.

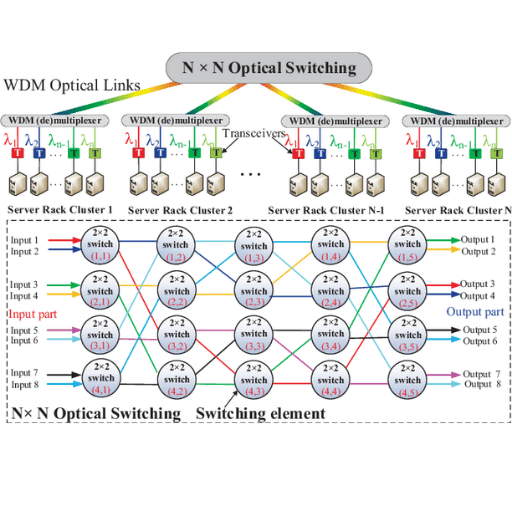
