As data center workloads continue to evolve toward AI, HPC, and cloud-native applications, the demand for ultra-high bandwidth and low-latency networking has never been greater. The NVIDIA ConnectX-7 SmartNIC is designed to meet these needs, delivering up to 400Gb/s of throughput with advanced offload, security, and virtualization capabilities. By integrating cutting-edge technologies such as RoCEv2, GPUDirect, and line-rate encryption, ConnectX-7 redefines how compute, storage, and network layers interact in modern data centers.

ConnectX-7 is the seventh generation of NVIDIA's ConnectX family of high-performance network adapters (SmartNIC / NIC). It targets demanding workloads such as AI training clusters, high-performance computing (HPC), and cloud data centers, and it extends earlier generations with port speeds of up to 400 Gb/s and stronger intelligent network processing, hardware offload, and security capabilities.

ConnectX-7 is typically deployed with NVIDIA Quantum-2 InfiniBand switches or NVIDIA Spectrum-4 Ethernet switches, often alongside NVIDIA BlueField DPUs, to build the high-speed interconnect architecture of AI factories and large-scale data centers.

ConnectX-7 supports both InfiniBand and Ethernet protocols. Its core design focuses on high throughput, ultra-low latency, and in-network computing, leveraging hardware acceleration and offload mechanisms to achieve efficient data transfer and processing. This not only reduces CPU workload but also enhances network security, efficiency, and scalability.

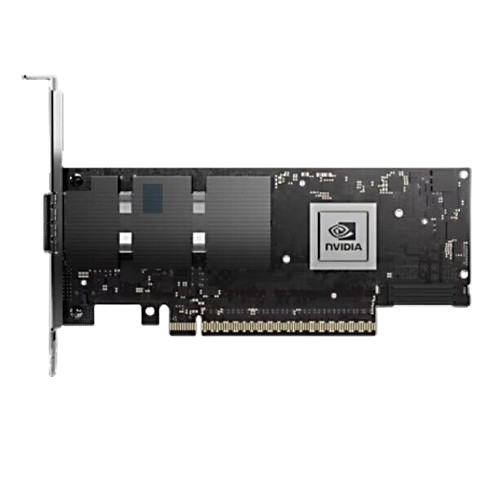

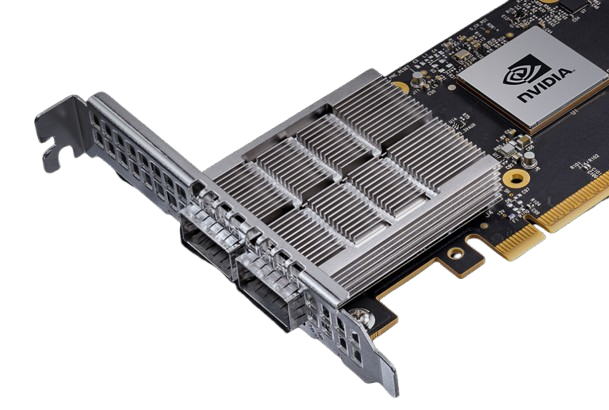

ConnectX-7 is built around a single-chip ASIC (Application-Specific Integrated Circuit) with a PCIe Gen 4.0/5.0 x16 host interface (16GT/s or 32GT/s per lane). Depending on the card, it exposes a single 400Gb/s port or two 200Gb/s ports through connectors such as OSFP or QSFP112, with SFP56 used on lower-speed variants. Socket Direct technology splits the host connection in dual-socket servers into two x16 interfaces, one attached to each CPU, so that traffic does not have to cross the inter-socket link. The adapter also carries on-board storage (such as a 256Mbit SPI Quad Flash for firmware) and a Hardware Root-of-Trust that supports secure boot and secure firmware updates.
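To see why the PCIe generation matters for a 400Gb/s port, the link budget can be estimated from the transfer rates quoted above. This is a rough sketch: it accounts only for the 128b/130b line encoding and ignores packet-level protocol overhead, and the "two Gen4 x16 links" case is an assumption about how Socket Direct would be used.

```python
# Rough PCIe link budget per direction.
# Only 128b/130b line encoding is modeled; TLP/DLLP overhead is ignored.
ENCODING_EFFICIENCY = 128 / 130

def pcie_gbps(gt_per_s: float, lanes: int = 16) -> float:
    """Approximate usable bandwidth of one PCIe link in Gb/s (one direction)."""
    return gt_per_s * lanes * ENCODING_EFFICIENCY

gen4_x16 = pcie_gbps(16)            # about 252 Gb/s
gen5_x16 = pcie_gbps(32)            # about 504 Gb/s
two_gen4_x16 = 2 * gen4_x16         # assumed Socket Direct layout, about 504 Gb/s

for name, bw in [("Gen4 x16", gen4_x16),
                 ("Gen5 x16", gen5_x16),
                 ("2 x Gen4 x16", two_gen4_x16)]:
    print(f"{name}: {bw:.0f} Gb/s, covers a 400 Gb/s port: {bw >= 400}")
```

Under these assumptions a single Gen4 x16 link cannot feed a 400Gb/s port at line rate, while a Gen5 x16 link or two Gen4 x16 links can.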

The adapter complies with InfiniBand specification v1.5 and IEEE 802.3 standards. On InfiniBand it supports rates from SDR to NDR (up to 400Gb/s, using PAM4 signaling and RS-FEC error correction). On Ethernet it supports 10GbE to 400GbE, along with features such as link aggregation, VLAN tagging, and congestion notification. The data processing pipeline is modular, comprising ingress/egress processing, protocol engines, and the PCIe interface, and it preserves end-to-end data integrity.
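The effect of PAM4 and RS-FEC on the wire can be illustrated with a short calculation. The sketch below uses the IEEE 802.3 framing for 100Gb/s-per-lane Ethernet (256b/257b transcoding plus RS(544,514) FEC) as an assumption; the text above does not give the corresponding NDR InfiniBand framing, so treat the numbers as the Ethernet case only.

```python
from fractions import Fraction

# Assumed framing for a 100 Gb/s Ethernet lane (IEEE 802.3):
TRANSCODING = Fraction(257, 256)   # 256b/257b transcoding
RS_FEC = Fraction(544, 514)        # RS(544,514) forward error correction
PAM4_BITS_PER_SYMBOL = 2           # four amplitude levels carry 2 bits

def lane_line_rate_gbps(payload_gbps: int) -> Fraction:
    """Bit rate on the wire for one lane after transcoding and FEC overhead."""
    return payload_gbps * TRANSCODING * RS_FEC

line_rate = lane_line_rate_gbps(100)               # 106.25 Gb/s on the wire
symbol_rate = line_rate / PAM4_BITS_PER_SYMBOL     # 53.125 GBd
lanes_for_400g = 400 // 100                        # four lanes per 400G port

print(float(line_rate), float(symbol_rate), lanes_for_400g)
```

PAM4 halves the symbol rate needed for a given bit rate, which is what makes 100Gb/s per lane practical; the FEC overhead is the price paid for the reduced noise margin.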

The core idea of ConnectX-7 is "hardware acceleration + intelligent network processing." On the data path, RDMA moves data directly between host or GPU memories without CPU involvement or intermediate memory copies, replacing the traditional CPU-driven processing flow. In parallel, offload engines move work such as TCP/IP checksum and segmentation, inline encryption/decryption, virtualization, and traffic shaping into the NIC hardware, freeing host resources.
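To make checksum and segmentation offload concrete, the sketch below shows in software the work that the host CPU no longer has to do when the NIC handles it. The function names and the 1460-byte segment size are illustrative assumptions, not part of any NVIDIA API; the checksum follows the standard Internet checksum algorithm (RFC 1071).

```python
def internet_checksum(data: bytes) -> int:
    """16-bit ones' complement checksum (RFC 1071), as used by IP/TCP/UDP."""
    if len(data) % 2:
        data += b"\x00"                      # pad odd-length input
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]
    while total >> 16:                       # fold carries back into 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF

def segment(payload: bytes, mss: int = 1460) -> list[bytes]:
    """Split one large send into MSS-sized pieces, as segmentation offload does."""
    return [payload[i:i + mss] for i in range(0, len(payload), mss)]

# One 64 KiB send handed to the NIC becomes many wire-sized segments.
segments = segment(bytes(65536))
print(len(segments), len(segments[-1]))

# Worked example from RFC 1071.
sample = bytes([0x00, 0x01, 0xF2, 0x03, 0xF4, 0xF5, 0xF6, 0xF7])
print(hex(internet_checksum(sample)))
```

With offload enabled, the host submits the large buffer once and the adapter performs the per-segment splitting and checksumming in hardware.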

In addition, ConnectX-7 supports in-network computing through the SHARP protocol: aggregation operations (such as gradient summation) are performed inside the network, at the NIC or switch level, rather than on the hosts, which reduces the traffic each node has to exchange. Finally, GPUDirect RDMA lets the adapter read and write GPU memory directly over PCIe, enabling zero-copy GPU-to-GPU communication across nodes and complementing NVLink inside a node. This is crucial for distributed training (e.g., Transformer/LLM) and improves AI and HPC efficiency.
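The benefit of in-network aggregation can be illustrated with a toy model. The sketch below is not the SHARP API; it is a simplified comparison, under the assumption that the network returns the element-wise sum of all nodes' gradients, between the bytes each node sends in a classic ring all-reduce and in an idealized in-network reduction. All function names are hypothetical.

```python
def switch_aggregate(gradients: list[list[float]]) -> list[float]:
    """Element-wise sum of per-node gradient vectors, as an in-network
    reduction would deliver it (toy model, not the SHARP API)."""
    return [sum(column) for column in zip(*gradients)]

def ring_bytes_per_node(n_nodes: int, n_elems: int, elem_bytes: int = 4) -> float:
    """Bytes each node sends in a ring all-reduce: 2 * (N - 1) / N of the buffer."""
    return 2 * (n_nodes - 1) * n_elems * elem_bytes / n_nodes

def in_network_bytes_per_node(n_elems: int, elem_bytes: int = 4) -> int:
    """Bytes each node sends when the network performs the reduction:
    the buffer goes up once and the result comes back."""
    return n_elems * elem_bytes

node_gradients = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
reduced = switch_aggregate(node_gradients)
print(reduced)

print(ring_bytes_per_node(8, 1_000_000), in_network_bytes_per_node(1_000_000))
```

In this model the per-node send volume approaches half that of the ring as the node count grows, and the reduction completes in a number of steps that depends on the depth of the switch tree rather than on the number of nodes.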

The NVIDIA ConnectX-7 SmartNIC is designed to deliver ultra-high throughput and intelligent networking for a variety of accelerated computing environments. Its advanced offload capabilities, RDMA support, and in-network computing make it ideal for the following key scenarios:

In AI training and inference, ConnectX-7 enables efficient GPU-to-GPU and node-to-node communication through GPUDirect RDMA and SHARP aggregation. It is widely used in AI factories, LLM training clusters, and distributed inference platforms, where communication latency directly affects training speed and overall throughput.

In supercomputing systems, ConnectX-7 provides deterministic low-latency communication and lossless RDMA transfers for scientific simulations, CFD, molecular dynamics, and other compute-intensive workloads.

ConnectX-7 supports multi-tenant virtualization, SR-IOV, and DOCA-based hardware acceleration, making it ideal for large-scale cloud providers that need secure and isolated high-bandwidth networking between thousands of virtual machines or containers.

For storage, NVMe-over-Fabrics (NVMe-oF) and RoCEv2 give ConnectX-7 high-speed, low-latency access to distributed storage systems. This helps accelerate AI data pipelines and keeps I/O efficient for analytics and database workloads.

In edge and telecom deployments, ConnectX-7 provides hardware-based encryption, traffic shaping, and real-time packet processing, delivering secure and efficient connectivity for 5G UPF, edge AI, and IoT analytics applications.

The NVIDIA ConnectX-7 SmartNIC enables high-speed data connections between AI, HPC, and cloud infrastructures. It supports 400G Ethernet and NDR 400G InfiniBand networks, allowing flexible deployment in data centers that require low latency and high throughput.

In a typical AI cluster, each GPU node connects to the network via ConnectX-7 adapters. These adapters link to NVIDIA Spectrum-4 Ethernet switches or NVIDIA Quantum-2 InfiniBand switches, forming a unified high-performance switching fabric. The system supports direct connections to storage servers, ensuring efficient data movement between the compute and storage layers.

- GPU Nodes: Compute resources for AI training or inference
- ConnectX-7 Adapters: Interfaces connecting compute and network
- Spectrum-4 Ethernet Switch → 400G Ethernet Network
- Quantum-2 InfiniBand Switch → 400G InfiniBand Network
- Storage: High-speed shared storage or NVMe-over-Fabrics

This hybrid architecture allows seamless scaling between Ethernet and InfiniBand, optimizing performance for AI and cloud workloads.
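As a rough illustration of how such a fabric scales, the sketch below sizes a non-blocking two-tier (leaf/spine) network for a given number of ConnectX-7 ports. It is a simplified model under stated assumptions: every switch has the same port count, half of each leaf's ports face adapters and half face spines, and the default radix of 64 is an assumption rather than a figure from this article. The function name is hypothetical.

```python
import math

def two_tier_fabric(n_adapter_ports: int, radix: int = 64) -> dict:
    """Size a non-blocking leaf/spine fabric (simplified model).

    radix is the number of ports per switch (assumed identical for
    leaves and spines).
    """
    down_ports = radix // 2                  # leaf ports facing adapters
    max_adapter_ports = radix * down_ports   # limit of a two-tier design
    if n_adapter_ports > max_adapter_ports:
        raise ValueError("needs a third switching tier")
    leaves = math.ceil(n_adapter_ports / down_ports)
    spines = math.ceil(leaves * down_ports / radix)
    return {"leaves": leaves, "spines": spines,
            "max_adapter_ports": max_adapter_ports}

# Example: 32 GPU nodes with 8 adapter ports each.
plan = two_tier_fabric(32 * 8)
print(plan)
```

The same calculation applies to either the Ethernet or the InfiniBand side of the fabric, since only the port count per switch enters the model.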

The NVIDIA ConnectX-7 SmartNIC represents a major step forward in data center networking, combining 400Gb/s bandwidth, intelligent hardware offload, and in-network computing in a single adapter. Deployed with NVIDIA Quantum-2 InfiniBand or Spectrum-4 Ethernet switches, it bridges compute, storage, and networking layers with high performance and scalability.

By supporting both InfiniBand and Ethernet protocols, ConnectX-7 delivers the flexibility required for diverse workloads, from large-scale AI training and high-performance computing (HPC) to cloud virtualization and high-speed storage networks. Its ability to accelerate data paths, offload complex protocols, and enable GPUDirect communication makes it well suited to next-generation AI factories and hyperscale data centers.

Looking ahead, as AI and data-driven applications continue to push the boundaries of computing, ConnectX-7 is positioned to remain an important building block for faster, more secure, and more energy-efficient data movement across advanced digital infrastructures.